Making a repo is probably a good starting point as I’m interested in joining the discussion and contributing where needed.

I think we should start a concrete discussion about design and first steps inside a repo with those interested in contributing. That might make things a bit more organized, since discussions are currently scattered across several forum posts.

It would be awesome if the Apollo integration worked seamlessly with Minimongo collections as a local cache store (not just Redux). This provides several benefits:

- Since all of our React containers are already reactively wired up to client-side Meteor collections, the transition to Apollo would be that much easier: we wouldn't need to rewrite any of them. Just fetch data via GraphQL, Apollo inserts it into our local collections, and components update accordingly.

- You get the nice mongo style query api to get data out of the local cache.

- You don't have to learn Redux right away, which is quite different from what Meteor devs are currently used to. We already use Redux for local state (and Minimongo as a local DB cache), but I suspect some people would appreciate a more incremental move from Meteor -> Meteor + Apollo, with the option to stick with collections.

A lot of people seem to love Mongoose, and now there's graffiti-mongoose, but I wonder if so much magic is good. And Mongo documents are already JSON, so why do we need an ORM/ODM? Is it a necessary abstraction?

We were thinking of using MongoDB, but now we see a lot of people using SQL. Postgres seems to give you the best of both worlds, because you get a SQL database that can also store JSON documents.

So you can use Postgres, and if you really want an ORM you can use Bookshelf, which is built on Knex. But regardless, you can always build your queries with Knex directly and avoid too many abstractions.

I'm kind of confused about why you'd use Minimongo with Apollo. I thought the point of Apollo and GraphQL is that it has a client-side cache.

I think in that case it’s not a good idea to migrate to Apollo. A big part of its benefits are that you don’t use minimongo anymore, and it is a GraphQL-aware cache. Using Minimongo, you lose a lot of the benefits you get with GraphQL in the first place.

Forgive my ignorance or the dumb question…

But if GraphQL is a generic data representation protocol, then (1) in theory, everything communicated via DDP and stored in Minimongo could also be represented using GraphQL queries and mutations.

If (1) is true, then with the right integrations, a user could in theory use Blaze and Minimongo with GraphQL as the underlying data protocol, or be given the option to choose either and even switch later… Is this feasible, and what are the drawbacks of this approach?

GraphQL’s value is in the query syntax and schema definition - it gives UI developers a great way to express data requirements. It could also be used as a generic transport but that’s not what we are trying to achieve with Apollo.

DDP is a great transport for syncing Mongo to Minimongo - it’s the best for that since that’s exactly what it was designed for. GraphQL is not designed for that.

Makes sense, thanks Sashko.

Hmm, perhaps I have to dig deeper into Apollo. We're using Minimongo as a client-side cache only: basically the equivalent of a Redux state object, except that here the "object" is the Minimongo DB. By reactive, I only meant that our React components update when we make changes to the local cache (which is what happens if you wire up Redux and make changes to the Redux state object). So there are no Meteor subscriptions, etc.; we load data via regular HTTP calls to an API and insert that data into Minimongo. We're slowly transitioning from Meteor subscriptions -> REST API -> Apollo (?).

Sure, Apollo takes advantage of a very particular cache format that lets it handle GraphQL queries correctly - you could definitely store that cache format inside Minimongo just because, but I don’t think that would help you since if you queried that Minimongo collection directly you would get some weird stuff. In Apollo you query the cache with GraphQL.
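To see why querying the store directly would return "weird stuff", here is a toy normalizer. It is not Apollo's implementation; it only assumes, for illustration, that every cacheable object carries `__typename` and `id` fields, is stored once under a `"__typename:id"` key, and is replaced in its parent by a reference. The helper names (`dataId`, `normalize`) are hypothetical.

```javascript
// Toy normalizer: NOT Apollo's code, just the general idea of a normalized store.
// Assumption: cacheable objects are identified by __typename + id.
function dataId(obj) {
  return `${obj.__typename}:${obj.id}`;
}

// Returns what the parent should hold for `value`, writing entities into `store`.
function normalize(value, store) {
  if (Array.isArray(value)) {
    return value.map((item) => normalize(item, store));
  }
  if (value === null || typeof value !== "object") {
    return value; // scalars stay inline
  }
  const fields = {};
  for (const [key, field] of Object.entries(value)) {
    fields[key] = normalize(field, store);
  }
  if (value.__typename === undefined || value.id === undefined) {
    return fields; // no identity, so keep it nested in its parent
  }
  const id = dataId(value);
  store[id] = { ...store[id], ...fields }; // merge with anything already cached
  return { ref: id }; // the parent holds a reference, not a copy
}

const store = {};
const root = normalize({
  __typename: "Post", id: 1, title: "Hello",
  author: { __typename: "User", id: 7, name: "Ann" },
  comments: [
    { __typename: "Comment", id: 10, text: "Nice",
      author: { __typename: "User", id: 7, name: "Ann" } },
  ],
}, store);

console.log(root); // { ref: 'Post:1' }
// store["Post:1"].author is { ref: "User:7" }, not the user object itself,
// and User:7 is stored only once even though it appears twice in the result.
```

Reading `store["Post:1"]` on its own gives you references that only make sense when the cache is queried back through GraphQL, which is the point made above.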

Oh wow! A big lightbulb just went on in my head. I was thinking that Apollo’s GraphQL was just for querying the server. Now everything makes a lot more sense.

I guess that’s what comes from reading random forum posts instead of actually reading the Apollo docs.

The way I'd reword it: you query the GraphQL cache with Apollo Client's query-fetching API.

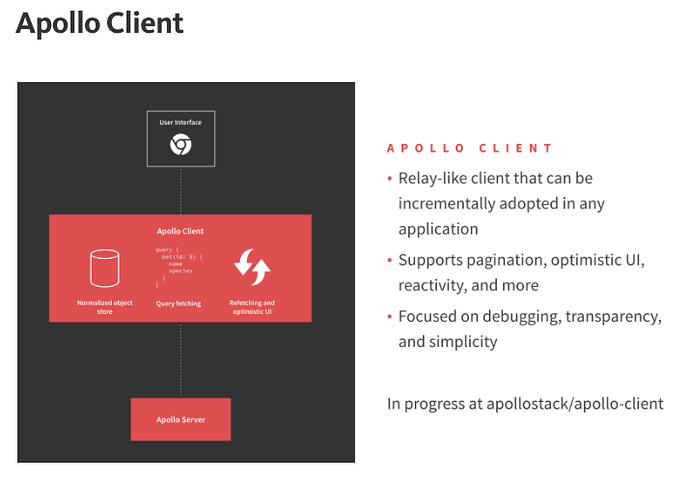

A graph in your Medium article:

Is the cache we are speaking of the normalized object store? Is the query fetching something that, if using Blaze, we'd (in theory) put inside a helper and use to query the object store? What is the refetching part; is this reactivity? If it is the reactivity part, is it applied selectively to a query or to a field?

And what would the blaze-apollo abstraction do for us on top of the Apollo Client? Would it somehow integrate Tracker into the mix?

Ok wow yes, like @babrahams I did not understand this delightful bit. Gotta dig in a bit I suppose - sounds great! Looking forward to messing around with it in 1.5.

Very little! The whole idea is Apollo Client does basically everything, and the Blaze integration does just enough that putting the data into Blaze feels natural. For example:

- The React integration is a higher-order component that passes data via props

- The Angular 2 integration is a wrapper that gives you an RxJS observable, that Angular handles natively

- The Blaze integration is… probably something that lets you do a query in a helper, and integrates with Tracker to make the template re-render at the right time.

That diagram is pretty vague, but let me try to explain:

- You call `watchQuery`, and it gives you an observable that initially returns nothing (this is like the initial `find` in Meteor that returns nothing while the data is loading).

- Apollo calls the server with the query you passed, and puts the data in the cache/store (this is similar to the Meteor subscription).

- The cache/store updates, the query runs against the cache, which gets the data that was just put into the cache (this is similar to the Meteor Minimongo query)

- You get a new result in the observable (this could trigger a Tracker re-run in the Blaze integration)
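The steps above can be modeled with a small toy client. Everything here (`createClient`, `fakeServer`, and this `watchQuery`) is hypothetical and far simpler than Apollo Client; the comments map each part to the steps in the list. In a Blaze integration, the subscriber callback is roughly where a Tracker re-run could be triggered.

```javascript
// Toy model of the watchQuery flow; NOT Apollo Client's real API.
function createClient(fetchFromServer) {
  const cache = {};    // stands in for the normalized store
  const watchers = []; // callbacks that re-run a query when the cache changes

  function writeToCache(key, data) {
    cache[key] = data;
    // Step 3: the store changed, so every watched query re-runs against it.
    watchers.forEach((rerun) => rerun());
  }

  function watchQuery(key) {
    return {
      subscribe(onResult) {
        // Step 4: each re-run pushes a fresh result to the subscriber.
        watchers.push(() => onResult(cache[key]));
        // Step 1: nothing is cached yet, like an early Meteor find().
        onResult(cache[key]);
        // Step 2: ask the server, then put the answer into the store.
        fetchFromServer(key).then((data) => writeToCache(key, data));
      },
    };
  }

  return { watchQuery };
}

// Usage with a fake server that answers immediately.
const fakeServer = (key) => Promise.resolve({ key, title: "Hello" });
const results = [];
createClient(fakeServer).watchQuery("post:1").subscribe((r) => results.push(r));
// Right away, results is [undefined]; once the promise settles it also
// contains { key: "post:1", title: "Hello" }.
```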

You can mess around with it now as well! Especially if you are using React or Angular 2 in your app, since those integrations are pretty nice.

@sashko Just to add some more feedback since it might be helpful: when I first looked at Apollo Client several months ago, I also thought it was just a query wrapper with a cache that it used internally… It wasn't until I tried it out, had issues with stale (cached) data, and asked about `forceFetch` that I realized you were supposed to query to get the cache, and only force a fetch when needed.
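The cache-first behaviour described above can be sketched with a toy query function. This is a hypothetical model, not Apollo Client's code; the option name only mirrors the `forceFetch` flag mentioned in the post, and `createQueryCache`, `server` and `client` are made-up names.

```javascript
// Toy cache-first query with a forceFetch escape hatch (hypothetical names).
function createQueryCache(fetchFromServer) {
  const cache = new Map();
  let networkCalls = 0;

  async function query(key, { forceFetch = false } = {}) {
    if (!forceFetch && cache.has(key)) {
      return cache.get(key); // served from the cache: no round trip, possibly stale
    }
    networkCalls += 1;
    const data = await fetchFromServer(key);
    cache.set(key, data); // refresh the cache for later readers
    return data;
  }

  return { query, networkCalls: () => networkCalls };
}

// A fake server whose data changes on every call, to make staleness visible.
let version = 0;
const server = async (key) => ({ key, version: ++version });
const client = createQueryCache(server);

(async () => {
  const a = await client.query("feed");                       // network
  const b = await client.query("feed");                       // cache (stale)
  const c = await client.query("feed", { forceFetch: true }); // network again
  console.log(a.version, b.version, c.version, client.networkCalls()); // 1 1 2 2
})();
```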

Perhaps the docs could use something more prominent to state the value prop? I also haven’t read them recently so maybe they’ve also changed a lot since then. (though I need to re-read with the new API changes!).

We’re redoing basically 100% of the docs right now, I better hope the new ones make it clear what the thing does :]

I used to use Redux heavily (and still do in a large app), but now with Apollo Client and the container pattern, using Redux or MobX for global state is really overkill. Typically I just store a handful of keys for modals, alert banner content, viewer token/id, etc…

Previously (a year ago) it was really hard to pass props down through many layers, so Redux was attractive for that… but with the spread operator you can pass props down into the child with `{...this.props}`, and it becomes much more maintainable (and the child should of course declare prop types to keep it maintainable).

Redux was also helpful for taking async logic out of the view… but Apollo takes 90% of that away, and local operations can go into a parent "data container" to retain the same separation from the view (instead of Redux action creators).

Lastly, I was going to use Redux for some global state… which works fine, but if you don't have a complex setup you can use context to provide the same thing: a topmost component that holds global state for all of the UI (note: I haven't used this in prod… yet):
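To make the trade-off concrete, the sketch below models props as plain objects. A JSX spread attribute behaves like object spread (Babel compiles it to an object merge), so the child receives every key of the parent's props; `parentProps` and `PostTitle` are hypothetical names used only for illustration.

```javascript
// Props modeled as plain objects (a simplification of what JSX compiles to).
const parentProps = { postId: 42, title: "Hello", onDelete: () => {}, theme: "dark" };

// Like <PostTitle {...parentProps} />: every key is forwarded.
const spreadProps = { ...parentProps };

// Like <PostTitle {...parentProps} title="Draft" />: later attributes win.
const overriddenProps = { ...parentProps, title: "Draft" };

// Like <PostTitle title={parentProps.title} />: only what is named is passed.
const explicitProps = { title: parentProps.title };

console.log(Object.keys(spreadProps));   // [ 'postId', 'title', 'onDelete', 'theme' ]
console.log(overriddenProps.title);      // Draft
console.log(Object.keys(explicitProps)); // [ 'title' ]
```

Spreading is less typing, while explicit props document exactly what the child depends on; declared prop types narrow that gap.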

```jsx
import React from "react";
import ReactDOM from "react-dom";

const App = React.createClass({
  getInitialState: () => ({}),

  childContextTypes: {
    globalState: React.PropTypes.object.isRequired
  },

  // A regular method, not an arrow function: an arrow here would not bind
  // `this` to the component, so `this.state` would not be the App's state.
  getChildContext() {
    return { globalState: this.state };
  },

  componentDidMount() {
    // use this to set global state if you really have to, otherwise use context
    window.setGlobalState = (obj) => {
      this.setState(obj);
    };
  },

  render() {
    return this.props.children;
  }
});

ReactDOM.render((
  <App>
    {/* bring in react-router routes */}
    {require("./routes").default}
  </App>
), document.getElementById("react-root"));

// some child container in another file
// (it also needs React imported and a Home component in scope):
const Container = React.createClass({
  contextTypes: {
    globalState: React.PropTypes.object.isRequired
  },

  render() {
    return <Home someGlobalCounter={this.context.globalState.counter} />;
  }
});
```

Note that you could make your own higher-order component so that it works much like `connect` in Redux… perhaps something like `connectGlobalState(Post)`, so that `globalState` and `setGlobalState` would be available via props.

@SkinnyGeek1010 are you concerned at all that React says using context heavily and for state is an anti-pattern? https://facebook.github.io/react/docs/context.html#when-not-to-use-context

Dan Abramov also pointed out recently on Twitter that using the spread operator on props is most likely an anti-pattern.

I feel like this is the kind of thing we shouldn’t do - quote one tweet from someone talking offhand about what their preferences might be.

@sashko it's not just someone; it's the creator of Redux, who is also a core React dev. Also, just look at an example component using the spread operator:

```jsx
<Test {...this.props} />
```

versus props passed in explicitly:

```jsx
<Test a={a} b={b} />
```

Which example looks 'correct', and which is easier to test and debug?

Just some food for thought. I guess devs can build things however they want; I just want to make sure that, for something as new as Apollo, example code and best practices don't point new devs toward a 'best practice' that came out of an informal forum thread like this one. That could lead to a lot of code being tossed out in the future, just because it seemed like the easiest way to set something up.