I think the information you hold, when shared with the rest of us, can help us all contribute to taking that ideal Meteor testing framework from being “somewhere deep in [your] mind” to being a reality. Currently, what it takes to build that ideal Meteor testing framework, and the challenges involved, is only intimately known by a few (you, the guys behind Velocity, @arunoda, etc). I believe this dream needs to become a reality, and we need to make it a first-class priority.

You’ve given a few abstract examples of the challenges faced here, but I think a conversation about the specifics (which already seems to be unfolding here) is in order if we ever plan to achieve this.

What does “injecting code at build level” actually mean (i.e. in depth)?

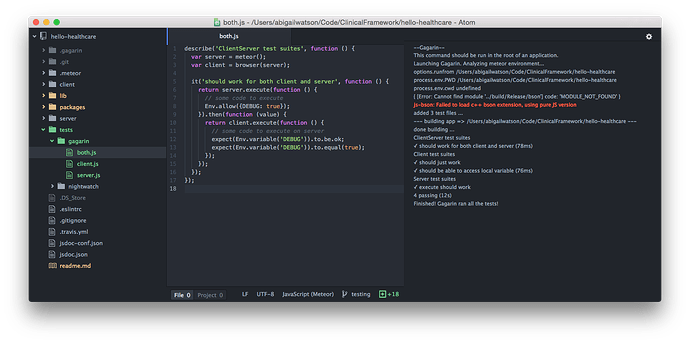

This seems to go hand in hand with “injecting code at build level.” It’s not efficient to build your whole application just to run a unit test that could run more quickly without being backed by your application–is that the idea? It takes Gagarin something like 30 seconds on average to build your app before your tests can run. Velocity has pulled off some tricks to do this faster by “injecting” code into an already built app–is that the idea? It would be nice to do unit tests under the same hood as Gagarin, so you’re not using multiple tools for the same class of job.

It seems that the main issue isn’t even hot reloading of code, but rather patching state back to exactly the “state” it needs to be in to make your tests reproducible 100% of the time (along with any fixtures your tests may need). We can hot reload code into the runtime pretty easily, and basically in an instant for a small number of changed files (even with all the transpilation that goes on in build steps), but there is no guarantee that the application state will be exactly what it needs to be after running the first test, let alone after many tests. This is especially true given the opaque nature of closures, i.e. where state is hidden as private variables within closures. You could take snapshots of all of minimongo and put it back to where it was before each test, but that doesn’t mean other code won’t have changed private variables within closures. Would snapshotting and replacing the entire window object do the trick? I’m not sure. Are there still artifacts lurking in the runtime?
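To make the closure problem concrete, here’s a minimal sketch in plain JS. Everything in it is hypothetical: `makeCounter` stands in for any module that keeps private state, and `store` is just a plain object standing in for something like a minimongo collection (it is not Meteor’s API). The snapshot/restore helpers are deliberately naive and only cover state we can actually reach.

```javascript
// Hypothetical module under test: `count` is private to the closure.
function makeCounter() {
  let count = 0; // hidden state, unreachable from the outside
  return {
    increment() {
      count += 1;
      return count;
    },
  };
}

// Visible state; a plain-object stand-in for a client-side collection.
const store = { docs: [] };

// Naive snapshot/restore: deep-copies only what we can reach.
const snapshot = (state) => JSON.parse(JSON.stringify(state));
const restore = (target, saved) => {
  for (const key of Object.keys(target)) delete target[key];
  Object.assign(target, snapshot(saved));
};

const counter = makeCounter();
const before = snapshot(store);

// "Test 1" mutates both the visible and the hidden state.
store.docs.push({ _id: 'a' });
counter.increment();

// Roll back before "test 2".
restore(store, before);

const visibleReset = store.docs.length === 0;  // true: the store is clean again
const hiddenReset = counter.increment() === 1; // false: the closure still remembers test 1
console.log({ visibleReset, hiddenReset });
```

So the visible store goes back to where it was, but the counter carries over from the first test. That’s the part a collection-level snapshot can’t fix on its own.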

On a side note, Redux, through being unidirectional, functional, and immutable, allows for such testing today (so long as you maintain its functional principles). Maintaining these principles in all aspects of an app’s code is a hard pill to swallow. It’s definitely not possible for non-functional apps already built, and likely may never be fully possible even for new apps unless you wanna embrace the functional approach wholeheartedly. Even tools like React + Redux likely don’t get that right without being somewhat of a “leaky abstraction”; something like Cycle JS would likely be a far better option (in pure JS land). Even then, it comes at the loss of many of the good aspects of OOP, which (without making this the main topic for debate) is still the best tool for a great number of problems, and I would definitely say is an easier way to learn programming than functional programming (though perhaps that’s like saying English is easier to learn than Chinese because I learned English first). Regardless, OOP is definitely not going away and is still the predominant style of coding, so I think we should find a way to offer, with traditional coding styles, a lot of what Redux offers today in terms of time traveling “debugging.”
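For anyone who hasn’t seen why the functional approach makes this easy, here’s a rough sketch of the idea in plain JS. It doesn’t use the actual Redux library, and the names (`reducer`, `log`, `stateAt`) are made up for illustration. The only assumption is that all state changes go through a pure function of `(state, action)`.

```javascript
// Pure reducer: the next state depends only on (state, action),
// and the previous state is never mutated.
function reducer(state, action) {
  switch (action.type) {
    case 'add':
      return { ...state, todos: [...state.todos, action.text] };
    case 'clear':
      return { ...state, todos: [] };
    default:
      return state;
  }
}

const initialState = { todos: [] };

// A recorded log of everything that "happened" in the app.
const log = [
  { type: 'add', text: 'write test' },
  { type: 'add', text: 'make it pass' },
  { type: 'clear' },
];

// "Time travel": rebuild the state after the first n actions, from scratch.
const stateAt = (n) => log.slice(0, n).reduce(reducer, initialState);

console.log(stateAt(2)); // { todos: [ 'write test', 'make it pass' ] }
console.log(stateAt(3)); // { todos: [] }
```

Because nothing lives outside the state object, replaying the same actions always lands on the same state, which is exactly the reproducibility a test runner needs. With hidden mutable state scattered around an OOP app there is no such log to replay, and that’s the gap I’m talking about.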

I’d like to point out what Tonic Dev ( https://tonicdev.com ) does to quickly run any Node JS code you run in the browser–read this blog post by them: http://blog.tonicdev.com/2015/09/10/time-traveling-in-node.js-notebooks.html. Basically, they use this somewhat new (or at least recently evolved) tool: http://criu.org/Main_Page to snapshot the entire NodeJS runtime, as well as its associated file system, at a lower level, similar to how VM containers are made. They can then roll back the entire application state flawlessly.

I’ve been looking into such things because I refuse to believe that the state of Velocity or the build times of Gagarin will hold us back from the true benefits of TDD: basically immediate response and observability of your code. In short, with the current limitations, the only benefit we are getting from tests is protection from regressions. While that’s important, I think the upgrade in development flow from true immediate TDD is in a world of its own. Check out Wallaby JS right now if you haven’t:

http://wallabyjs.com

Here’s the video tour:

As you can see, you basically have what Chris Granger’s Light Table was only effectively doing with ClojureScript (their glorified JS support never reached much more than a basic JS REPL last time I checked). So Wallaby is doing similar stuff, where it shows the results of your tests and inline errors within your tests and within the tested code AS YOU TYPE. We can only dream of such things without first getting our build time in order, or rather, finding workarounds where only the changed code is patched in while application state is synced back to a previous snapshot.

Being able to code this way would get the full power out of tests. It would lead to a lot more tests, because more often people would start with them, rather than end with them just to catch regressions. In short, without such things TDD isn’t even a productive workflow for Meteor development today. It’s extra work that clients often don’t pay you to do, whose benefits aren’t immediately apparent.

Where my train of thought goes next is whether CRIU could snapshot the browser runtime (and replace it), not just Node JS on the server. Because even if we were able to implement CRIU for server-side testing, the client app still needs to be set up and torn down just as quickly.

Now, that said, because of React, having to test your code on the client is becoming less and less important. The following project https://github.com/bruderstein/unexpected-react allows you to use JSDOM to render React on the server and test it. In addition, you don’t even need JSDOM if you want to test React components individually. You can use React’s built-in testing addon. The problem with that, though, is that it only shallowly tests the output of your React render methods, i.e. their precise return value, not the output of nested child components. You need a DOM to do that. What that means is if you change how you compose your components, but what’s written to the DOM is still the same, the test based on React’s testing utilities will fail, whereas the DOM-based tests won’t. So that’s where JSDOM essentially puts ReactTestUtils to shame. …So anyway, perhaps we don’t need to snapshot the client if we don’t in fact need it.

Now all that said, CRIU is an extreme example. Bringing it up serves to show that solving these problems is possible if we make it a priority and allocate the time to build a robust solution. We may not in fact need CRIU, but rather just need to continue down the path that Velocity took. I’m not super informed about how Velocity patches code (and state). I quickly looked into their code to see how they were building their mirrors, but I think if the information could be shared with the rest of us, the community could devise a better solution.

@apendua @Sanjo can you guys tell us more about “injecting code at build level” and how we can improve that?