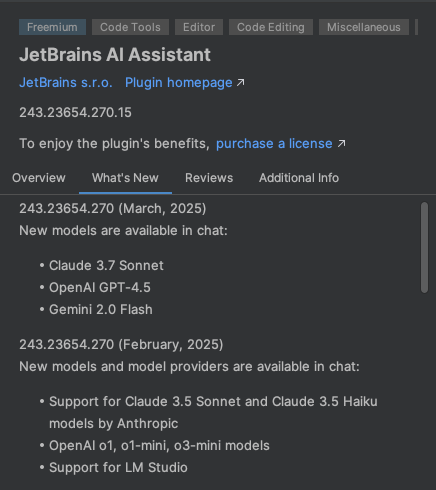

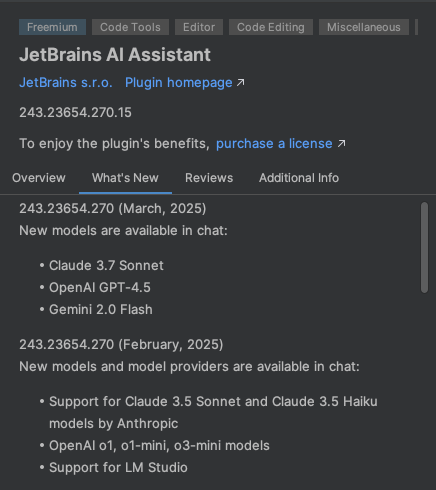

The latest version of AI Assistant in WebStorm has some of the most recent AI models:

Did a 5-hour marathon with Claude 3.7 in Windsurf, doing mostly basic things. Somewhere after 3 or 4 hours it started to lose… what I can only describe as focus. Despite specific rules and everything, it started falling back on the defaults it had used before I described those rules. It also started to become… lazy, not really going through the project and making the changes everywhere it should. Paired with my previous experience, it looks to me like LLMs are good for quick, straightforward tasks, preferably ones they can set up with all the hyped libraries that have a lot of context they can draw on.

Also, with long-running projects like Meteor, you have to be careful about which version it is building for.

Do you have control over the context Claude gets? When you work with an LLM in the same chat session for some time and try different approaches, it becomes difficult for the AI to keep track of what was good and what was bad. It may help to reset the context and start over with the current state of the project, but have the AI forget how it got there. It also helps if you can control which files the AI actually gets to look at. As the project grows, it's better to focus the AI on the files that are directly related to the file you want it to edit and not worry about the rest.
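A minimal sketch of the "start fresh, but only with the related files" idea, written in Python. It assumes a JavaScript/TypeScript project held in memory as a `path -> source` dict and follows only relative ES `import` statements, one level deep. All names (`focused_context`, `build_fresh_prompt`, etc.) are hypothetical and not part of Windsurf, continue.dev or any other tool.

```python
from __future__ import annotations

import posixpath
import re

# Simplified matcher for relative ES-module imports such as
#   import { add } from './math';    or    import './styles.css';
# Bare package imports ('react'), require() and dynamic import() are ignored.
IMPORT_RE = re.compile(r"""import\s+(?:[^'";]*?\s+from\s+)?['"](\.{1,2}/[^'"]+)['"]""")

# Suffixes tried when an import omits the file extension (an assumption; adjust per project).
CANDIDATE_SUFFIXES = ("", ".ts", ".tsx", ".js", ".jsx", "/index.ts", "/index.js")


def resolve(importer: str, spec: str, files: dict[str, str]) -> str | None:
    """Map a relative import specifier to a path that exists in `files`, or None."""
    base = posixpath.normpath(posixpath.join(posixpath.dirname(importer), spec))
    for suffix in CANDIDATE_SUFFIXES:
        if base + suffix in files:
            return base + suffix
    return None


def focused_context(target: str, files: dict[str, str]) -> list[str]:
    """The target file plus the local files it imports directly (one level deep)."""
    selected = [target]
    for spec in IMPORT_RE.findall(files[target]):
        path = resolve(target, spec, files)
        if path is not None and path not in selected:
            selected.append(path)
    return selected


def build_fresh_prompt(task: str, target: str, files: dict[str, str]) -> str:
    """Start a new session from the project's current state: no earlier chat
    history, only the task and the contents of the focused files."""
    parts = [f"Task: {task}", ""]
    for path in focused_context(target, files):
        parts.append(f"--- {path} ---")
        parts.append(files[path])
    return "\n".join(parts)
```

Pasting the result of `build_fresh_prompt(...)` into a new chat is one way to "reset" while keeping the current code. Stopping at direct imports is a deliberate trade-off to keep the context small; unrelated files never reach the model.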

I am using continue.dev

It's a VS Code plugin that offers a chat with commands that supply its context, so you can keep it focused. You can also tell it to use your entire workspace, but I only ever do that when I run into crashes where I have no clue where to even start looking. You can also choose which LLMs to use for different tasks (you bring your own API keys and can even use local models, e.g. with Ollama). I am using it with Sonnet 3.7 (with a 16k-token thinking budget) for the chat, Codestral for autocompletion and voyage-code-3 for vector search (in your codebase and in the documentation you supply: you can give it URLs to GitHub pages or other documentation pages, and it will crawl through them, index the content and make it available to the AI).
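To make "index the content and make it available to the AI" a bit more concrete, here is a toy retrieval sketch in Python. It deliberately swaps the real embedding model (voyage-code-3 in the setup described above) for a simple bag-of-words count vector so it runs without any API. continue.dev's actual indexing is certainly more involved, and every name here is hypothetical.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts (bag of words)."""
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyIndex:
    """Embed text chunks (code or docs) once, then return the closest ones per query."""

    def __init__(self) -> None:
        self.chunks: list[tuple[str, Counter]] = []

    def add(self, chunk: str) -> None:
        self.chunks.append((chunk, embed(chunk)))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self.chunks, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]
```

The retrieved chunks are what would get added to the prompt. The point is that only the few most similar pieces of code or documentation are sent along, not the whole crawl.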

It can still get kind of stuck if it has painted itself (or rather, you have both painted yourselves) into a corner and the approach you chose earlier just does not work for the changes you want it to make.

Overall, I prefer to work with the AI as a pair programmer and guide it through the work. The major challenge is keeping up with what you are doing. It just takes so much longer to review the changes than it takes the AI to make them, and as long as things seem to work fine, it's just too easy to rely on it and lose track of what's actually going on.

And sometimes it's easier to just write some code yourself than to try explaining what you want to achieve.

Overall, working with AI coding assistants is in some respects a lot like working with a human colleague. If you want them to do exactly what you want, you've got to invest some time guiding them through it and doing some code reviews.

In Windsurf there are memories for remembering important decisions. I guess I will have to be more explicit about saving things to memory.

My feeling right now is that LLMs are at the level of a beginner programmer who needs constant hand-holding.

AI is often really great (and really fast) at doing well-defined tasks but generally lacks an overall vision (or at least your overall vision). If you figure out what it's good at and use it accordingly, it can really help. It's the same with human colleagues. And it's really fast and cheap compared to human colleagues. And it doesn't get frustrated if you tell it to make changes AGAIN. And quite frankly, I prefer it that way. I want an assistant to do the tedious stuff for me, not a senior programmer who replaces me (that would probably change, though, if we had universal basic income).