I just wanted to add another, maybe obvious to some, observation:

One trap you can fall into is having multiple subscribers observing & updating the same data in the database, maybe updating the state every few seconds (think of a multiplayer game, for example).

Now if every client is subscribed to the data of all other clients & updates its own data every second, for example, things escalate quickly (ask me how I know! :D)

That’s because the number of messages per update interval (let’s say 1 second, just for the lulz) is numClients * numClients: every client’s update has to be delivered to every subscribed client, so the load grows quadratically.

10 players -> 100 messages / second; 20 players -> 400; 50 -> 2500; 75 -> 5625 …
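To make the arithmetic easy to replay, here is a tiny sketch of that count. The function name `naiveMessagesPerInterval` is made up for illustration, and it simply assumes the numClients * numClients model from above (every update goes to every client).

```javascript
// Messages per update interval when every client is subscribed to every client.
// Assumption: one update per client per interval, delivered to all numClients subscribers.
function naiveMessagesPerInterval(numClients) {
  return numClients * numClients;
}

for (const n of [10, 20, 50, 75]) {
  console.log(`${n} players -> ${naiveMessagesPerInterval(n)} messages per interval`);
}
```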

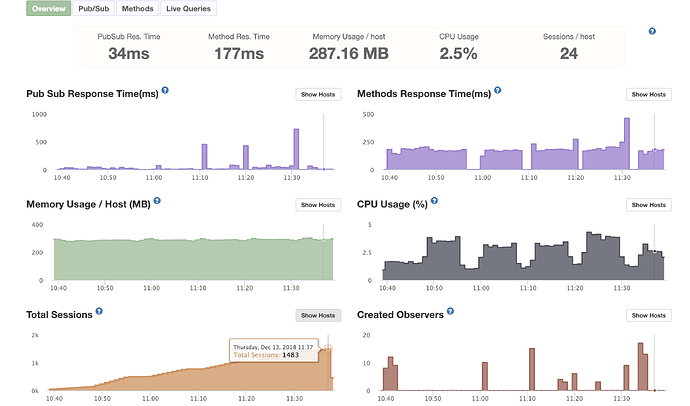

Somewhere between 50 and 70 clients was when our app broke / couldn’t keep up in production with the “naive” implementation.

So this is an example of every app / case being different & having to be considered on its own.

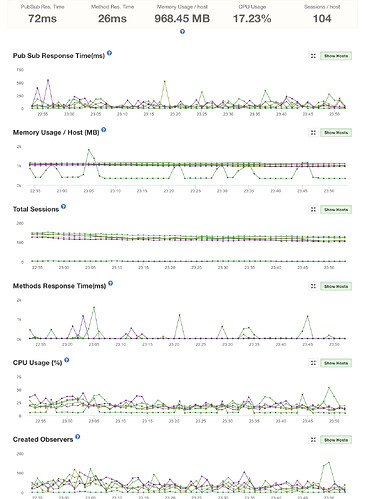

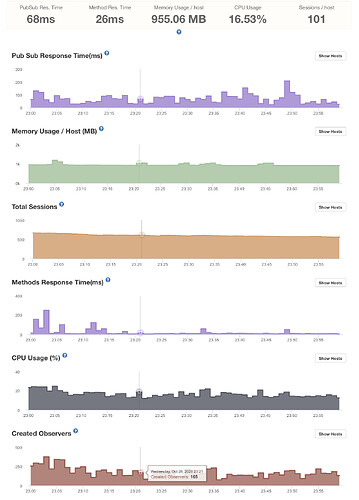

After centralizing & accumulating the data & publishing it only once every few seconds, the number of calls is linear again: numClients update calls coming in per interval, plus numClients updates sent out to the clients per interval.
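Roughly, the centralizing idea can be sketched like this in plain JavaScript. This is not our production code: `StateAccumulator`, `update`, `flush` and `centralizedMessagesPerInterval` are hypothetical names, and the sketch assumes it is enough to keep only the latest state per client.

```javascript
// Hypothetical in-memory accumulator; all names here are illustrative only.
class StateAccumulator {
  constructor() {
    this.latest = new Map(); // clientId -> most recent state from that client
    this.dirty = false;      // true if anything changed since the last flush
  }

  // Called by each client: numClients incoming calls per interval.
  update(clientId, state) {
    this.latest.set(clientId, state);
    this.dirty = true;
  }

  // Called by a timer: returns one combined snapshot, or null if nothing changed.
  flush() {
    if (!this.dirty) return null;
    this.dirty = false;
    return Object.fromEntries(this.latest);
  }
}

// Incoming update calls plus one outgoing snapshot per client.
function centralizedMessagesPerInterval(numClients) {
  return numClients + numClients;
}

const acc = new StateAccumulator();
acc.update('a', { x: 1 });
acc.update('b', { x: 2 });
acc.update('a', { x: 3 }); // overwrites the earlier state for 'a'
const snapshot = acc.flush();
console.log(snapshot, centralizedMessagesPerInterval(75));
```

In a real app something like `setInterval` would call `flush()` every few seconds and write the snapshot to the one place all clients are subscribed to, skipping the write when `flush()` returns null.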

So maybe have a look at whether and how the data of each user interacts with the data of the others. Make sure to only .find() and publish the required fields ({fields: {…}}) so the publication isn’t triggered by unnecessary updates, & make sure there isn’t a lot of “crosstalk” between clients at regular intervals (use the reactivity only if something actually happened, for example).
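To illustrate why limiting the published fields helps, here is a plain JavaScript sketch of the idea. It is not how the framework implements it internally; `touchesPublishedFields` and the field names are made up for the example.

```javascript
// Returns true if a change touches at least one field the subscriber asked for.
// Assumption: both arguments are arrays of top-level field names.
function touchesPublishedFields(changedFields, publishedFields) {
  const wanted = new Set(publishedFields);
  return changedFields.some((field) => wanted.has(field));
}

const published = ['position', 'score']; // hypothetical field names

// An update to a field nobody subscribed to does not need to go out to clients.
const bookkeepingOnly = touchesPublishedFields(['lastSeenAt'], published);
// An update that includes a published field does.
const scoreChanged = touchesPublishedFields(['score', 'lastSeenAt'], published);
console.log(bookkeepingOnly, scoreChanged);
```

The fewer fields a publication includes, the more updates fall into the first category and never reach the clients.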

Also, 1000+ users at the same time is already a fair number of users, depending on the use case. Normally the daily users aren’t all there at the same time, so even with e.g. 10000+ visitors you might only have a hundred on the page at once, although that might differ from case to case too, of course.

On the positive side, you might already be very successful with your project + could invest in a little horizontal scaling too!

Best wishes & have fun everyone

(PS: I’d also welcome any performance / memory improvements of course, and also think a kind of testing tool would be great!)