not sure if it fits the purpose though

The only “fame” that one would need is in the pretty new version of the AWS library. Normally we, people working on big projects, read the code before it goes in the project.

This is the package with more “fame”, and I think it has been recently updated after a long time. The concept is the same: sign on the server, send from the client.

https://github.com/Lepozepo/s3-uploader

I didn’t mean it as an offence, and I have started to look at your code. I am weighing the possibilities. But as you mention “we, people working on big projects”, I think it’s kind of normal to check the age of the library, number of users, stability, maintenance, activity in the issues, etc. Any library has to start one day, I agree, but I wouldn’t build a project with only new libraries either.

Hey @paulishca

Finally, on a new project I started using your library. It’s doing a great job, thanks a lot. I left a few questions / remarks in the issues on GitLab. I am also OK to post a simple example implementation if you think it is relevant (or in an example folder?).

Are you considering publishing the package?

Hi Ivo, the library is great, I use it intensively. I’ll have a look in the repo to see your comments. As I remember, I have some customizations in it and some things left unfinished, and this is the main reason why I use it as a private NPM package while the code is public. Will take a look at it.

May I ask how you manage security on the AWS S3 side? The permissions side is always a mystery to me.

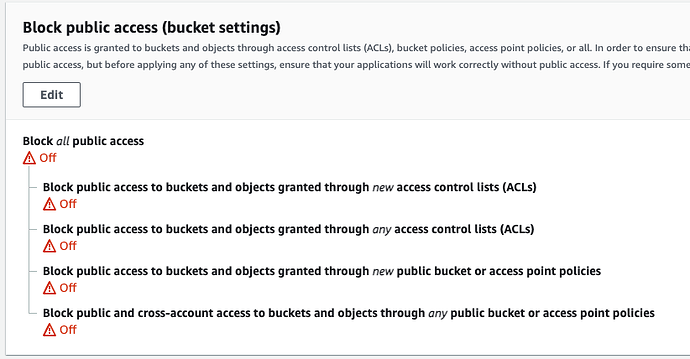

I am really not comfortable with this. I don’t know if we should have “Block public access (bucket settings)” turned on. If it’s on, I cannot upload anymore, but with it off I’m getting a security warning from AWS. What I’d like:

- to allow upload from users

- to have the media publicly readable

It works now, but I feel like nothing is secured. I have the following settings in permissions tab:

Block all public access : off

Bucket policy

    {
      "Version": "2008-10-17",
      "Statement": [
        {
          "Sid": "AllowPublicRead",
          "Effect": "Allow",
          "Principal": {
            "AWS": "*"
          },
          "Action": "s3:GetObject",
          "Resource": "arn:aws:s3:::my-bucket/*"
        }
      ]
    }

CORS:

    [
      {
        "AllowedHeaders": ["*"],
        "AllowedMethods": ["POST", "GET", "PUT", "HEAD"],
        "AllowedOrigins": ["*"],
        "ExposeHeaders": []
      }
    ]

The ACL is the default, with the bucket owner having list and write on objects, and read and write on the bucket ACL.

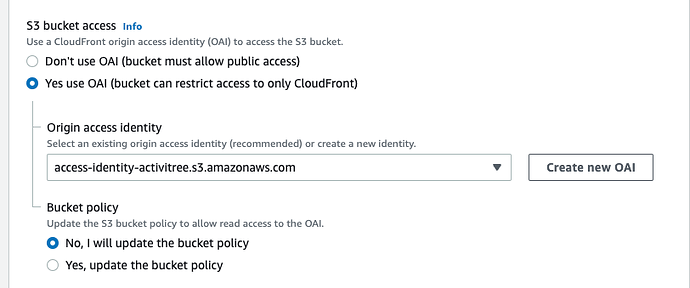

@ivo if you have private documents, you will probably need to use something like ostrio:files. If you just need a CDN, possibly with signed URLs, you drop files in S3 and get them via CloudFront.

When you link your CloudFront to S3, you have an option (in Cloudfront) to only allow access to files via CloudFront. When you check that, the Bucket Policy gets updated and all links to S3 files become useless.

CORS:

    [
      {
        "AllowedHeaders": [
          "Authorization",
          "Content-Type",
          "x-requested-with",
          "Origin",
          "Content-Length",
          "Access-Control-Allow-Origin"
        ],
        "AllowedMethods": ["PUT", "HEAD", "POST", "GET"],
        "AllowedOrigins": [
          "https://www.someurl.com",
          "https://apps.someurl.com",
          "http://192.168.1.72:3000",
          "http://192.168.1.72:3004"
        ],
        "ExposeHeaders": [],
        "MaxAgeSeconds": 3000
      }
    ]
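To make the effect of `AllowedOrigins` and `AllowedMethods` a bit more concrete, here is a minimal sketch of how a rule like the one above is matched against a request. `isCorsAllowed` and `originPattern` are hypothetical helpers written for illustration, not part of any AWS SDK, and the model is simplified: it only looks at the origin and the method and ignores header matching.

```javascript
// Simplified model of matching a request against one CORS rule.
// The rule mirrors the AllowedMethods / AllowedOrigins shown above.
const corsRule = {
  AllowedMethods: ["PUT", "HEAD", "POST", "GET"],
  AllowedOrigins: [
    "https://www.someurl.com",
    "https://apps.someurl.com",
    "http://192.168.1.72:3000",
    "http://192.168.1.72:3004",
  ],
};

// Turn an AllowedOrigins entry (which may contain "*") into a RegExp.
function originPattern(allowedOrigin) {
  const escaped = allowedOrigin
    .split("*")
    .map((part) => part.replace(/[.*+?^${}()|[\]\\]/g, "\\$&"))
    .join(".*");
  return new RegExp(`^${escaped}$`);
}

// True when both the Origin header and the HTTP method match the rule.
function isCorsAllowed(rule, origin, method) {
  const originOk = rule.AllowedOrigins.some((o) => originPattern(o).test(origin));
  const methodOk = rule.AllowedMethods.includes(method.toUpperCase());
  return originOk && methodOk;
}

console.log(isCorsAllowed(corsRule, "https://www.someurl.com", "PUT"));
console.log(isCorsAllowed(corsRule, "http://localhost:3000", "PUT"));
console.log(isCorsAllowed({ ...corsRule, AllowedOrigins: ["*"] }, "http://localhost:3000", "GET"));
```

With the rule above, a browser upload from an origin that is not listed (for instance `http://localhost:3000`) would be refused, while a rule with `"*"` accepts any origin.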

Bucket Policy:

    {
      "Version": "2008-10-17",
      "Id": "PolicyForCloudFrontPrivateContent",
      "Statement": [
        // Next one, by allowing Cloudfront, disallows everything else
        {
          "Sid": "1",
          "Effect": "Allow",
          "Principal": {
            "AWS": "arn:aws:iam::cloudfront:user/CloudFront Origin Access Identity xxxxxxxxxxxxxx"
          },
          "Action": "s3:GetObject",
          "Resource": "arn:aws:s3:::your_bucket/*"
        },
        {
          "Sid": "S3PolicyStmt-DO-NOT-MODIFY-1559725794648",
          "Effect": "Allow",
          "Principal": {
            "Service": "s3.amazonaws.com"
          },
          "Action": "s3:PutObject",
          "Resource": "arn:aws:s3:::your_bucket/*",
          "Condition": {
            "StringEquals": {
              "s3:x-amz-acl": "bucket-owner-full-control",
              "aws:SourceAccount": "xxxxxxxxx"
            },
            "ArnLike": {
              "aws:SourceArn": "arn:aws:s3:::your_bucket"
            }
          }
        },
        {
          "Sid": "Allow put requests from my local station",
          "Effect": "Allow",
          "Principal": "*",
          "Action": "s3:PutObject",
          "Resource": "arn:aws:s3:::your_bucket/*",
          "Condition": {
            "StringLike": {
              "aws:Referer": "http://192.168.1.72:3000/*"
            }
          }
        }
      ]
    }
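The first statement is the part CloudFront writes for you. As a sketch of its shape only, here is a small function that produces the same structure from a bucket name and an Origin Access Identity ID. `cloudFrontReadPolicy` is a hypothetical helper and `EXAMPLEOAIID` is a placeholder; in practice you would let CloudFront generate this statement and only add your own statements next to it.

```javascript
// Hypothetical helper: builds a bucket policy object that lets a
// CloudFront Origin Access Identity read every object in the bucket.
function cloudFrontReadPolicy(bucketName, oaiId) {
  return {
    Version: "2008-10-17",
    Id: "PolicyForCloudFrontPrivateContent",
    Statement: [
      {
        Sid: "1",
        Effect: "Allow",
        Principal: {
          AWS: `arn:aws:iam::cloudfront:user/CloudFront Origin Access Identity ${oaiId}`,
        },
        Action: "s3:GetObject",
        Resource: `arn:aws:s3:::${bucketName}/*`,
      },
    ],
  };
}

// Placeholder values; replace with your own bucket name and OAI ID.
const policy = cloudFrontReadPolicy("your_bucket", "EXAMPLEOAIID");
console.log(JSON.stringify(policy, null, 2));
```

The printed JSON is strict JSON (no comments), so it can be pasted into the bucket policy editor as a starting point.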

Thanks a lot for this feedback.

I didn’t connect CloudFront. I manage the cache directly from S3. What’s the added value of adding CloudFront other than caching? Distribution of files?

Do you have a tutorial somewhere for connecting CloudFront to S3, or is it pretty straightforward?

Edit: and do you have to change how your files are accessed if you add CloudFront? I am getting the uploadUrl from the S3 upload; will I need another URL?

CloudFront is a lot faster in all selected regions, and at high volume it is much cheaper. Also make sure you save progressive images instead of baseline ones where possible. They tend to “come forward” rather than load from top to bottom.

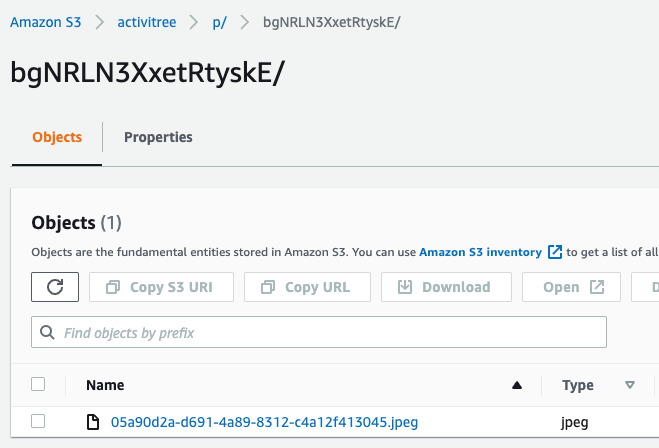

If you have a lot of files (tens of thousands) it is good to organize them in the bucket for faster accessibility. AWS has to search for them too. Let’s say, don’t do bucket/images/xxx.jpg and dump everything in there. It is better to use for instance the user’s id to create folders.

Check this example. You have the bucket name, ‘p’ for posts, the userId, and within that folder all images from posts made by that user.
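That layout can be sketched as a small key-building helper. `buildObjectKey` is a hypothetical function, and the `type` / `userId` / `fileId` / `ext` parameter names are assumptions made for the example; the only thing taken from the thread is the `p/<userId>/<file>` structure.

```javascript
// Hypothetical helper: builds an object key of the form
// <type>/<userId>/<fileId>.<ext>, e.g. "p" for posts.
function buildObjectKey({ type, userId, fileId, ext }) {
  // Reject missing segments and segments that would add extra "folders".
  for (const [name, value] of Object.entries({ type, userId, fileId, ext })) {
    if (typeof value !== "string" || value === "" || value.includes("/")) {
      throw new Error(`Invalid ${name}: ${value}`);
    }
  }
  return `${type}/${userId}/${fileId}.${ext}`;
}

console.log(
  buildObjectKey({
    type: "p",
    userId: "bgNRLN3XxetRtyskE",
    fileId: "05a90d2a-d691-4a89-8312-c4a12f413045",
    ext: "jpeg",
  })
);
// prints "p/bgNRLN3XxetRtyskE/05a90d2a-d691-4a89-8312-c4a12f413045.jpeg"
```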

You will have a URL for your CloudFront: https://xxxxx.something…com. You associate this URL with something like files.yourapp.com or assets.yourapp.com, and this becomes the CloudFront root for your S3 bucket.

S3: https://your_bucket.s3.eu-central-1.amazonaws.com/p/bgNRLN3XxetRtyskE/05a90d2a-d691-4a89-8312-c4a12f413045.jpeg

CDN: https://assets.yourapp.com/p/bgNRLN3XxetRtyskE/05a90d2a-d691-4a89-8312-c4a12f413045.jpeg

If you create that root as a global variable you can then access it all over the app like

url={`${IMAGES}/p/bgNRLN3XxetRtyskE/05a90d2a-d691-4a89-8312-c4a12f413045.jpeg`} or

url={`${IMAGES}/p/${userId}/${imageUrl}.jpeg`}
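If you already store the S3 upload URL, you do not necessarily need to re-upload or re-key anything: the object key stays the same and only the host changes. A minimal sketch, assuming virtual-hosted-style S3 URLs (bucket name in the host, as in the example above); `toCdnUrl` is a hypothetical helper and `IMAGES` stands for the global CDN root mentioned above.

```javascript
// Assumed CDN root; in the app this would be the global IMAGES variable.
const IMAGES = "https://assets.yourapp.com";

// Keeps the object key (the URL path) and swaps the S3 host for the CDN root.
// Assumes a virtual-hosted-style S3 URL, where the path is exactly the key.
function toCdnUrl(s3Url, cdnRoot = IMAGES) {
  const { pathname } = new URL(s3Url);
  return `${cdnRoot.replace(/\/+$/, "")}${pathname}`;
}

const uploadUrl =
  "https://your_bucket.s3.eu-central-1.amazonaws.com/p/bgNRLN3XxetRtyskE/05a90d2a-d691-4a89-8312-c4a12f413045.jpeg";

console.log(toCdnUrl(uploadUrl));
// prints "https://assets.yourapp.com/p/bgNRLN3XxetRtyskE/05a90d2a-d691-4a89-8312-c4a12f413045.jpeg"
```

Storing only the key (the part after the host) and prefixing it with `IMAGES` at render time gives the same result and avoids the conversion altogether.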

Thank you so much.

I’ll try to see how to set this up then.

Last question: just for S3, was my CORS config OK then?

They are “open” to everything. Other websites may link your images with no issues.

You need to make sure you send back to the user an ‘Access-Control-Allow-Origin’ header to prevent others from linking to your assets.

This header is necessary also if you fetch the image, for instance you need that image on a canvas for editing. When you fetch … you will get a cross origin error because the response is missing the header.

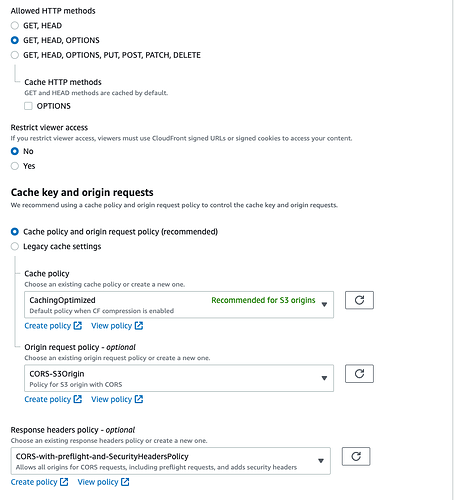

So, you specify the headers in S3 and set Cloudfront to pass the headers from/of the origin (the origin in Cloudfront is your S3).

I guess one more stupid question won’t hurt: what should go in place of the ‘xxxxxx’ in both cases? The access key ID? My problem with AWS is that it’s so big I don’t know where to find a simple example of a working setup… Yours seems perfect; it would be nice to document it.

Let this be created by your CloudFront. Start with a blank policy and let CloudFront write its policy there (images above). Then you can add the rest to the policy.

I got this policy created by CloudFront (but you have a second statement, plus your local one, which I don’t need; dunno how you got it created):

    {
      "Version": "2008-10-17",
      "Statement": [
        {
          "Sid": "1",
          "Effect": "Allow",
          "Principal": {
            "AWS": "arn:aws:iam::cloudfront:user/CloudFront Origin Access Identity xxxxx"
          },
          "Action": "s3:GetObject",
          "Resource": "arn:aws:s3:::xxxxx/*"
        }
      ]
    }

and updated the CORS as you mentioned, with the relevant allowed origin for my prod environment.

Some remarks:

- I can still access the images from localhost or directly using the CloudFront link. You mentioned access should be limited to only the website I specify in the CORS policy.

- However, I can’t upload anymore to the website from localhost, only from the prod environment, so that’s one extra layer of security (which is not the most practical, but we’ll manage with a different bucket for development anyway).