Absolutely. @ramez, did you get a tutorial up anywhere?

Has this tutorial ever been published? I am trying to get mup-redis running alongside mup-aws-beanstalk, but to no avail. It seems they are not compatible with each other. Maybe I should find a way to set this up manually?

I think they are conceptually incompatible.

As I understand it, you would only deploy Meteor to EBS (Elastic Beanstalk) if you want autoscaling, but you cannot autoscale Redis that way. I don't think you can even include MongoDB with the mup-aws-beanstalk plugin, because you don’t want to start an empty copy of MongoDB every time a new machine starts up, and then delete your Mongo data when the farm scales down. The same goes for Redis.

You would most likely run your Redis server(s) in the same VPC and connect to them from the EC2 instances in your EBS environment.

The initiator of this conversation seems to have explained it on GitHub: "Will it work with multiple instances of redis?" (Issue #16, ramezrafla/redis-oplog).

Thanks for your response. I did not intend to scale Redis; my understanding is that Redis is performant by design. So I figured that if I ran Redis on a server separate from the EBS cluster, it should be doable.

But it seems mup-redis doesn’t support this. If I try to set things up this way, I get a message that mup-redis doesn't support multiple servers, even though I only configured one for it. It seems to look at the EBS config as well.

I think mup-redis deploys a Meteor server with Redis running in the same container. But you don’t need another Meteor server; your Meteor server(s) will be in EBS. I feel what you need is just a manual install of a Redis server in the same VPC, maybe something like the "AWS Marketplace: Redis" listing, picking up any extra configuration from the mup-redis repo.

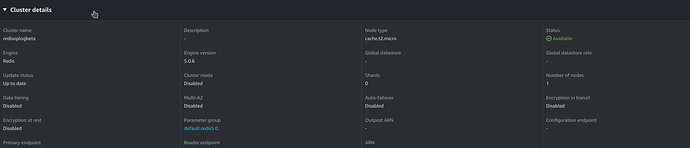

What we have done is set up a single ElastiCache instance, which is fully compatible with redis-oplog, and connect our EBS deployments directly to it. On a micro instance it has handled 1k simultaneous users without any issues. You could always scale it vertically if needed, but since it does so little work, you get really far on a micro instance.

That’s what I tried to do. But how would I tell mup-redis to use this existing Redis instance? It says it won’t work with multiple servers. OK, maybe I could configure redis-oplog directly.

Do you know of any documentation or tutorials on how to hook things up? I was hoping I could still use mup instead of rolling everything on my own.

I am not aware of one, but setting up an ElastiCache Redis instance is pretty easy. I use mup-aws-beanstalk to set up my application environment, and set up ElastiCache separately for each environment, pointing my app at that address. So I have a prod-cache and a staging-cache instance.

I have this section in my Meteor.settings:

```json
{
  "redisOplog": {
    "redis": {
      "port": 6379,
      "host": "xxxxxxxx.cache.amazonaws.com"
    }
  }
}
```
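With one ElastiCache instance per environment (like the prod-cache and staging-cache setup described above), the only thing that changes between environments is the `host` in that settings section. A minimal TypeScript sketch of generating the section per environment; `redisOplogSettingsFor` and both endpoint hostnames are hypothetical placeholders, not real endpoints and not part of redis-oplog:

```typescript
// Shape of the redisOplog section of Meteor.settings shown above.
interface RedisOplogSettings {
  redisOplog: {
    redis: {
      port: number;
      host: string;
    };
  };
}

// Hypothetical endpoints: replace with the ElastiCache endpoints of your
// own prod-cache and staging-cache instances.
const CACHE_HOSTS: Partial<Record<string, string>> = {
  production: "prod-cache.xxxxxx.cache.amazonaws.com",
  staging: "staging-cache.xxxxxx.cache.amazonaws.com",
};

// Build the settings section for one environment (6379 is Redis's default port).
function redisOplogSettingsFor(env: string, port = 6379): RedisOplogSettings {
  const host = CACHE_HOSTS[env];
  if (host === undefined) {
    throw new Error(`No ElastiCache endpoint configured for "${env}"`);
  }
  return { redisOplog: { redis: { port, host } } };
}

// Print the JSON for one environment, e.g. to save as that environment's settings file.
console.log(JSON.stringify(redisOplogSettingsFor("staging"), null, 2));
```

Keeping a separate settings file per environment means the app code never hardcodes which cache it talks to; each deployment just gets the settings for its own environment.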

OK, thanks, I will try this approach.

BTW, I read in another thread here that Meteor scales much better vertically (using a more powerful EC2 instance) than horizontally (with EBS), even when connected to Redis. I would be curious about your experience in this respect.

I was hoping to keep costs down a bit by using EBS, and I also need “zero downtime”.

The issue with scaling vertically is that while a stronger CPU is nice, most of the extra capacity of a bigger instance comes from additional CPU cores, which Meteor/Node cannot easily take advantage of, since a single Node process runs your JavaScript on one thread. There are clustering/multi-CPU packages out there, but none that I am sure is compatible with this EBS setup. So I find it simpler to pick a container size that fits my memory footprint and scale out horizontally from there. This also gives you better reliability, as you have multiple instances to load balance across.

There are packages out there like nathanschwarz/meteor-cluster on GitHub (a worker pool for Meteor using Node.js's native `cluster` module), though, so that might be worth looking into with bigger instances.
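The horizontal sizing approach above (pick an instance size that fits the app's memory footprint, then add instances, keeping more than one for reliability) comes down to simple arithmetic. A sketch in TypeScript; `instancesNeeded`, the per-instance capacity, and the floor of two instances are assumptions for illustration, and the capacity figure should come from load testing your own app:

```typescript
// Hypothetical sizing helper, not part of Meteor or mup-aws-beanstalk.
// usersPerInstance is something you measure yourself by load testing one
// instance of the chosen size; the numbers below are made up.
function instancesNeeded(
  concurrentUsers: number,
  usersPerInstance: number,
  minInstances = 2,
): number {
  if (usersPerInstance <= 0) {
    throw new Error("usersPerInstance must be positive");
  }
  // Enough instances to carry the load...
  const forLoad = Math.ceil(concurrentUsers / usersPerInstance);
  // ...but never fewer than the redundancy floor, so the load balancer still
  // has a healthy target if one instance fails or is being replaced.
  return Math.max(forLoad, minInstances);
}

console.log(instancesNeeded(1000, 300)); // 4
console.log(instancesNeeded(100, 300)); // 2 (redundancy floor)
```

The floor is what buys the reliability mentioned above: even at low traffic you pay for a second small instance instead of relying on one large one.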

Zero downtime is also working nicely for us. We have a small React component that uses `Reload._onMigrate` to notify the user of a new client version, which they can choose to refresh to. Otherwise they are not interrupted.
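The core of that "notify, then reload on request" pattern can be separated from React and tested on its own. `Reload._onMigrate` is an internal, underscore-prefixed Meteor API, so the contract assumed here (the callback receives a `retry` function and returns `[false]` to hold the reload or `[true]` to let it proceed) comes from the `reload` package's source rather than official docs and may change. `createUpdateGate` and `onUpdateAvailable` are hypothetical names; this is a sketch, not the component described above:

```typescript
// Assumed contract of Meteor's internal Reload._onMigrate callback:
// it receives a retry function and returns [ready, optionalData].
type MigrationResult = [boolean, unknown?];
type MigrationCallback = (retry: () => void) => MigrationResult;

// Hypothetical helper: hold a hot code push until the user opts in.
function createUpdateGate(onUpdateAvailable: () => void) {
  let userAccepted = false;
  let pendingRetry: (() => void) | null = null;

  const onMigrate: MigrationCallback = (retry) => {
    if (userAccepted) {
      return [true]; // user agreed: let the reload go ahead
    }
    pendingRetry = retry; // keep the latest retry so we can resume later
    onUpdateAvailable(); // e.g. set React state to show a "new version" banner
    return [false]; // not ready: the current client keeps running
  };

  // Call this from the banner's "Refresh" button.
  const acceptUpdate = (): void => {
    userAccepted = true;
    const retry = pendingRetry;
    pendingRetry = null;
    if (retry) retry(); // ask Reload to try again; this gate now answers [true]
  };

  return { onMigrate, acceptUpdate };
}

// Inside a Meteor client (not runnable outside Meteor), registration would
// look roughly like:
//   import { Reload } from "meteor/reload";
//   const gate = createUpdateGate(() => setShowBanner(true));
//   Reload._onMigrate(gate.onMigrate);
```

Until the user clicks refresh, the old client keeps running against the new servers, which is what makes the deploy feel like zero downtime from the user's side.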