“Meteor CI” - Getting Started with Meteor Continuous Deployment

At first glance this looks incredibly useful, thank you very much for taking the time to do it.

Something I have been looking for as well. Thanks for this tutorial!

Thanks guys!

I am very eager to get feedback from people who actually go through the tutorial from beginning to end.

Who knows. Maybe I’ve raised the bar on what a tutorial should be?

If you do get some good benefit out of it, I’d be very grateful if you’d promote it in your social media channels. It started out as a fairly modest thing but grew into a time-eating monster as I tried to make it complete, coherent and consistent. So, I’d like to reach the largest audience possible to make it worth the time I put into it.

This is simply superb, Martin. A+ Will definitely be updating Clinical Meteor, the Meteor Cookbook, and StarryNight to point to this. And will definitely promote along social media and business channels.

A few comments:

- fantastic choice to start with the Joel Test

- great focus on Continuous Deployment as a theme to pull together all the different testing pieces

- diagrams and flow charts are much appreciated

- really appreciate that you covered the missing piece of production logging

- jsdoc and linting pipelines are fantastic

- super excited to see video tutorials on using Nightwatch

The one thing I have mixed thoughts about is the docker images, and building Continuous Deployment using Infrastructure-as-a-Service versus Platform-as-a-Service. That is, the tutorial is written for IaaS such as AWS, Digital Ocean, Rackspace, etc. However, a lot of people want to deploy to Galaxy, Modulus, Heroku, etc., so all the docker image stuff isn’t applicable to them. It would be really great to have an IaaS version of the tutorial and a PaaS version someday.

Also, the TinyTest portion is probably the one area that’s not future-proof, and will get replaced by Mocha, Gagarin, Chimp, etc. So you might want to think about how to add additional unit/integration test runners. Shouldn’t be too hard, I don’t think.

Dear Abigail,

I really value your input, as always! Thanks, so much.

It does concern me that many pieces of the tutorial are already close to obsolete. The number of them seemed to grow even as I was doing the thing. E.g., the whole “synchronous wrapper” question dies with 1.3, apparently.


The docker image has no other purpose than a way to isolate a deterministic environment for the tutorial. It does not actually have anything to do with deployment. I don’t have the resources to make sure the tutorial will work in Red Hat or SUSE, etc., let alone Apple or Microsoft. So the docker image is for containing a Xubuntu machine long enough to do the tutorial and keep little “dehydrated” versions of it available for future tinkering.

The end result of the tutorial is a “meteor deploy” command run from CircleCI, which is what you want, I think.

My target audience is all the younger developers with a brilliant idea who end up crashin’n’ burnin’ from “maintenance drag” from losing control over documentation, linting, logging and end-to-end testing. When your desk is a plank on a couple of cardboard boxes, wracking your brain over how to integrate all that, instead of perfecting your killer app, is a hard choice not often made. My idea was to offer a pre-built solution showing how each of the elements fit together, in a simple enough structure that individual bits can be swapped out for different ones as desired, in the context of something already known to work.

I’d love to know that I saved a few “skunk works” from stumbling over their own swarf.

Sincere regards,

Martin

This tutorial is awesome! Something you don’t usually get for free.

I look forward to implementing this. I started looking at the contents and watched the first video, which did confuse me a little.

It seems like you have an Ubuntu virtual machine running a Docker image of Xubuntu.

I thought Docker was basically a virtual machine, so why do you have a virtual machine inside a virtual machine?

Also, I thought Docker simply acted as the server. So if that’s the case, why do we need a graphical image instead of just Ubuntu server edition?

Merlin,

Actually, no. Docker is not a virtual machine. It sets up read-only layers on your real operating system and virtualizes anything your container could try to write to.

So the xubuntu-x2go-phusion Docker image allows you to create one or more containers holding virtualized Xubuntu environments within your OS, that will use everything they can from the base OS, but will install, virtually, anything special they need. As long as Docker behaves as it should, its containers cannot wreak any harm on the base machine. You then need the X2Go Client on your base machine in order to get a GUI to work with.

Xubuntu is simply a minimalist version of Ubuntu Desktop.

The tutorial has very many moving parts. I did not want to spend my life resolving installation version conflicts on other people’s machines, so I decided to create a Docker image with a fully prepared environment ready to go. That way, I can quickly find the cause if something misbehaves, and avoid putting other people’s machines at risk.

You’ll be one of the first to use the tutorial so please be aggressive in alerting me of anything that should be improved, clarified or simplified.

Thanks very very much for getting in touch.

Martin

I didn’t dig into this too deep, but what’s the difference between unit and functional tests? I mean, aren’t tests run in the same pass regardless of the type (integration, unit, functional, etc.)?

Good stuff though, I’ll dig in deeper later.

Unit tests are technology-specific, whereas functional tests are browser-specific and work at the business level.

For instance, a ‘helloworld’ functional test (aka acceptance test, validation test, end-to-end test, etc.) could run successfully on a hello world page generated by Spark, Blaze, React, .NET ASP, Tomcat, Apache, etc.

Unit tests rely on the underlying language and library, however. Unit tests for React won’t work for Spark, or ASP, Tomcat, etc.

So functional tests are useful for black-box testing, and walking an app across different technology implementations (ex. I have one app that I’ve walked through maybe 6 languages now). Unit tests are for white-box testing, and looking at the innards of an app.

Yet another way to describe it: crash test dummies are functional testing, simulating a user’s experience, whereas an engine diagnostics computer is unit testing, looking at the engine internals.
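To make the white-box/black-box split concrete, here is a toy sketch in shell, the only language used elsewhere in this thread. All function names are hypothetical and have nothing to do with Meteor or the tutorial: the unit test calls an internal helper directly, while the functional test only checks what a user would see from the app’s entry point, so it would keep passing even if `format_greeting` were rewritten or removed.

```shell
#!/bin/sh
# Toy example only: every name here is hypothetical.

# Internal helper: an implementation detail of the "app".
format_greeting() {
  printf 'Hello, %s!' "$1"
}

# Public entry point: the only thing a user ever runs.
hello_app() {
  format_greeting "${1:-world}"
  printf '\n'
}

# Unit test (white-box): exercises the helper directly.
unit_test_format_greeting() {
  [ "$(format_greeting Ada)" = "Hello, Ada!" ]
}

# Functional test (black-box): checks only the app's user-visible output.
# Command substitution strips the trailing newline before comparing.
functional_test_hello_app() {
  [ "$(hello_app)" = "Hello, world!" ]
}

unit_test_format_greeting && echo "unit test passed"
functional_test_hello_app && echo "functional test passed"
```

In a Meteor app the unit test would be something like TinyTest or Mocha poking at a package’s functions, and the functional test a browser-driving tool such as Nightwatch; the division of labour is the same.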

I look at it this way.

Unit tests are far too fine-grained to interest your end users. Contrariwise, your end users are very concerned that they get the functionality they depend on.

So your developers use unit tests to prove to themselves that they are building up functionality on solid foundations, and (in part) to document how each unit should behave. You want those tests in your CI script as redundant sources of error messages should functional tests croak.

Finally, your functional tests prove to your end users that their needs are understood and under development or complete.

@robertlowe, did you mean why unit tests and functional tests are treated separately (i.e. why they are separate stages in the process / different blocks in the diagrams), rather than what the definition of unit/functional tests is?

Yeah, treated separately: why are they at different levels in the state diagram? Testing could be a single pass. I’m unsure why it’s two-step, or what the reasoning is for that.

Package publication and build pipelining, primarily. In a larger shop, there might even be a third or fourth testing round that includes device testing or load/stress testing.

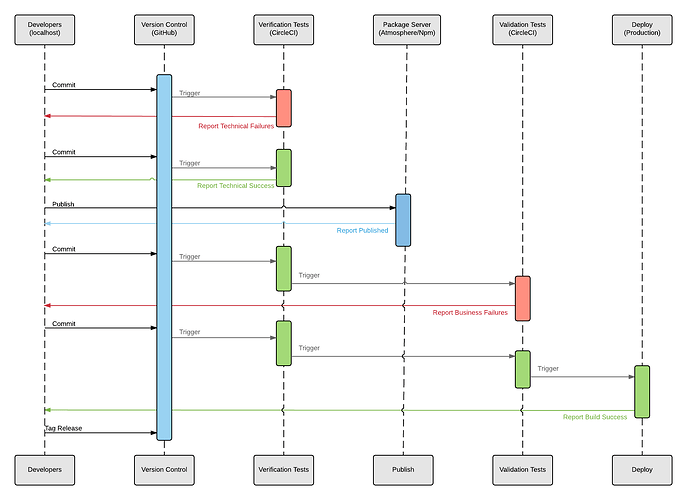

Martin’s network state diagram is so good that I went and made a version of it for release-track authors, or anybody who’s building package-only microservice apps that share packages. (‘Verification’ and ‘Validation’ are just government-speak for unit testing and functional testing, btw.)

See how the package publication process is between unit testing and functional testing? If all your packages are within a single app and there’s no publishing/sharing, then you can bypass that step, and it’s effectively done in a single pass like you describe.
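Here is a rough shell sketch of that ordering. The stage functions and the `PUBLISH_PACKAGES` switch are hypothetical placeholders of my own, not taken from either diagram or the tutorial: each stage only runs if the previous one succeeded, and the publication stage sits between the two test rounds only when packages are shared.

```shell
#!/bin/sh
# Sketch only: each stage is a placeholder standing in for a real command.
run_unit_tests()       { echo "unit tests"; }        # verification
publish_packages()     { echo "publish packages"; }  # shared packages only
run_functional_tests() { echo "functional tests"; }  # validation
deploy()               { echo "deploy"; }            # e.g. the tutorial's final "meteor deploy"

# Stops at the first failing stage and returns non-zero.
# PUBLISH_PACKAGES=1 inserts the publication stage between the test rounds.
run_pipeline() {
  run_unit_tests || return 1
  if [ "${PUBLISH_PACKAGES:-0}" = "1" ]; then
    publish_packages || return 1
  fi
  run_functional_tests || return 1
  deploy
}

run_pipeline                        # single-app case: no publication stage
( PUBLISH_PACKAGES=1 run_pipeline ) # package-sharing case, in a subshell
```

With `PUBLISH_PACKAGES` unset you get the single-pass flow Robert describes; in a bigger shop, extra rounds such as device or load testing would just be more stages in the same chain.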

Sorry Robert I misunderstood the point of your question.

With any kind of diagram you have to have some sort of strategy about granularity. You can show every imaginable detail, but often you end up communicating less.

My strategy in the diagram was entirely mercenary: highlight the steps that the tutorial covers and pretend the others don’t exist.

The great quest of DevOps is to grow from manual build and test to continuous integration, from there to continuous delivery and finally to the ‘holy grail’ of continuous deployment. OK, so that last step implies such huge confidence in testing procedures that no human eyes verify anything before deployment, which is most likely an asymptote to what really happens. That sequence diagram does not show code review boards, or test review boards or many of the other things that have to happen in serious projects.

Nevertheless, I hope I have provided a “getting started” kit that has everything you need for continuous deployment, even though it may only ever continuously deploy to a pre-production server.

Yeah Abigail,

I once worked on an IV & V team contracted for a NASA-commissioned remote-sensing instrument. Our entire Independent Verification and Validation task was to try to prove that the prime contractor’s work was no good. (I found they were trying to do a C-language divide during a non-maskable interrupt.)


Then our CEO gets up at a conference and says, "… and you can be confident that our 4 & 5 team is doing a great job checking everything works right."

Can you explain why you instantiate the docker image the way you do?

What is HOST_NUM for? Why not just have the --name and --hostname be the same?

Why is the output sent to a file, where the name is from CNT_PID?

```shell
docker run -d \
  --name ${CONTAINER} \
  --hostname=${HOST_NAME} \
  -e SSH_KEYS="$(cat ~/.ssh/id_rsa.pub)" \
  -e USER_PWRD=${USER_PWRD} \
  -e SSH_USER="$(whoami)" \
  "${IMAGE}" > "${CNT_PID}";
```

Hi Merlin,

What is HOST_NUM for?

You can use HOST_NUM to distinguish between different containers instantiated from the same image.
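A minimal sketch of the HOST_NUM idea, assuming a naming scheme of my own invention (the `container_names` function and the `xubuntu_ctr_` / `xubuntu-host-` prefixes are hypothetical, not taken from the tutorial’s scripts): one number yields a container name and a hostname that differ from each other and from those of any sibling container started from the same image.

```shell
#!/bin/sh
# Hypothetical helper: derive distinct names from a HOST_NUM.
# The prefixes are illustrative, not the tutorial's actual values.
container_names() {
  case "$1" in
    ''|*[!0-9]*)
      echo "HOST_NUM must be a non-negative integer, got '$1'" >&2
      return 1 ;;
  esac
  CONTAINER="xubuntu_ctr_$1"   # value for docker run --name
  HOST_NAME="xubuntu-host-$1"  # value for docker run --hostname (letters, digits, hyphens)
}

HOST_NUM=2
container_names "$HOST_NUM" && echo "$CONTAINER / $HOST_NAME"
# prints: xubuntu_ctr_2 / xubuntu-host-2
```

The hostname avoids underscores because hostnames conventionally use only letters, digits and hyphens, while Docker container names accept underscores.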

Why not just have the --name and --hostname be the same?

After having been tripped up multiple times, I’ve come to fear other people’s code (and tutorials!) where different things are named the same. It’s become a habit of mine to just never do that. In code with strong typing it is less important, but in scripting, ensuring every unique thing has a unique name is an important defensive programming habit.

Why is the output sent to a file, where the name is from CNT_PID?

If I recall correctly, I tried to catch the PID in a variable and gave up. It’s either too complicated or impossible to do it. Stuffing it in a file also means you have it there for future use.
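For what it’s worth: with `-d`, `docker run` prints the new container’s full ID (not a process ID) on stdout, so that is what lands in the `${CNT_PID}` file. Ordinary command substitution can capture it in a variable as well. A minimal sketch, reusing the variables from Merlin’s snippet and assuming they are already set; `start_container` and `CONTAINER_ID` are hypothetical names.

```shell
#!/bin/sh
# Hypothetical wrapper around the docker run command quoted above.
# Assumes CONTAINER, HOST_NAME, USER_PWRD, IMAGE and CNT_PID are already set.
start_container() {
  # With -d, docker run prints the new container's ID on stdout.
  # The assignment's exit status is docker's, so a failed run returns 1.
  CONTAINER_ID="$(docker run -d \
    --name "${CONTAINER}" \
    --hostname="${HOST_NAME}" \
    -e SSH_KEYS="$(cat ~/.ssh/id_rsa.pub)" \
    -e USER_PWRD="${USER_PWRD}" \
    -e SSH_USER="$(whoami)" \
    "${IMAGE}")" || return 1
  # Keep a copy on disk as well, as the original script does.
  printf '%s\n' "${CONTAINER_ID}" > "${CNT_PID}"
}
```

If the host PID of the container’s main process is what you actually want, `docker inspect --format '{{.State.Pid}}' "$CONTAINER_ID"` should report it. Docker can also write the ID file itself with `docker run --cidfile "${CNT_PID}" ...`, though it refuses to run if that file already exists.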

Hoping this helps,

Martin