I look forward to implementing this. I started looking at the contents and watched the first video, which did confuse me a little.

It seems like you have an Ubuntu virtual machine running a Docker image of Xubuntu.

I thought Docker was basically a virtual machine, so why do you have a virtual machine inside a virtual machine?

Also, I thought Docker simply acted as the server. So if that’s the case, why do we need a graphical image instead of just Ubuntu server edition?

Merlin,

Actually, no. Docker is not a virtual machine. It sets up read-only layers on your real operating system and virtualizes anything your container could try to write to.

So the xubuntu-x2go-phusion Docker image allows you to create one or more containers holding virtualized Xubuntu environments within your OS. They will use everything they can from the base OS, but will install, virtually, anything special they need. As long as Docker behaves as it should, its containers cannot wreak any harm on the base machine. You then need the X2Go Client on your base machine in order to get a GUI to work with.

Xubuntu is simply a minimalist version of Ubuntu Desktop.

The tutorial has very many moving parts. I did not want to spend my life resolving installation version conflicts on other people’s machines, so I decided to create a Docker image with a fully prepared environment ready to go. That way, I can quickly find the cause if something misbehaves, and avoid putting other people’s machines at risk.

You’ll be one of the first to use the tutorial so please be aggressive in alerting me of anything that should be improved, clarified or simplified.

Thanks very very much for getting in touch.

Martin

I didn’t dig into this too deep, but what’s the difference between unit and functional tests? I mean, aren’t tests run in the same pass regardless of the type (integration, unit, functional, etc)?

Good stuff though, I’ll dig in deeper later.

Unit tests are technology specific, whereas functional tests are browser specific and work at the business level.

For instance, a ‘helloworld’ functional test (aka acceptance test, validation test, end-to-end test, etc) could run successfully on a hello world page generated by Spark, Blaze, React, .Net ASP, Tomcat, Apache, etc.

Unit tests rely on the underlying language and library, however. Unit tests for React won’t work for Spark, or ASP, Tomcat, etc.

So functional tests are useful for black-box testing, and walking an app across different technology implementations (e.g. I have one app that I’ve walked through maybe 6 languages now). Unit tests are for white-box testing, and looking at the innards of an app.

Yet another way to describe it… crash test dummies are functional testing, simulating a user’s experience; whereas an engine diagnostics computer is unit testing, looking at the engine internals.
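To make the distinction concrete, here is a minimal sketch in shell. The `greet` function and both test helpers are invented for illustration and are not taken from the tutorial. The unit test reaches into the code; the functional test only looks at the page a user would see.

```shell
# Hypothetical unit under test: an internal function of one implementation.
greet() {
  printf 'Hello, %s!' "$1"
}

# Unit test (white box): calls the function directly, so it only works
# against code written in this language with this function name.
unit_test_greet() {
  [ "$(greet World)" = "Hello, World!" ]
}

# Functional test (black box): reads the rendered page on stdin and checks
# what the user would see. It does not care which stack produced the HTML.
functional_test_page() {
  grep -q 'Hello, World!'
}
```

Assuming an app is serving on some local URL, the functional check could be fed with something like `curl -s "${APP_URL}" | functional_test_page`, where `APP_URL` is a placeholder. The same check would keep working if the app were rewritten in another framework, while `unit_test_greet` would not.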

I look at it this way.

Unit tests are far too fine grained to interest your end users. Contrariwise, they are very concerned that they get the functionality they depend on.

So your developers use unit tests to prove to themselves that they are building up functionality on solid foundations, and (in part) to document how each unit should behave. You want those tests in your CI script as redundant sources of error messages should functional tests croak.

Finally, your functional tests prove to your end users that their needs are understood and under development or complete.

@robertlowe did you mean why are unit tests and functional tests treated separately (i.e. why are they separate stages in the process / different blocks in the diagrams) rather than what’s the definition of unit/functional tests?

Yea, treated separately — why in the state diagram are they at different levels. Testing could be a single pass. I’m unsure why it’s two-step, or what the reasoning is for that.

Package publication and build pipelining, primarily. In a larger shop, there might even be a third or fourth testing round that includes device testing or load/stress testing.

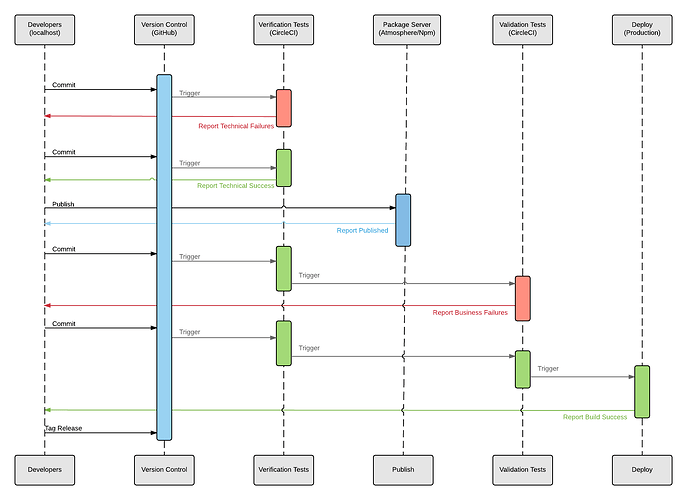

Martin’s network state diagram is so good that I went and made a version of it for release-track authors, or anybody who’s building package-only microservice apps that share packages. (‘Verification’ and ‘Validation’ are just government-speak for unit testing and functional testing, btw.)

See how the package publication process is between unit testing and functional testing? If all your packages are within a single app and there’s no publishing/sharing, then you can bypass that step, and it’s effectively done in a single pass like you describe.
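A rough sketch of that ordering in shell, with publication sitting between the two test rounds. The stage names are placeholders I made up; a real project would call its own test runner and publishing tooling inside them.

```shell
# Run the named stages in order and stop at the first one that fails.
# Each argument is the name of a shell function or command.
run_pipeline() {
  local stage
  for stage in "$@"; do
    echo "stage: ${stage}"
    if ! "${stage}"; then
      echo "failed: ${stage}" >&2
      return 1
    fi
  done
}

# Placeholder stages (hypothetical): replace the bodies with real commands.
unit_tests()       { true; }
publish_packages() { true; }
functional_tests() { true; }
```

With shared packages you would call `run_pipeline unit_tests publish_packages functional_tests`. If everything lives in one app and nothing is published, `run_pipeline unit_tests functional_tests` is effectively the single pass.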

@robertlowe

Sorry Robert I misunderstood the point of your question.

With any kind of diagram you have to have some sort of strategy about granularity. You can show every imaginable detail, but often you end up communicating less.

My strategy in the diagram was entirely mercenary: highlight the steps that the tutorial covers and pretend the others don’t exist.

The great quest of DevOps is to grow from manual build and test to continuous integration, from there to continuous delivery and finally to the ‘holy grail’ of continuous deployment. OK, so that last step implies such huge confidence in testing procedures that no human eyes verify anything before deployment, which is most likely an asymptote to what really happens. That sequence diagram does not show code review boards, or test review boards, or many of the other things that have to happen in serious projects.

Nevertheless, I hope I have provided a “getting started” kit that has everything you need for continuous deployment, even though it may only ever continuously deploy to a pre-production server.

Yeah Abigail,

I once worked on an IV & V team contracted for a NASA-commissioned remote-sensing instrument. Our entire Independent Verification and Validation task was to try to prove that the prime contractor’s work was no good. (I found they were trying to do a C language divide during a non-maskable interrupt.)

Then our CEO gets up at a conference and says “… and you can be confident that our 4 & 5 team is doing a great job checking everything works right”.

Can you explain why you instantiate the docker image the way you do?

What is HOST_NUM for? Why not just have the --name and --hostname be the same?

Why is the output sent to a file, where the name is from CNT_PID?

    docker run -d \
        --name ${CONTAINER} \
        --hostname=${HOST_NAME} \
        -e SSH_KEYS="$(cat ~/.ssh/id_rsa.pub)" \
        -e USER_PWRD=${USER_PWRD} \
        -e SSH_USER="$(whoami)" \
        "${IMAGE}" > "${CNT_PID}";

@merlinpatt

Hi Merlin,

What is HOST_NUM for?

You can use HOST_NUM to distinguish between different containers instantiated from the same image.

Why not just have the --name and --hostname be the same?

After having been tripped up multiple times, I’ve come to fear other people’s code ( and tutorials! ), where different things are named the same. It’s become a habit of mine to just never do that. In code with strong typing it is less important, but in scripting, ensuring every unique thing has a unique name is an important defensive programming habit.
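As an illustration only, here is one way a single instance number could yield a distinct container name and hostname. The `names_for` helper and both prefixes are invented for this sketch; the tutorial's scripts may build the names differently.

```shell
# Derive a distinct container name and hostname from one instance number.
# The "ci_container_" and "ci-host-" prefixes are made up for this example.
names_for() {
  local host_num="$1"
  echo "CONTAINER=ci_container_${host_num}"
  echo "HOST_NAME=ci-host-${host_num}"
}
```

Running `eval "$(names_for 02)"` would then set `CONTAINER` and `HOST_NAME` to two different values that still share the same number, so a second container from the same image can simply use `03`.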

Why is the output sent to a file, where the name is from CNT_PID?

If I recall correctly, I tried to catch the PID in a variable and gave up. It’s either too complicated or impossible to do it. Stuffing it in a file also means you have it there for future use.
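For what it's worth, what `docker run -d` prints on stdout is the new container's ID rather than a process ID, and command substitution can usually capture it. A minimal sketch, assuming a reasonably recent Docker CLI; the `start_container` helper is hypothetical and leaves out the `--name`, `--hostname` and `-e` options from the command above:

```shell
# Start a detached container, print its ID, and also save the ID to a file.
# --cidfile makes docker write the container ID to the given path.
start_container() {
  local image="$1"
  local cid_file="$2"
  # docker reports an error if the cidfile already exists, so clear it first.
  rm -f "${cid_file}"
  docker run -d --cidfile "${cid_file}" "${image}"
}
```

Usage would look like `CID="$(start_container "${IMAGE}" "${CNT_PID}")"`, after which either `docker stop "${CID}"` or `docker stop "$(cat "${CNT_PID}")"` refers to the same container. Keeping the file is still handy when a later script needs the ID.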

Hoping this helps,

Martin

Amazing, really good tutorial!!

Do you know if something changed with new versions? Could I integrate this with my current CI on Gitlab using pipelines?

I’ll try to do it later on with 1.4.2 and Digital Ocean

Thank you for your very kind words!

I’m sorry to say that large chunks of it are obsolete. The switch from Meteor 1.2 to 1.3 was just too great and happened when I was 98% done, having spent waaay too much time on it.

There’s plenty of useful fragments, but as a whole it needs a complete overhaul to get it to work again.

Meanwhile, I’m working on HabitatForMeteor and Meteor Mantra Kickstarter which will form the underlying projects of the next version of Meteor CI Tutorial, if I can ever get back to it.

I’d offer them to you as an alternative, but they are both in an indeterminate state at the moment.

At least until someone helps me get past this aggravation: How to debug failing apk --> host connection?

wow, nice job behind that. I’d be really glad to help but unfortunately my devops skills are useless : (

What you’re doing with HabitatForMeteor is similar to Mup, right? I tried it, but as with other tutorials related to Meteor deployment, I get stuck at some point.

What I’ve achieved so far is to create some jobs using the Gitlab pipelines that run some tests, clean up my project, create a Docker image and push it to Docker Hub. I need the next step: on my host (Ubuntu on DigitalOcean), either download the new code or just pull the Docker image from Docker Hub, and then restart the project.

This should happen when I push to master. I didn’t like Mup because I needed to push and deploy separately. That’s OK for CI but not for CD (more info here: https://about.gitlab.com/2016/08/05/continuous-integration-delivery-and-deployment-with-gitlab/). I’d like the whole thing to be deployed automatically after a push.
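The missing step could be sketched roughly like this: a small script that a pipeline job runs on the host after the image has been pushed. The `redeploy` name, the container name and the `80:3000` port mapping are all assumptions for the example, not something from the tutorial.

```shell
# Pull the freshly pushed image and replace the running container with it.
# Arguments: image reference, container name (both placeholders).
redeploy() {
  local image="$1"
  local name="$2"
  docker pull "${image}" || return 1
  # On a first deploy there is nothing to stop or remove, so ignore failures.
  docker stop "${name}" 2>/dev/null || true
  docker rm "${name}" 2>/dev/null || true
  # Port mapping is an assumption: host port 80 to an app listening on 3000.
  docker run -d --name "${name}" --restart unless-stopped -p 80:3000 "${image}"
}
```

A job restricted to the master branch could run something like `redeploy "${IMAGE}" my_app` on the server over SSH as its last stage, so a push ends in a deploy with no separate manual step. This stops the old container before starting the new one, so expect a short gap in service.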

It’s a shame, because Meteor is a pretty nice framework, but this process, the most important one, makes Meteor look like an ugly and complicated tech.

Yeah, I don’t know if Mup can be seen as a DevOps tool; I see it more as a stop gap measure between the plush space of Galaxy and doing it all by hand.

With Habitat For Meteor, I’m trying to create something that really can serve in a DevOps shop, but it’s a lot more work than I expected, and I’m not happy with it. In particular, I haven’t built it into CI the way you ( and I ) would like.

Well, I’m guessing Galaxy is too important an income center for MDG to want to hand out the tools to easily avoid it. I support that; I’d hate to see them run out of money.

The problem with Galaxy is the pricing. It is probably the best option to deploy a Meteor app, but definitely not the cheapest one.

What makes Galaxy “the best”? Benefits of Heroku include:

- automatic deploy on push to git repo

- pipelines for automatic testing and one-click promotion to production

- rollback to previous deploy with the click of a button

- two-factor authentication

- good docs

- hobby apps for just $7 monthly

You can do all of that using Gitlab CI

Sure, but does using Gitlab CI make Galaxy more competitive? You could use Gitlab CI with Heroku too. Or you could use Codeship, or you can roll your own CI, but I think the way these features are integrated in Heroku makes it more attractive than Galaxy.
