Which OS are you using, CentOS or Ubuntu? Which kernel?

Ubuntu

Kernel info: Linux Draft 3.13.0-24-generic #46-Ubuntu SMP Thu Apr 10 19:11:08 UTC 2014 x86_64 x86_64 x86_64 GNU/Linux

Ubuntu 14.04?

The CPU is too damn weak. You're running at a 5-minute average CPU load of 94%. If you want, I can host you on my cloud and we can see what happens. I have an almost empty server, and since I'm writing a tutorial on this, I'm interested in troubleshooting real cases.

Anyhow, there are two possibilities here:

- There is some bad logic causing the CPU spikes. Find out where; most probably, making it asynchronous would help.
- It's a memory starvation issue. CPU usually spikes when there's no memory left, because the garbage collector runs like crazy.

The funny thing? It might not be your fault at all: someone else's VPS on the same host could be starving the IO.

Try running htop and iotop during the spikes so you can see which is bigger, the IO queue or the CPU queue. Don't rely on memory usage alone, as it can't tell the full story.

If it's the CPU queue, it's your code's fault. If it's the IO queue, it's the server's fault.
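To complement htop and iotop, here is a minimal TypeScript sketch (not from the thread; the function names are illustrative) that compares two samples of the aggregate `cpu` line in Linux's `/proc/stat`. It splits the elapsed CPU time into running code (busy), waiting on IO (`iowait`) and time the hypervisor handed to other guests (`steal`). The column order used here (user, nice, system, idle, iowait, irq, softirq, steal, guest, guest_nice) is the kernel's; treat the interpretation as a rule of thumb, not a precise diagnosis.

```typescript
// Cumulative clock ticks from the aggregate "cpu" line of /proc/stat.
interface CpuTimes {
  user: number;
  nice: number;
  system: number;
  idle: number;
  iowait: number;
  irq: number;
  softirq: number;
  steal: number;
}

// Fractions of the elapsed CPU time between two samples (they sum to 1).
interface CpuShares {
  busy: number;   // user + nice + system + irq + softirq: running code
  iowait: number; // CPU idle while I/O requests were outstanding
  steal: number;  // time the hypervisor gave this vCPU's slot to other guests
  idle: number;
}

// Parse a line such as "cpu  4705 150 1120 16250 520 0 30 12 0 0".
function parseCpuLine(line: string): CpuTimes {
  const parts = line.trim().split(/\s+/);
  if (parts[0] !== "cpu") {
    throw new Error("expected the aggregate 'cpu' line from /proc/stat");
  }
  const ticks = parts.slice(1).map(Number);
  // Very old kernels omit some trailing columns; treat missing ones as 0.
  const at = (i: number): number => (i < ticks.length ? ticks[i] : 0);
  // guest/guest_nice (columns 9 and 10) are already included in user/nice,
  // so they are deliberately not read.
  return {
    user: at(0), nice: at(1), system: at(2), idle: at(3),
    iowait: at(4), irq: at(5), softirq: at(6), steal: at(7),
  };
}

function cpuShares(before: CpuTimes, after: CpuTimes): CpuShares {
  const delta = (k: keyof CpuTimes): number => after[k] - before[k];
  const busy =
    delta("user") + delta("nice") + delta("system") + delta("irq") + delta("softirq");
  const iowait = delta("iowait");
  const steal = delta("steal");
  const idle = delta("idle");
  const total = busy + iowait + steal + idle;
  if (total <= 0) {
    throw new Error("take the two samples at different times");
  }
  return {
    busy: busy / total,
    iowait: iowait / total,
    steal: steal / total,
    idle: idle / total,
  };
}
```

Take the two samples a few seconds apart during a spike (for example with `cat /proc/stat`). A large busy share points at the CPU queue (your code), a large iowait share at the IO queue, and a large steal share at neighbours on the same host competing for the physical CPU. `top` reports the same quantities as `wa` and `st` on its `Cpu(s)` line.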

Yeah, Ubuntu 14.04 x64.

I have no idea what logic in the app causes the spikes, and I haven't managed to work it out with Kadira. I'm trying to use unblock on method calls, and even on publications via the meteorhacks:unblock package.

The server load gets heavy when a lot of people are visiting the site.

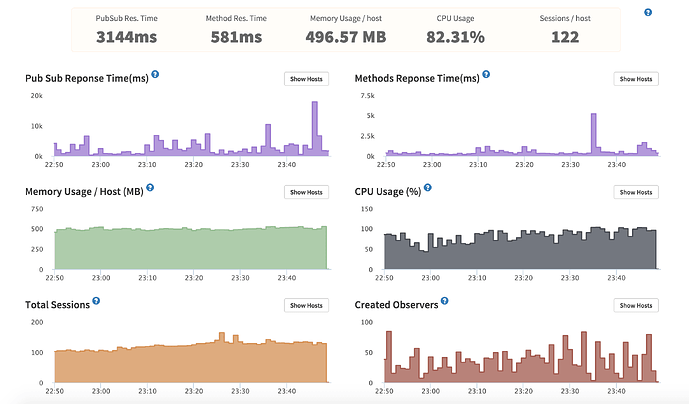

This is the bad current situation:

Changing servers looks like a viable option. 20 seconds of latency for a pub/sub is TOO much. Something funny is going on.

The massive latency only happens when my CPU hits 100%.

Wouldn’t just deploying more instances solve all the problems?

Unfortunately, that's not guaranteed. If MongoDB is making you wait, or there's some poorly written code, even 100 instances will still lag.

It's under heavy load at the moment. It's getting 300 signups a day, and certain times of day are obviously a lot busier than others. The week before, it was averaging 180 a day.

Are you running the SEO package with PhantomJS? I've heard of it bogging down servers to the point of crashing when Google starts indexing.

I'm using spiderable and PhantomJS.

The server load is definitely coming from heavy traffic that I'm just not able to handle. The 100% CPU happens when the most users are online. It's late now for my users, so not many are online and the server is fine for the moment.

Are you using clustering for multi-core support?

It's hard to tell without seeing your publications and methods code. Maybe you are doing batch inserts, running a blocking operation, and so on.
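One common way to keep a long-running loop from blocking the whole server process (the "make it asynchronous" advice earlier in the thread) is to process the work in fixed-size batches and hand control back to the event loop between batches. The sketch below is a generic Node-style technique, not a Meteor API; `processInBatches` and its parameters are hypothetical names, and the scheduler is injectable so it can be swapped out.

```typescript
// Runs `next` on a later turn of the event loop.
type Scheduler = (next: () => void) => void;

const deferToEventLoop: Scheduler = (next) => {
  setTimeout(next, 0);
};

// Calls `handle` on every item, `batchSize` items at a time, yielding to the
// event loop between batches so other requests can be served meanwhile.
function processInBatches<T>(
  items: readonly T[],
  batchSize: number,
  handle: (item: T) => void,
  onDone: () => void,
  schedule: Scheduler = deferToEventLoop,
): void {
  if (batchSize < 1 || Math.floor(batchSize) !== batchSize) {
    throw new Error("batchSize must be a positive integer");
  }
  let index = 0;
  const runBatch = (): void => {
    const end = Math.min(index + batchSize, items.length);
    for (; index < end; index++) {
      handle(items[index]);
    }
    if (index < items.length) {
      schedule(runBatch); // yield before starting the next batch
    } else {
      onDone();
    }
  };
  runBatch();
}
```

Batching trades a little total throughput for responsiveness: no single request can monopolise the CPU for longer than one batch takes. Inside a Meteor server, passing a scheduler built on `Meteor.setTimeout` is probably the safer choice, since it runs the callback inside Meteor's environment; verify that against your Meteor version.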

Thanks for all the comments so far.

Here's what I've done, and the server seems to be coping with the stress much better now. I'll know in a few hours whether it really worked.

I upgraded the server to a 2 GB RAM, 2-core DigitalOcean instance. By itself that did nothing for performance, since a single Meteor process won't make use of the second core.

What I did next was:

1 - Added MongoDB indexes for all publications. I had almost no indexes before this (apart from the default _id indexes).

2 - Improved the publications code. I use the publish-composite package, and I was calling this.ready in it, which I don't think is supposed to be used with that package (although things were working before, so maybe I'm wrong).

3 - This is the big one: I refactored the code so that it could make use of multiple cores. There are certain tasks that happen a lot on the site: it's a draft fantasy football game, and a lot of timeouts are set. With the way the initial code was set up, this didn't work well on two instances. After the refactor, I used the meteorhacks:cluster package and ran the timeout tasks on only one of the instances. Traffic is still routed to both instances, though.

4 - I also replaced the mizzao:user-status package with another package from Atmosphere, since user-status apparently doesn't work with multiple instances yet. (I'm not sure how the mizzao package is so popular if it doesn't support multiple instances. Maybe I misunderstood something, or it's only used in small apps.)
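For item 1, a useful rule when adding compound indexes for publication queries is MongoDB's Equality, Sort, Range (ESR) guideline: fields matched exactly come first, then the cursor's sort fields, then fields filtered with range operators such as `$gt`/`$lt`. The helper below is a hypothetical sketch that builds an index key spec in that order; the field names in the usage note are made up for illustration.

```typescript
type Direction = 1 | -1;

// Describes one publication's query shape (hypothetical structure).
interface PublicationQuery {
  equality: string[];               // fields matched exactly, e.g. { leagueId: id }
  sort: Array<[string, Direction]>; // the cursor's sort, in order
  range: string[];                  // fields filtered with $gt / $lt and similar
}

// Builds a compound index key spec ordered Equality, then Sort, then Range.
// A field already placed by an earlier group is not repeated.
function esrIndexSpec(q: PublicationQuery): Record<string, Direction> {
  const spec: Record<string, Direction> = {};
  for (const field of q.equality) {
    spec[field] = 1;
  }
  for (const [field, dir] of q.sort) {
    if (!(field in spec)) spec[field] = dir;
  }
  for (const field of q.range) {
    if (!(field in spec)) spec[field] = 1;
  }
  return spec;
}
```

For a publication that finds picks by `leagueId`, sorts by `pickedAt` descending and filters on `round` (all hypothetical), this yields `{ leagueId: 1, pickedAt: -1, round: 1 }`. On the server you would then create that index on the collection; which call is available (for example `rawCollection().createIndex(spec)`) depends on your Meteor and driver versions, so check before relying on it.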
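For item 3, one simple way to run the timeout tasks on only one instance while every instance keeps serving traffic is an environment-variable switch set on exactly one process. This is a sketch of that idea, not meteorhacks:cluster's API: `RUN_DRAFT_TIMERS`, `ownsTimers` and `scheduleOwnedTimeout` are hypothetical names, and the timer function is passed in so the logic stays testable.

```typescript
type Env = Record<string, string | undefined>;
type SetTimer = (task: () => void, delayMs: number) => unknown;

// True only on the instance started with RUN_DRAFT_TIMERS=1 (or "true").
// RUN_DRAFT_TIMERS is a hypothetical variable name.
function ownsTimers(env: Env): boolean {
  const flag = (env["RUN_DRAFT_TIMERS"] ?? "").toLowerCase();
  return flag === "1" || flag === "true";
}

// Schedules `task` only on the owning instance.
// Returns whether the task was scheduled here.
function scheduleOwnedTimeout(
  env: Env,
  task: () => void,
  delayMs: number,
  setTimer: SetTimer,
): boolean {
  if (!ownsTimers(env)) return false;
  setTimer(task, delayMs);
  return true;
}
```

On a Meteor server this might be called as `scheduleOwnedTimeout(process.env, endPick, 60000, Meteor.setTimeout)`, where `endPick` is a hypothetical task. Because the timers live in the owning process's memory, pending timeouts are lost if that instance restarts; storing each deadline in MongoDB and rescheduling on startup is one way to cover that case.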

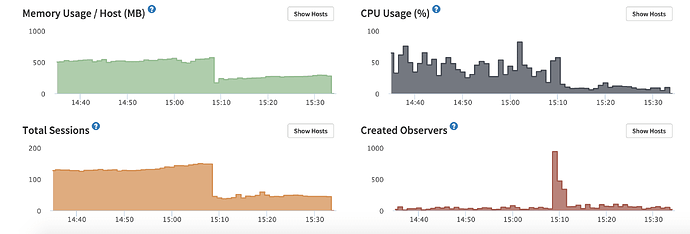

This is what the Kadira graphs now looks like. You can see the switch over in the middle:

Happy to hear people’s thoughts on any of the above.

I ended up writing a post on this here:

Thanks for the help