I’ve recently hit a fork in the road with my production app on how to handle a heavy Mongo data-crunching task. Here’s the problem:

I have thousands of client users across many server containers who all need the exact same bundle of heavily processed and compiled data from Mongo. Think players in a game all getting the final results of a series of many games, sorted, reduced, and averaged in many different ways. The result is a heavily processed Object of data that the client needs to consume.

There are three approaches in Meteor to this problem (that I know of), and I’m curious what the community thinks is the best one.

1. Do the Processing In Each App Container (Initial Approach)

Each container queries all the needed Mongo data and does the processing/compiling with its own CPU and memory, basically “aggregating” manually on the server in JavaScript. The result is stored in a temporary cache, and clients fetch it with a Meteor Method call once they’re messaged that it’s ready.

I’ve read that this method is preferred because app container CPU and memory is cheaper (I assume financially) than database CPU and memory so it’s better to aggregate manually in app code. However, it’s slower than a true database aggregation.

A major downside is that every app container processes the same data, which is overkill when every client needs the same result, and it bogs down the app’s performance for clients while this processing runs.
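For anyone who wants to picture this option, here’s a minimal sketch of “aggregate manually in app code, then cache it in the container.” It’s plain JavaScript with no Meteor or Mongo calls, and everything in it is an assumption for illustration: the document shape (`playerId`, `score`), the helper names `compileResults` and `createTtlCache`, and the TTL-based expiry.

```javascript
// Hypothetical shape of one raw game document: { playerId: 'p1', score: 42 }

// Manually "aggregate" raw documents in app code: group by player, then
// compute games played, total score, and average score, sorted by average.
function compileResults(docs) {
  const byPlayer = new Map();
  for (const { playerId, score } of docs) {
    const entry = byPlayer.get(playerId) || { playerId, games: 0, total: 0 };
    entry.games += 1;
    entry.total += score;
    byPlayer.set(playerId, entry);
  }
  return [...byPlayer.values()]
    .map((entry) => ({ ...entry, average: entry.total / entry.games }))
    .sort((a, b) => b.average - a.average);
}

// Tiny in-memory cache with a time-to-live. Each app container would hold
// its own copy, which is exactly the duplication described above.
function createTtlCache(ttlMs, now = Date.now) {
  const entries = new Map();
  return {
    get(key) {
      const hit = entries.get(key);
      if (!hit || now() - hit.storedAt > ttlMs) return undefined;
      return hit.value;
    },
    set(key, value) {
      entries.set(key, { value, storedAt: now() });
    },
  };
}
```

A Meteor Method would then just return `cache.get(key)` to each client, so the expensive part runs once per container rather than once per client.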

2. Use a MongoDB Aggregation (My Current Solution)

I was previously using the above method until I discovered Mongo aggregations! My JS compilation code ported to aggregation stages quite well. Even better, the Mongo aggregation benchmarked much faster than the JS server code in development. Great, right? Wrong, especially in production.

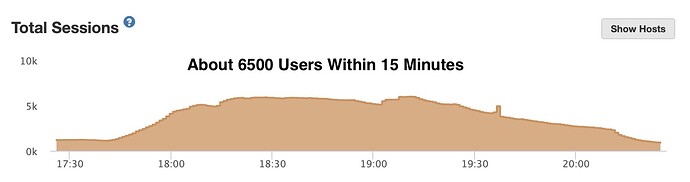

What I realized is that every app container now triggers the same Mongo aggregation, which slams Mongo’s CPU and locks up my entire app. And it’s needless, since Mongo performs the exact same aggregation X number of times at the exact same time. Super yikes. Major oversight on my part.

I read something about running Mongo aggregations on a replica set secondary instead of the primary in order to free up the primary’s CPU, so this could help. But there’s definitely no point in having Mongo run the same aggregation so many times when the same finished set of data can be shared with all the app containers. As far as I know and have researched, there’s no built-in Mongo query/aggregation cache that will serve up the same cached data if the aggregation/query params are the same.
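Since Mongo won’t do this for me, the “same params, same result” sharing has to live in app code. Here’s a rough sketch of the idea, assuming the aggregation is wrapped in some async function (`compute` below is a stand-in, not a real Mongo call) and that the params serialize to a stable string. `createSingleFlight` is a made-up name. Note that this only de-duplicates calls inside one process, so by itself it does nothing about many containers firing at once.

```javascript
// De-duplicate identical in-flight work: callers that ask for the same
// params while a computation is still running all share one promise.
function createSingleFlight(compute) {
  const inFlight = new Map();
  return function run(params) {
    // Assumption: params always serialize with the same key order.
    const key = JSON.stringify(params);
    if (inFlight.has(key)) return inFlight.get(key);

    // The executor runs immediately, so compute() is invoked exactly once
    // per key; a synchronous throw becomes a rejected promise.
    const promise = new Promise((resolve) => resolve(compute(params)));
    inFlight.set(key, promise);

    // Forget the entry once it settles so later calls recompute fresh data.
    // The no-op catch keeps this cleanup chain from raising its own
    // unhandled rejection; callers still see the failure on `promise`.
    promise
      .finally(() => inFlight.delete(key))
      .catch(() => {});
    return promise;
  };
}
```

Calling `run({ gameId: 'g1' })` ten times at once would trigger `compute` only once in that process. Keeping results around after they settle would need a separate cache on top of this.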

3. Microservice Processing

This seems like the logical option at this point. One microservice container could still use the faster Mongo aggregation code, but only call it once for each set of clients that need it. Then it would message the processed data over to each client server, which then sends it to each client.

What I worry about is scaling. If there’s a single server that’s processing all the data, it could bottleneck. Vertical scaling sounds good to a point, but then what? Horizontal scaling would only help if I wrote some kind of router that passes different processing requests to different containers of the same service somehow. Which is feasible, but will involve some complexity to get each container of that service to have a unique tag that the main app container code knows how to round-robin to.
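To make the router idea concrete, here’s a small sketch of two ways the main app could pick a service container, assuming each container has a unique tag known to the app (the tags and both function names are hypothetical). The second variant isn’t round-robin: it hashes a request key so identical requests always land on the same container, which would let that container reuse work for the same data set.

```javascript
// Plain round-robin: hand out container tags in order, wrapping around.
function createRoundRobin(tags) {
  if (tags.length === 0) throw new Error('need at least one container tag');
  let next = 0;
  return function pickNext() {
    const tag = tags[next];
    next = (next + 1) % tags.length;
    return tag;
  };
}

// Key-based routing: a simple 32-bit string hash maps the same request key
// to the same container every time (as long as the tag list is unchanged).
function pickByKey(tags, key) {
  if (tags.length === 0) throw new Error('need at least one container tag');
  let hash = 0;
  for (const ch of key) {
    hash = (hash * 31 + ch.codePointAt(0)) >>> 0; // keep within uint32
  }
  return tags[hash % tags.length];
}
```

Either way, adding or removing containers changes the mapping, so the tag list would need to be kept in sync across the app containers somehow.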

Ultimately I may still run into an issue of hitting Mongo with too many aggregations and slowing down performance for the rest of the app. So it might make sense to use app container processing within the microservice which would likely scale better.

It kinda sucked to get really excited over aggregations (and do all the work to start using them) only to find out they have heavy drawbacks and consequences. I didn’t think they would be as taxing on Mongo as they are. Overall, the aggregations themselves are fairly simple; there’s just a lot of data.

Yet another approach is to go the route of heavy denormalization, so that less (or no) aggregation is needed in real time, but this is a development hassle and managing all that denormalization is a pain.
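As a rough picture of what that denormalization could look like for something like averages: keep a running summary per player and bump it whenever a game finishes, so reads never have to aggregate. The sketch below only builds the update document (`$inc` is a standard MongoDB update operator) and applies it to a plain object so it runs without a database. The field names `games` and `totalScore` and all three function names are assumptions for illustration.

```javascript
// Build the update that bumps a per-player summary when a game finishes.
function buildSummaryUpdate(score) {
  return { $inc: { games: 1, totalScore: score } };
}

// In-memory stand-in for how $inc behaves, so this runs without Mongo:
// each listed field is increased by its delta, starting from 0 if absent.
function applyInc(summary, update) {
  const next = { ...summary };
  for (const [field, delta] of Object.entries(update.$inc)) {
    next[field] = (next[field] || 0) + delta;
  }
  return next;
}

// The average is derived at read time from the two stored counters.
function averageOf(summary) {
  return summary.games ? summary.totalScore / summary.games : 0;
}
```

Sorting and more complex reductions don’t fall out of counters this easily, which is where the maintenance pain comes in.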

Any thoughts on all this?