@philippeoliveira Just flagging that this issue is happening again today.

Hello @brianlukoff We are still investigating this.

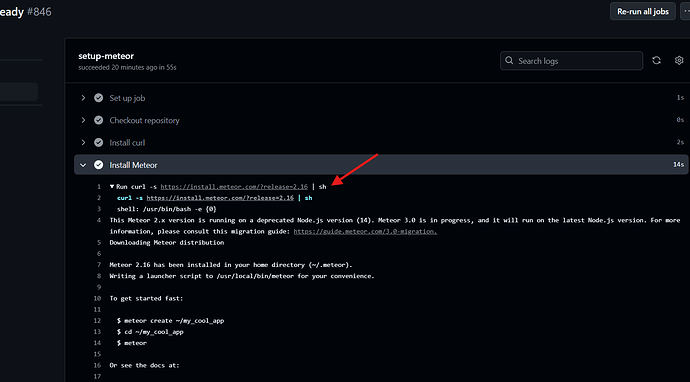

To improve testing, we have a GitHub Actions task to try and catch the same error, but we haven’t been able to reproduce it yet. Since last week, there have been hundreds of executions, without a single error.

Our task looks roughly like this:

    name: Meteor Setup and Ready

    on:
      schedule:
        - cron: '*/10 * * * *'
      workflow_dispatch:

    jobs:
      setup-meteor:
        runs-on: ubuntu-24.04
        steps:
          - name: Checkout repository
            uses: actions/checkout@v3

          - name: Install curl
            run: sudo apt-get install -y curl

          - name: Install Meteor
            run: curl https://install.meteor.com | sh

          - name: Meteor Get Ready
            run: meteor --get-ready

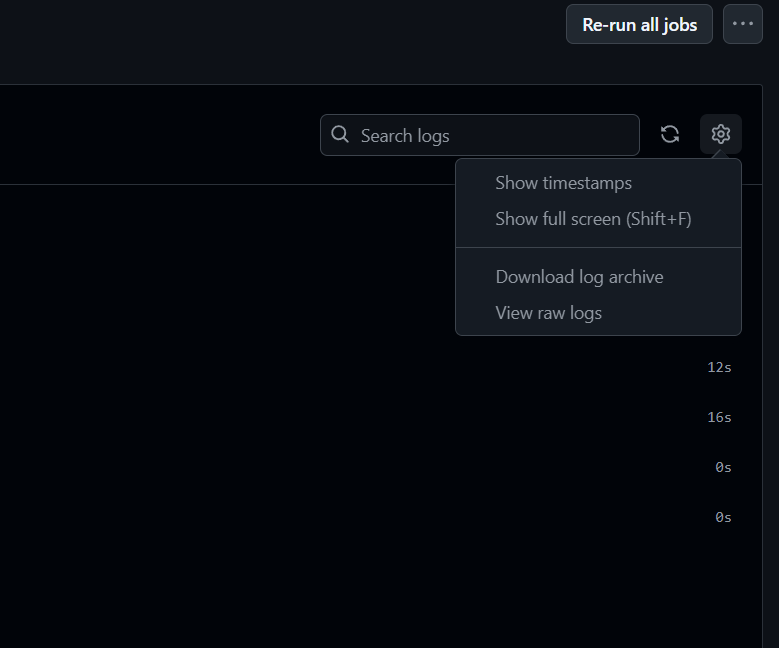

Could you send the "Download log archive" file from your GitHub Actions run to support@meteor.com?

Just sent – thanks!

Hey @philippeoliveira,

Can you explain the optimizations applied to Troposphere?

It was stable for years, but now it occasionally produces errors.

One of our clients reported a build failure due to an error 522 on warehouse.meteor.com (Troposphere):

mz4s8 09/16 12:28:05.004 AM Error: Could not get https://warehouse.meteor.com/builds/Jy8cb3Wz9JwDNu68u/1721324548529/WxT58FawTY/less-4.1.1-os+web.browser+web.browser.legacy+web.cordova.tgz; server returned [522]

Since packages are crucial for the Meteor ecosystem, it would be helpful to understand the changes you’re making to Troposphere.

Hello @filipenevola,

We made improvements last month to the Troposphere databases and some resource-level changes to the container infrastructure.

Regarding the error 522 that you sent, I think it could be something else, since warehouse.meteor.com is a CDN system that only serves static files from S3.

DNS > CDN > S3

This part has no connection to the changes we made to the Troposphere infrastructure.

I think this might be another problem and this was the first time I saw this error here. We will look into this too and give you feedback.

Yeah, I still remember that hehe.

I asked because if you made code changes to Troposphere, it could generate an invalid link or something similar.

S3/CDN problems only happened in the past with connections from China; I don’t recall any other issues.

The build worked for this client on the second attempt.

If this issue keeps happening, maybe the Meteor tool could retry instead of failing.
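Until something like that exists in the tool, a retry can be approximated on the CI side. This is a minimal sketch, not anything Meteor ships: the `retry` helper, the attempt count, and the delays are all assumptions for illustration.

```shell
# Hypothetical helper: run a command up to MAX times, doubling the pause
# between attempts. Usage: retry MAX INITIAL_DELAY_SECONDS command [args...]
retry() {
  local max="$1" delay="$2"
  shift 2
  local attempt=1
  while true; do
    # Stop as soon as the wrapped command succeeds.
    if "$@"; then
      return 0
    fi
    if [ "$attempt" -ge "$max" ]; then
      echo "retry: giving up after $attempt attempts: $*" >&2
      return 1
    fi
    echo "retry: attempt $attempt failed, sleeping ${delay}s" >&2
    sleep "$delay"
    delay=$((delay * 2))
    attempt=$((attempt + 1))
  done
}

# Example (assumed build command and output path):
# retry 3 5 meteor build ../output
```

This only hides transient failures such as an occasional 522; it does nothing for a download that hangs without returning an error.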

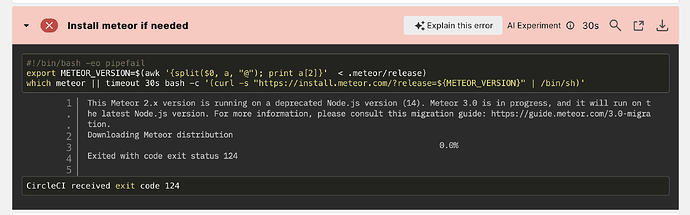

We have had failing builds all day now caused by meteor installation timing out.

If there’s any escalation button to press, please press it.

Yep, this form of retry seems like a good idea, I’ll take it to our team.

I’ve been doing some tests, and for some reason installs also fail from time to time in certain regions of France and Eastern Europe.

I will investigate this further. Perhaps certain IPs are on an AWS blacklist, or there may be a temporary network problem.

We are on CircleCI, btw.

update:

the file that fails most often for us is the dev bundle that the install script tries to download:

TARBALL_URL="https://static.meteor.com/packages-bootstrap/${RELEASE}/meteor-bootstrap-${PLATFORM}.tar.gz"

We also have the same issues in GitLab CI (running in AWS eu-west-1, Ireland):

- `curl -sSL "https://static.meteor.com/dev-bundle-node-os/v14.21.4/node-v14.21.4-linux-x64.tar.gz"` fails on every second attempt
- `curl -s https://install.meteor.com/?release=2.16 | sh` always fails
- Atmosphere packages fail occasionally

Error: Could not get https://warehouse.meteor.com/builds/fi77h3cJ7bda25ALS/1521806103603/65g5Cvzhg4/hwillson:stub-collections-1.0.7-os+web.browser+web.cordova.tgz; server returned [522]

Earlier today, we carried out maintenance on our CDN system with the goal of enhancing performance and eliminating recurring issues.

We implemented improvements and adjustments to the CDN configurations and caching system, and we are closely monitoring the results.

We are currently running a continuous test, with over 400 executions of Meteor installations so far, and none have failed. To further improve our monitoring system, we will activate self-hosted runners in various locations around the world to replicate the same testing routine.

We will continue to monitor progress and provide updates as needed.

Can you try doing new runs and let me know if you have the same problem?

I tried to run our pipeline 6 times.

- `curl -sSL "https://static.meteor.com/dev-bundle-node-os/v14.21.4/node-v14.21.4-linux-x64.tar.gz"` succeeds now all the time
- `curl -s https://install.meteor.com/?release=2.16 | sh` fails always
- Atmosphere packages I didn’t try yet

So there’s some improvement for sure.

I added a specific test for release 2.16.

We already have more than 800 executions and none have failed. We suspect the problem is specific to certain regions or to certain cloud runner providers.

We will activate advanced monitoring to cover several world regions and try to understand what is going on.

Best Regards,

Not sure if it helps, but this keeps happening for us today when installing on CircleCI Docker-based jobs. We added a timeout of 30s to ensure we fail quickly. Some jobs have run fine today, so this is quite sporadic.
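A fail-fast timeout like the one described can be sketched as below. This is only an assumption about how such a limit might be applied, not the poster's actual configuration: `run_with_limit` is a hypothetical helper built on GNU coreutils `timeout`, which exits with status 124 when the limit is hit.

```shell
# Hypothetical wrapper: abort a command that runs longer than LIMIT seconds.
# Usage: run_with_limit LIMIT_SECONDS command [args...]
run_with_limit() {
  local limit="$1"
  shift
  local status=0
  # GNU `timeout` kills the command and returns 124 when the limit expires.
  timeout "$limit" "$@" || status=$?
  if [ "$status" -eq 124 ]; then
    echo "timed out after ${limit}s: $*" >&2
  fi
  return "$status"
}

# Example (file name is an assumption): download the installer, then give it 30s.
# curl -fsSL https://install.meteor.com/ -o install-meteor.sh
# run_with_limit 30 sh install-meteor.sh
```

Checking for status 124 lets a pipeline tell a hang apart from an ordinary install error.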

I’ll investigate this to see what I can find.

It started happening to us again today too.

We decoupled our builds from this flakiness by caching the meteor distribution and all packages across builds. Read more here:
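Independently of that write-up, the general idea can be sketched as follows. It assumes the CI system saves and restores the directory where the installer puts the distribution and downloaded packages (`~/.meteor` by default). `ensure_meteor` and `METEOR_CACHE_DIR` are hypothetical names, not Meteor settings.

```shell
# Hypothetical helper: skip the network install when a restored cache exists.
# METEOR_CACHE_DIR is an illustrative variable; it defaults to ~/.meteor.
ensure_meteor() {
  local cache_dir="${METEOR_CACHE_DIR:-$HOME/.meteor}"
  # The installer leaves an executable launcher named `meteor` in this directory.
  if [ -x "$cache_dir/meteor" ]; then
    echo "Using cached Meteor in $cache_dir"
    return 0
  fi
  echo "No cached Meteor found, installing"
  curl -fsSL https://install.meteor.com/ | sh
}

# Example: call this in the job before any `meteor` command.
# ensure_meteor
```

With a warm cache the job never contacts install.meteor.com, so only cache misses are exposed to the flaky downloads.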

@brianlukoff @permb @jussink Could you guys give me some additional information?

We’ve been trying to reproduce this issue unsuccessfully.

We think it has something to do with regions and the type of machine on which the script is being run.

Now, we want to try to find a way to reproduce this issue. @philippeoliveira will set up a Lambda to run this in multiple regions.

But to further help us understand what’s happening, could you guys provide the following information?

- Are you having issues ONLY with install.meteor.com, or do you still have issues with static.meteor.com and warehouse.meteor.com?

- Which region is your CI machine running from?

- Are you getting any errors, or is the issue only about the download time?
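One way to gather answers to these questions from inside a CI job is a small curl probe. This is a rough sketch: `probe` and `probe_all` are hypothetical helpers, the 30-second cap is an assumption, and the `--write-out` variables are standard curl ones.

```shell
# Hypothetical probe: print the HTTP status and timing breakdown for one URL.
probe() {
  curl -sS -o /dev/null --max-time 30 \
    -w '%{http_code} dns=%{time_namelookup}s connect=%{time_connect}s first_byte=%{time_starttransfer}s total=%{time_total}s\n' \
    "$1" || echo "curl exited with status $? for $1"
}

# Probe the three hosts mentioned in this thread.
probe_all() {
  local url
  for url in \
    https://install.meteor.com/ \
    https://static.meteor.com/ \
    https://warehouse.meteor.com/
  do
    echo "== $url"
    probe "$url"
  done
}

# Example: run from the affected CI machine and share the output.
# probe_all
```

A status of `000` with a total close to the cap points to a hang or timeout, while a `522` or similar code points to an error returned by the CDN.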

Thanks for digging into this!

We’re seeing the issue in two places:

- A GitHub action – I don’t know exactly where this is, but it looks like they host their runners in Azure

- Building Docker containers in ECS – the us-east-1 region in AWS

We’re not seeing errors, but instead things hang indefinitely at “Downloading Meteor distribution,” so we can run the script from install.meteor.com but we can’t actually download the distribution.