Update: Redis-Oplog 1.2.0 has been released

It contains a lot of improvements and stability fixes.

This is prod-ready. But always QA the crazy out of it before deploying live, so you won’t blame me if something bad happens.

However, it does not yet have the level of perfection and elegance I want, so a lot of work still needs to be done. I have solid confirmation that this is indeed the right direction, and that it enables scalability of reactivity.

This morning I discussed with @mitar his awesome package reactive-publish and some of Meteor’s internals, and I realized that with redis-oplog I kinda reinvented some wheels already made by Meteor and did not hook into the proper places. That led me to write custom code to support other packages, and to do additional computation.

By hooking into those places, we should expect an even bigger performance increase.

However, I kept my promise and I created a small naive benchmarking tool. This is not a real-life scenario testing tool and it does not use any of the fine-tuning provided by RedisOplog, but it offers us some insight.

I only tested it locally, and the benchmark results are consistent:

- MongoDB oplog (on the local machine) is around 10%-20% faster than RedisOplog in response times.
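
For anyone who wants to reproduce a figure like this, here is a minimal sketch of how such a percentage can be computed from two sets of measured response times. The function names and the sample timings are made up for illustration only; they are not part of RedisOplog, and they are not the actual benchmark measurements.

```javascript
// Hypothetical helpers, not part of redis-oplog or the benchmarking tool.

// Average of an array of response times in milliseconds.
function mean(samples) {
  if (samples.length === 0) throw new Error('no samples');
  return samples.reduce((sum, ms) => sum + ms, 0) / samples.length;
}

// How much faster (in percent) `baselineMs` is compared to `candidateMs`,
// expressed relative to the candidate's mean response time.
function percentFaster(baselineMs, candidateMs) {
  const base = mean(baselineMs);
  const cand = mean(candidateMs);
  return ((cand - base) * 100) / cand;
}

// Made-up response times in milliseconds, NOT real measurements.
const mongoOplog = [8, 9, 8, 9, 8, 9];       // mean 8.5 ms
const redisOplog = [10, 10, 10, 10, 10, 10]; // mean 10 ms

console.log(percentFaster(mongoOplog, redisOplog).toFixed(1) + '%'); // prints "15.0%"
```

Averaging over many samples matters here, because a single local run can easily swing by more than the difference being measured.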

However, in a prod environment with a remote db, I expect better results. Whoever has the curiosity and the time can check it out, and I will be very happy to assist. Currently I want to switch my focus to what is truly important.

And this makes a lot of sense because, not having deep knowledge of how everything works behind the scenes, I had to reinvent some work done by MDG, and my version is less optimal.

Why it’s a bit slower:

- The additional overhead of sending correct data to redis.

- Having an additional store for data and performing changes on it.

- Some checks are performed twice, like which changes to send out.

I realized that a smaller throttle (faster subsequent writes to the db) brings RedisOplog closer to MongoDB oplog, which is, again, expected.

All of these things will be improved, I promise you that. I’m starting to shift focus to performance, so the changes I’m making now have a great impact on speed.

Again, to stress this: the benchmark does not test the fine-tuned reactivity, which is the crown jewel of this package. The DB was on the local server, and I had fewer than 50 active connections.

Cheers.

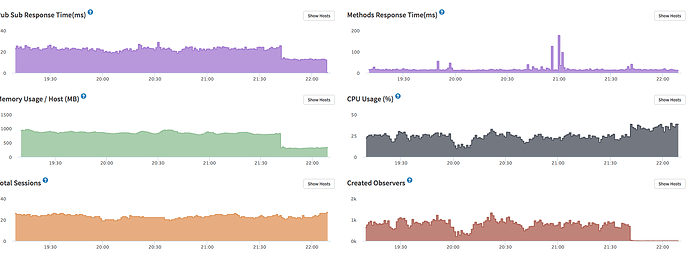

On Kadira we can see that the creation of observers went down to 3 per minute (before we had >800). CPU usage and method response time are also fine.

or the “ready” event was being sent much slower.
