There are no huge apps in the world… If your page is more than, let’s say, 1MB, the concept is probably wrong, and that 1MB should already be made of fully optimized assets and libraries. BUT… if your assets are served from GridFS in your Mongo, or your Mongo is half a planet away from your Node server, or your server’s bandwidth is 30Mbps because it runs on a nano box in Amazon, the app might look like a huuuge, monolithic, old-tech thing with no support…
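To put the bandwidth point into numbers, here is a back-of-the-envelope sketch. `transferSeconds` is a hypothetical helper. It assumes the server uplink is shared evenly between visitors, and it ignores latency, TCP slow start, TLS and compression, so real numbers will be worse:

```javascript
// Rough lower bound on how long a payload takes to leave a bandwidth-limited server.
// Assumptions: uplink split evenly between concurrent clients; no latency,
// TCP slow start, TLS overhead or compression is modelled.
function transferSeconds (payloadBytes, uplinkMbps, concurrentClients = 1) {
  const bits = payloadBytes * 8
  const bitsPerSecondPerClient = (uplinkMbps * 1e6) / concurrentClients
  return bits / bitsPerSecondPerClient
}

const ONE_MB = 1e6 // decimal megabyte, since link speeds use decimal units

console.log(transferSeconds(ONE_MB, 30).toFixed(2))     // 0.27 (one visitor)
console.log(transferSeconds(ONE_MB, 30, 20).toFixed(2)) // 5.33 (20 visitors at once)
```

A single 1MB page looks harmless on a 30Mbps uplink. With 20 people hitting a cold cache at the same time, the same page already takes seconds.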

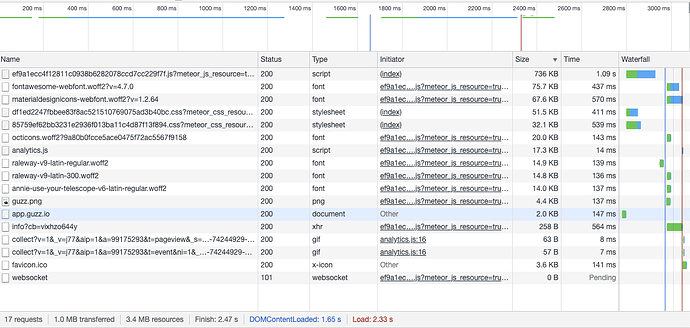

What does the Chrome Network tab say about your loads? And what is your mobile experience if you test in Chrome (desktop) with the device view set to, let’s say, an iPhone 6?
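The browser's Resource Timing API exposes the numbers the Network tab shows, in a form you can sort and paste around. Here is a minimal sketch. `heaviestResources` is a hypothetical helper that takes entries shaped like `PerformanceResourceTiming` objects (`name`, `transferSize`, `duration`):

```javascript
// Return the N resources that transferred the most bytes, with size in KB and time in ms.
function heaviestResources (entries, n = 5) {
  return [...entries]
    .sort((a, b) => b.transferSize - a.transferSize)
    .slice(0, n)
    .map(e => ({
      name: e.name,
      kb: Math.round(e.transferSize / 1024),
      ms: Math.round(e.duration)
    }))
}

// In the browser console or a DevTools snippet:
// console.table(heaviestResources(performance.getEntriesByType('resource')))
```

`transferSize` is 0 in two cases: responses served from cache, and cross-origin resources that don't send a `Timing-Allow-Origin` header. Those rows will look artificially light.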

What I noticed with React on mobile (I don’t use SSR): if you use Redux or any local data store and you load a component that receives a lot of data in props (e.g. a user’s wall of feeds), the component gets ready first and only then does the router switch to the new route. I suggest you try running your app ‘empty’ (without that data) and see how it performs.
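One way to keep a data-heavy component from holding up the route change is to hand it the data in small batches: the first batch right away, the rest on later ticks. This is a swapped-in technique, not something described above. Here is a minimal sketch. `deliverInChunks` is a hypothetical helper, and the scheduler is injectable:

```javascript
// Hand `items` to `onChunk` in slices: the first slice synchronously, then one
// slice per scheduled tick, so the first render doesn't wait for all the data.
// `schedule` defaults to setTimeout(fn, 0); passing requestIdleCallback in a
// browser is another option (an assumption, untested here).
function deliverInChunks (items, chunkSize, onChunk, schedule = fn => setTimeout(fn, 0)) {
  if (chunkSize < 1) throw new RangeError('chunkSize must be >= 1')
  let offset = 0
  const next = () => {
    const chunk = items.slice(offset, offset + chunkSize)
    offset += chunk.length
    onChunk(chunk, offset >= items.length) // second argument: true on the last chunk
    if (offset < items.length) schedule(next)
  }
  next()
}
```

In a React component you might call this from `componentDidMount` and append each chunk to state. The new route then renders with only the first batch, and the rest fills in afterwards.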

For me, the first load of every page (when I start pulling data from the DB) is lightning fast, but after that it really depends on how much data I have in Redux (data used to display cards, user profiles etc.). In React on mobile you really need virtualization or other techniques for efficient painting if your DOM is “longer” than 2 screens, and you should never load more than 2 screens on the first load. ‘2 screens’ does not sound too scientific, but I think you know what I mean…
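The core of list virtualization is working out which rows are actually on screen and rendering only those, plus a few spare ones. Here is a minimal sketch, assuming every row has the same height. `visibleRange` and `firstLoadRowCount` are hypothetical helpers. In practice a library such as react-window or react-virtualized does this for you:

```javascript
// Which rows of a fixed-height list should be in the DOM right now.
// `overscan` adds spare rows above and below the viewport so fast scrolling doesn't show blanks.
function visibleRange ({ scrollTop, viewportHeight, rowHeight, rowCount, overscan = 3 }) {
  const first = Math.floor(scrollTop / rowHeight)
  const last = Math.ceil((scrollTop + viewportHeight) / rowHeight) - 1
  return {
    start: Math.max(0, first - overscan),
    end: Math.min(rowCount - 1, last + overscan) // inclusive index
  }
}

// "Two screens" for the first load, expressed as a number of rows.
function firstLoadRowCount (viewportHeight, rowHeight, screens = 2) {
  return Math.ceil((screens * viewportHeight) / rowHeight)
}

// iPhone 6 viewport (667 CSS px tall), 100px cards, scrolled 1000px down:
console.log(visibleRange({ scrollTop: 1000, viewportHeight: 667, rowHeight: 100, rowCount: 1000 }))
// { start: 7, end: 19 }
```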

For the home page, if you use Google Analytics, Google Maps or any other global libraries, delay their start by 3-5 seconds so that they load after the page load is complete.
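Here is a sketch of “start after the page load is complete”. It assumes you want to key off the window `load` event rather than a timer started from `componentDidMount`. `runAfterLoad` is a hypothetical helper; the window object is passed in so the logic can be exercised outside a browser:

```javascript
// Run `task` `delayMs` after the window's load event, or `delayMs` from now
// if the page has already finished loading. Pass `window` as `win` in the browser.
function runAfterLoad (win, task, delayMs = 3000) {
  const start = () => win.setTimeout(task, delayMs)
  if (win.document.readyState === 'complete') {
    start()
  } else {
    win.addEventListener('load', start, { once: true })
  }
}
```

For example, call `runAfterLoad(window, loadAnalytics, 4000)`, where `loadAnalytics` stands for whatever function wraps your GA setup.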

Let me share an example of the App.js I use to schedule assets and libraries so that they don’t affect the first paint or the first full page load:

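```javascript
import React, { Component } from 'react'
import debounce from 'lodash/debounce' // assumption: any debounce helper works here

class App extends Component {
  componentDidMount () {
    const { history, setShowIsometric, handleResizeWindow } = this.props

    handleResizeWindow({ width: window.innerWidth, height: window.innerHeight })

    // Keep a reference so the listener can be removed on unmount.
    this.onResize = debounce(() => {
      handleResizeWindow({ width: window.innerWidth, height: window.innerHeight })
    }, 5000)
    window.addEventListener('resize', this.onResize)

    // ~0.4s: app-specific client libs, right after the first paint.
    setTimeout(() => {
      import('../../startup/client/libs')
      setShowIsometric()
    }, 400)

    // ~5s: Google Analytics and non-critical CSS.
    setTimeout(() => {
      import('react-ga')
        .then(ReactGA => {
          ReactGA.initialize('...........')
          ReactGA.set({ page: window.location.pathname })
          ReactGA.pageview(window.location.pathname)

          // Report client-side route changes as page views.
          history.listen(location => {
            ReactGA.set({ page: location.pathname })
            ReactGA.pageview(location.pathname)
          })
        })
      import('react-redux-toastr/lib/css/react-redux-toastr.min.css')
    }, 5000)

    // ~6s: Google Maps with the Places library.
    // TODO I might consider only starting maps if the user is logged in.
    setTimeout(() => {
      const GoogleMapsLoader = require('google-maps')
      GoogleMapsLoader.LIBRARIES = ['places']
      GoogleMapsLoader.KEY = '.........'
      GoogleMapsLoader.VERSION = '3.35'
      GoogleMapsLoader.load()
      GoogleMapsLoader.onLoad(google => {
        global.google = google
      })
    }, 6000)

    // handlePrerender is defined elsewhere in the app (not shown).
    // Pass the function itself, not its return value. componentDidMount usually
    // runs after DOMContentLoaded has already fired, so call it directly in that case.
    if (document.readyState === 'loading') {
      window.addEventListener('DOMContentLoaded', handlePrerender)
    } else {
      handlePrerender()
    }
  }

  componentWillUnmount () {
    window.removeEventListener('resize', this.onResize)
  }

  // render() and the rest of the component are omitted here.
}
```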

Serving the Meteor bundle from a CDN is what I’m considering trying next. For some reason, all tests show inefficient delivery of the biggest chunk of JS, so I’d like to make that transfer 2-3x more efficient … somehow.
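If you go the CDN route, Meteor’s `webapp` package exposes `WebAppInternals.setBundledJsCssPrefix`, which points the URLs of the bundled JS/CSS at another origin. Here is a minimal server-side sketch. It assumes a pull CDN already configured in front of your app’s origin and a hypothetical `cdnUrl` key in your settings file:

```javascript
// server/cdn.js (hypothetical file name)
import { Meteor } from 'meteor/meteor'
import { WebAppInternals } from 'meteor/webapp'

Meteor.startup(() => {
  // Hypothetical settings key, e.g. "https://cdn.example.com"
  const cdnUrl = Meteor.settings.public && Meteor.settings.public.cdnUrl
  if (cdnUrl) {
    // Bundled JS/CSS URLs are now prefixed with the CDN origin.
    WebAppInternals.setBundledJsCssPrefix(cdnUrl)
  }
})
```

Whether this beats the current delivery depends on the CDN’s compression and caching settings, so it’s worth re-running the same tests afterwards.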

With SSR, load a ‘template’ so that you have something to show, and then let the client pull the data for it. I can assure you that even with complex queries (I use cultofcoders:grapher for things like posts of followers of friends) there are no performance issues. Node and React are fast enough for 2019. The chances that Meteor (Node) or React is the slow part are like 1 in a billion … everything else is code and infrastructure design.
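Here is a framework-agnostic sketch of that ‘template’ idea. The server sends a small shell with placeholder cards and a deferred client script, and the client replaces the placeholders once its data arrives. `renderShell`, the markup, the class names and `/client.js` are all illustrative assumptions. In a Meteor app you would more likely render the shell through `onPageLoad` from `meteor/server-render`:

```javascript
// Server side: build a lightweight HTML shell with N placeholder cards so the
// first response is small and paints immediately. The client script fetches
// the real data later and swaps the placeholders out.
function renderShell ({ title, placeholderCount = 4 }) {
  const placeholders = Array.from({ length: placeholderCount },
    () => '<div class="card card--placeholder"></div>').join('')
  return [
    '<!doctype html>',
    '<html><head><meta charset="utf-8">',
    `<title>${escapeHtml(title)}</title></head>`,
    `<body><main id="app">${placeholders}</main>`,
    '<script src="/client.js" defer></script></body></html>'
  ].join('\n')
}

// Escape the characters that matter inside HTML text content.
function escapeHtml (s) {
  return String(s).replace(/[&<>"']/g, c => ({
    '&': '&amp;', '<': '&lt;', '>': '&gt;', '"': '&quot;', "'": '&#39;'
  })[c])
}
```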
