Thanks for the great post! Very interesting.

Did you experiment with different settings for the DDP rate limiter?

Wow. Thanks for the really interesting and detailed post. It would be interesting to add the pieces back in (db connection, pub/sub, methods) one by one and see which part degrades the performance. It would also be interesting to hear what MDG makes of it.

Yes great post! Super interesting. One question for my understanding: When does the performance problem occur exactly, when A) 50 users hit your URL and they first browse your website, i.e. the initial page is requested for the first time or B) after they have already browsed your website and only when they hit a login-button to log into their accounts all at the same time?

This is awesome @evolross - thanks for digging into this so thoroughly! I have a feeling this issue isn’t Galaxy specific. Would you mind opening a meteor/meteor issue about this? An issue will help kick-start the investigation process; let’s see what we can do on the Meteor side of things to help improve this.

Quick side note - Meteor 1.6 (Node 8) introduces several performance improvements that should help with this. It won’t fix everything, but it should help reduce your load stats. If you get a chance, it would be great to see how things behave when using the current Meteor 1.6 beta (1.6-beta.22).

Excellent write up.

Galaxy is fine for small apps that aren’t CPU hungry. But in my case of building an MMO, I was lighting their CPUs on fire. I ended up having to self-host, mostly due to cost.

Their cost is 2x anyone else’s.

HOWEVER it is important to know I did not have OpLog enabled, so there was no caching at all. I shit on Galaxy pretty hard in the past, but I may have just been an idiot. I’d love to re-deploy my app to their recommended setup (Galaxy + mLab) and see how I’m doing now. I bet it would run just fine.

I do plan to move back to Galaxy at some point once the $$$ starts flowing from my app.

Have you tried caching static files? For example, the big JS file that Meteor has to load at the beginning. Using Cloudflare with Page Rules, you can cache it automatically (make sure to check the response headers to confirm the file is marked as “HIT”). When a new deploy is made, it automatically sends the up-to-date version.

That way the Galaxy instance would handle “only” the methods and pub/sub calls. Otherwise, for every new user the Galaxy instance has to send a massive JS file and all the package files.
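A minimal sketch of the header check mentioned above, assuming the response carries Cloudflare’s `cf-cache-status` header. The helper name `describeCacheStatus` and the wording of its return values are made up for illustration; only the header name and its status values come from Cloudflare.

```javascript
// Hypothetical helper: interpret the cf-cache-status header of a response.
// `headers` is a plain object mapping lower-cased header names to values.
function describeCacheStatus(headers) {
  const status = (headers["cf-cache-status"] || "").toUpperCase();
  switch (status) {
    case "HIT":
      return "served from the CDN cache";
    case "MISS":
    case "EXPIRED":
      return "fetched from the origin server";
    case "DYNAMIC":
    case "BYPASS":
      return "not cached";
    case "":
      return "no cache-status header present";
    default:
      return "other status: " + status;
  }
}

console.log(describeCacheStatus({ "cf-cache-status": "HIT" }));
console.log(describeCacheStatus({ "cf-cache-status": "EXPIRED" }));
```

With a real response you would pass in the value read from the response headers of the big JS file; anything other than HIT means your own server still did the work for that request.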

I’m not sure if the gains in performance would be good enough or if that’s the bottleneck.

But one thing I’m sure of: the Compact version is just for “prototyping”. It has a very low ECU.

Does your login have custom validation, or does it require a lot of computing to allow/deny access?

Cloudflare page rules example:

Concurrent users just clicking around your app aren’t intensive on the server. Users downloading your app payload for the first time, all at the same time, are highly intensive on your server.

[EDIT]: Reading your other topic, I think the Cloudflare option may be a good solution. You can also use Argo (from Cloudflare too) to make it even faster.

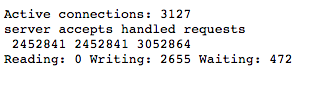

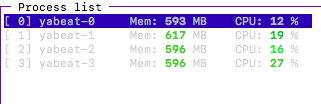

I’m also wondering why people have so many of these issues. We are running http://www.yabeat.com on a single VPS for about 60 Euro per month (plus 50 Euro for the Mongo VPS), handling about 1,500 concurrent connections (using 4 vCPUs). We are also using the Pub/Sub system for every single user to provide the playlist feature. The only issues we had were bad MongoDB indexes, which caused crashes and slow performance. After fixing them (e.g. we forgot that the order of the fields in an index is very important), everything started working fine. Oplog is also enabled to provide reactivity across all instances.

We explicitly decided against Galaxy. We want to know what we get (e.g. the speed of the CPU). In the case of yB, we are hosting on cloud VPS systems at OVH. We get high availability for a very fair price. Compared to the Galaxy pricing, this seemed more economical to us.
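To illustrate why field order matters in a compound index, here is a deliberately simplified model, not MongoDB’s actual query planner: an equality-only query can use only the leading fields of the index that it filters on. The function name and the field names (`userId`, `playlistId`, `createdAt`) are made up for the example.

```javascript
// Simplified model: count how many leading fields of a compound index
// an equality-only query can use. Real planners also consider sorts,
// range filters and more; this only shows the prefix rule.
function usablePrefixLength(indexFields, queryFields) {
  const wanted = new Set(queryFields);
  let n = 0;
  for (const field of indexFields) {
    if (!wanted.has(field)) break; // prefix is broken at the first missing field
    n += 1;
  }
  return n;
}

const query = ["userId", "playlistId"];
console.log(usablePrefixLength(["userId", "playlistId", "createdAt"], query)); // 2
console.log(usablePrefixLength(["createdAt", "userId", "playlistId"], query)); // 0
```

Both indexes contain the same fields, but in this model only the first ordering is usable for the query at all.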

The rate limiter is there to protect against DDoS attacks. It stops users from using the app too aggressively. It’s not something you ever want to affect regular user actions in the app.

How are you making use of the 4 cores? The site is impressively fast for the amount of traffic you get. Would love to learn more about the architecture.

Galaxy is not very performant. One only has to look at the line in the docs where they recommend using the smallest instance size that can run one instance of your app. Seriously?

If one instance of a web/mobile app needs 1 full core and 2 GB of RAM to run…something is wrong with your app.

My suggestion is to learn how to properly serve Node applications yourself on AWS, which is what MDG is doing. Just not super well.

Also, every app is different. And every version of every app is different. So much depends on your coders and their understanding of how computers compute.

I have my own secrets but I’m saving them for the Meteor hosting service that doesn’t suck that I hope to operate one day…

The responses these forums get sometimes… Wow.

Good luck with your secret hosting

We are using PM2 (not pm2-meteor) and starting 4 fork instances. Then we have NGINX which provides sticky sessions and distributes the traffic to the instances. To deploy our app, we’ve written a small bash script:

    #!/usr/bin/env bash

    server="user@www.server.com"
    projectFolder="/var/app"
    deployUser="user"
    meteorServer="http://www.myapp.com"

    rm -rf built
    cd ..
    meteor build .deploy/built --server "$meteorServer"
    cd .deploy
    mv built/*.tar.gz built/package.tar.gz

    ssh "$server" -p 22 "sudo mkdir -p $projectFolder && sudo mkdir -p $projectFolder/upload && sudo mkdir -p $projectFolder/current && sudo chown -R $deployUser $projectFolder && exit"

    scp -P 22 built/package.tar.gz pm2.config.js "$server":"$projectFolder/upload"

    ssh "$server" -p 22 "cd $projectFolder/upload && rm -rf $projectFolder/current/* && tar xzf package.tar.gz -C $projectFolder/current && cp pm2.config.js $projectFolder/current && rm $projectFolder/upload/* && cd $projectFolder/current && cd bundle/programs/server && npm install --production && cd $projectFolder/current && sudo pm2 restart pm2.config.js && exit"

And the pm2.config.js file:

    var appName = "app";
    var appPath = "/var/app/current";
    var rootURL = "www.myapp.com";
    var settings = '{}';

    module.exports = {
      apps: [
        {
          "name": appName + "-0",
          "cwd": appPath + "/bundle",
          "script": "main.js",
          "env": {
            "HTTP_FORWARDED_COUNT": 1,
            "ROOT_URL": rootURL,
            "PORT": 3030,
            "METEOR_SETTINGS": settings
          }
        },
        {
          "name": appName + "-1",
          "cwd": appPath + "/bundle",
          "script": "main.js",
          "env": {
            "HTTP_FORWARDED_COUNT": 1,
            "ROOT_URL": rootURL,
            "PORT": 3031,
            "METEOR_SETTINGS": settings
          }
        },
        {
          "name": appName + "-2",
          "cwd": appPath + "/bundle",
          "script": "main.js",
          "env": {
            "HTTP_FORWARDED_COUNT": 1,
            "ROOT_URL": rootURL,
            "PORT": 3032,
            "METEOR_SETTINGS": settings
          }
        },
        {
          "name": appName + "-3",
          "cwd": appPath + "/bundle",
          "script": "main.js",
          "env": {
            "HTTP_FORWARDED_COUNT": 1,
            "ROOT_URL": rootURL,
            "PORT": 3033,
            "METEOR_SETTINGS": settings
          }
        },
      ]
    };

The files are stored within our Meteor project in the .deploy folder.
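Since the four entries above differ only in name and port, the same list could be generated with a loop. This is just a sketch of that idea, not the poster’s actual file; `buildApps` and its parameter names are made up, and the values are the ones from the config above.

```javascript
// Sketch: generate one fork-mode PM2 entry per instance, each on its own port.
function buildApps({ appName, appPath, rootURL, settings, basePort, count }) {
  return Array.from({ length: count }, (_, i) => ({
    name: appName + "-" + i,
    cwd: appPath + "/bundle",
    script: "main.js",
    env: {
      HTTP_FORWARDED_COUNT: 1,
      ROOT_URL: rootURL,
      PORT: basePort + i, // 3030, 3031, ... one port per instance
      METEOR_SETTINGS: settings,
    },
  }));
}

const pm2Config = {
  apps: buildApps({
    appName: "app",
    appPath: "/var/app/current",
    rootURL: "www.myapp.com",
    settings: "{}",
    basePort: 3030,
    count: 4,
  }),
};

// In pm2.config.js this object would be assigned to module.exports.
console.log(pm2Config.apps.map((a) => a.name + ":" + a.env.PORT).join(", "));
```

Changing `count` is then the only edit needed to match a different number of cores, as long as the NGINX upstream list is updated to the same ports.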

Try Passenger.

My guess is the article poster is maxing his DB.

Your post was super valuable? You don’t even know how to scale your pub/sub problems. Pfft.

It’s not. If he uses Quad instances, everything works fine without touching his DB size.

A little shocked that only 4 instances handle this amount of traffic. You never hit limits with this setup?

I’m sort of thinking this might be something going on in the request/response layer or sessions initialization. Could be a poorly performing encryption algorithm, a bottleneck in the FS temp area, etc… since the problems occur during burst logins it could also be something about the way the accounts system is sending/retrieving/storing authentication keys.

Honestly, this would be a difficult problem to debug without direct access to all of the server metrics and logs.

Nope, we have been using this configuration since December 2016 and everything is working fine - but we can’t run MongoDB and the Meteor app on the same instance; that would hit the limits. But paying 100 Euros for this number of connections is pretty fair. We used PHP + Apache before and had to run 2 dedicated servers.

A couple more thoughts and clarifications:

@elie @tomsp When I say “simultaneous logins” I’m talking about “first time loads” of my app, at the exact same time (+/- a few seconds), with users who have never loaded the app before. And I’m not actually talking about a Meteor.login call because my app doesn’t require a Meteor.login. Similar to todos, a user hits my app and there’s some default data that loads based on the URL they entered. Definitely not the same as “concurrent users”. As far as a lot of concurrent users, on one Compact container my app can actually load 300 users, slowly, and it works fairly well. Here’s a graph demonstrating…

Though, I found about 300 users was the max before the Compact container crashes when all my test users do something at the same time (e.g. create a document) - and this simultaneous behavior is typical of my app’s usage. This isn’t bad for one Compact container. In other tests I was able to load 650 users on one Compact container with plenty of memory, CPU, etc. If I weren’t having these test users all do something at the same time in the test, the container would probably handle that many users fine (that’s my theory at least - should probably test this).

I was thinking that all this may not actually be that poor of performance. Handling a burst of 40 first-time hits to your app at the same time isn’t bad for a Compact (0.5 ECU) container. The typical app (that doesn’t have my crazy use-case OR doesn’t have some publicity event that triggers a bunch of instant usage) would likely never hit 40 simultaneous first-time downloads until the app had a much, much higher amount of traffic. Your simultaneous first-time download count would be much lower at any given time - i.e. it would take having thousands of organic users to get to 40 simultaneous first loads within a few seconds. So if you ratchet up to a Standard or Double container, you’re going to be fine for a long time. Well, as long as you don’t end up on Product Hunt, Slashdot, etc. (which has happened to users on this forum, and their app consequently got DoS’d, hence this thread).

@hwillson There is still perhaps an issue here. I blew up a graph of the Redline13 Average Response Times for the todos app with all the various assets that get downloaded (HTML, JS, CSS, fonts, images, etc.). They all load really quickly except for the one JS file. That’s the outlier. I was mistakenly thinking there was more than one Meteor JS file that gets downloaded, but as @raphaelarias said, there’s a single big one.

The issue is that when I have this “burst of users”, all those assets load very quickly, except the one JS file. You can see them in the graph below (and in my graphs above). All those colored lines at the bottom are the various assets. And you can see they’re still loading fast compared to the one main JS file, the outlier, whose delay grows linearly. With that said, the JS file is much larger than the other assets at 541KB (most of the others are less than 20KB and some are only a few bytes).

The delay on this JS file matches exactly the delay you can witness when loading the app. That delay is the population of data into your app templates. I should say, it’s the completion of loading of data into your templates, because you can see in your app (both in my app and todos) that the data slowly loads, template by template, and populates over thirty to sixty seconds. How Redline13/PhantomJS knows that the data hasn’t loaded and how that relates to the JS file - I don’t know. That’s for the MDG devs. I don’t know if that JS file simply doesn’t load entirely until the data is ready and/or it’s waiting for some return value from that JS file.

This delayed behavior is the issue that I would report. Why does everything else load fairly fast but the data? Even under major load? It’s not the database @iDoMeteor, because when you crank up CPU, the data loads super fast as expected. Perhaps it’s something to do with the “data querying and delivering” process getting blocked by the Meteor server trying to send all those 541KB JS files to all the simultaneous users. If it weren’t for this outlier, the load times of all the other assets (colored lines at the bottom of the graph) are actually fairly reasonable given the simultaneous hits. These smaller asset response times are more in line with what I would expect.
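As a rough back-of-the-envelope check on that theory, here is how much data the server has to push for the main JS file alone during such a burst. It assumes the 40 simultaneous first-time loads and the 541KB file size mentioned above, and that 541KB is the size actually sent over the wire, which is an assumption.

```javascript
// Rough estimate: total bytes of the main JS bundle sent during a burst.
function burstPayloadMB(users, bundleKB) {
  return (users * bundleKB) / 1024;
}

const totalMB = burstPayloadMB(40, 541);
console.log("about " + totalMB.toFixed(1) + " MB for the JS bundle alone"); // about 21.1 MB
```

That is not a huge amount of data in absolute terms, which fits the observation that more CPU, rather than a bigger database, makes the delay go away.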

@raphaelarias The Cloudflare caching is promising, especially given the above. I will definitely try and test. So the idea is that you identify all assets (HTML, JS, images, etc.) and have Cloudflare cache these and deliver them before your Meteor server gets the chance? It seems the only downside to this is when you update your app, you’ll have to wait for Cloudflare to re-cache your JS asset, right? Have you tried this and it works for you? AWS CloudFront can do something similar, right? It would be cool if there were some way to tell your Meteor app about your own CDN to deliver the big JS file and not rely on a middleware service to intercept the traffic, but that’s cool if it works.

@XTA Your hosting setup looks really cool. If I find I need to scale way up, I’ll probably have to move off of Galaxy for cost reasons. I do like Galaxy though for its metrics feedback (and now with Meteor APM especially), and the ability to scale up container counts with one click is amazing. It’s helpful in my use-case because I can ask clients when their large-audience event is happening and then just scale up containers temporarily to serve the crowd. The problem is that this is annoying, non-scalable with a lot of events, and just generally prone to forgetting and/or leaving a ton of Quad containers turned on. But it works for now, until I figure out how to get better performance with less CPU power. What country is OVH in? Do they use their own cloud or are they built on top of AWS, etc.? Is it easy for you to add more “containers” if needed?

@waldgeist Yeah, I don’t think DDP rate limiting would help here because it’s per-connection rate limiting, which isn’t my issue.
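To make that point concrete, here is a minimal fixed-window limiter keyed by connection id. It is only an illustration of per-connection limiting, not the DDPRateLimiter implementation; `createLimiter`, the limit of 5 calls per second and the connection ids are all made up. Keep in mind too that the initial bundle download is a plain HTTP request rather than a DDP call.

```javascript
// Illustrative fixed-window rate limiter keyed by connection id.
function createLimiter(maxCalls, windowMs) {
  const windows = new Map(); // connectionId -> { start, count }
  return function allow(connectionId, now) {
    const w = windows.get(connectionId);
    if (!w || now - w.start >= windowMs) {
      // First call from this connection, or its window has expired.
      windows.set(connectionId, { start: now, count: 1 });
      return true;
    }
    w.count += 1;
    return w.count <= maxCalls;
  };
}

const allow = createLimiter(5, 1000);

// One connection making 10 calls within the same window: only 5 pass.
let passedSingle = 0;
for (let i = 0; i < 10; i++) if (allow("conn-A", 0)) passedSingle++;

// 40 distinct connections making one call each: all 40 pass.
let passedBurst = 0;
for (let i = 0; i < 40; i++) if (allow("conn-" + i, 0)) passedBurst++;

console.log(passedSingle, passedBurst); // 5 40
```

A limiter like this throttles one aggressive client but lets a burst of many new clients through untouched, which is exactly the load pattern described in this thread.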

Yes. The good thing about Cloudflare vs CloudFront, for example, is that Cloudflare does it automatically for you. If they don’t have the file in cache, they fetch it from your server, and next time Cloudflare will serve the file, not your server. Your server in this case would only have to handle the HTML (I think you can force Cloudflare to cache HTML) and the DDP connections.

For optimal caching, set the Page Rules (required), use Argo (faster caching due to server proximity) and the Pro plan, which will decrease missed requests (when Cloudflare does not serve the file). In general it should be below 30 dollars per month if you use both Argo and the Pro plan; otherwise it is free.

Yes. You can, after the deploy, access your app, to force Cloudflare to re-cache it (if you have no Hot code reload and no user connected). And after it’s cached (it takes only one user to cache it), you are good to go.

We use it in production and it made our app faster. Now it’s the fast Cloudflare CDN serving static files, not Galaxy (the way it should be).

CloudFront can do it too. It’s not as transparent as Cloudflare, but it gives you much more control.

I think there is something you can set on Meteor to deliver static files from a CDN, but I’m not sure about the JS file.

Dynamic imports can help too, if your JS file is too big.

Galaxy does not have an API or auto-scaling, but it’s a Meteor app, you can try to reverse engineer it and do it yourself.

EDIT: I just tested it. Our JS file is 1.3MB in size (after some optimisations). Without Cloudflare (or with the flag marked as EXPIRED) it takes 3.5s to download (on a 150Mbps connection); with Cloudflare, it takes 969ms (HIT) (in a second test it was 465ms).
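For a sense of scale, the numbers above can be compared against the ideal transfer time for the link. This is only a rough sketch that assumes 1.3MB means 1.3 million bytes on the wire and ignores latency, TLS and TCP slow start; `idealTransferMs` is a made-up helper.

```javascript
// Ideal time to move a file over a link, ignoring latency and protocol overhead.
function idealTransferMs(sizeMB, linkMbps) {
  return ((sizeMB * 8) / linkMbps) * 1000;
}

const ideal = idealTransferMs(1.3, 150);
console.log("ideal transfer: " + ideal.toFixed(0) + " ms"); // 69 ms

// Speedup of the cached downloads relative to the 3.5s uncached download.
console.log("first HIT:  " + (3500 / 969).toFixed(1) + "x faster"); // 3.6x
console.log("second HIT: " + (3500 / 465).toFixed(1) + "x faster"); // 7.5x
```

Under these assumptions the uncached 3.5s is far above what the connection itself would need, which suggests most of that time was spent waiting on the origin rather than on bandwidth.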