Hello, I am working on a monitoring system where I have to monitor about 1000 IoT devices. These devices are actually “users” of my Meteor app, each one running on its own single-board computer. I use the socialize:user-presence package to track their activity (whether they are online or offline).

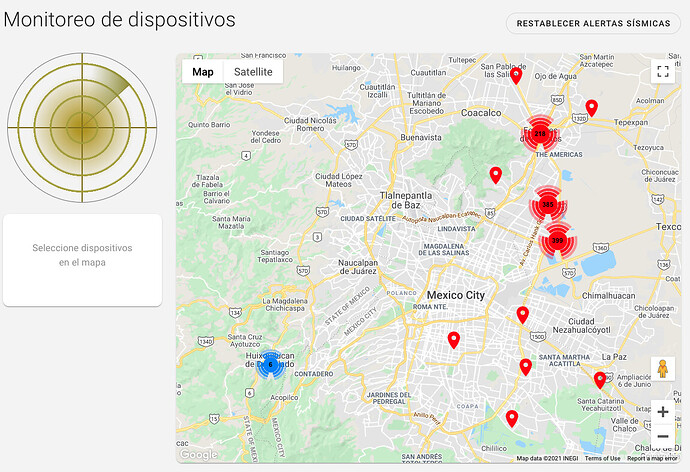

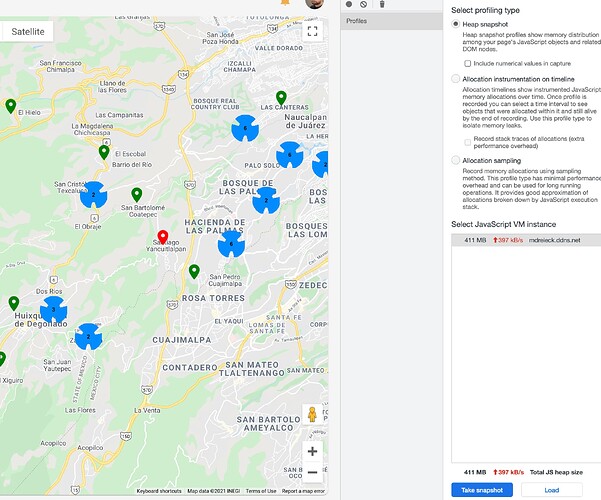

I wrote a publication that returns all these devices so I can display them on a map, where I can see whether each one is online or offline. This is a preview of that screen:

*I used a Google Maps plugin to cluster markers that are close together.

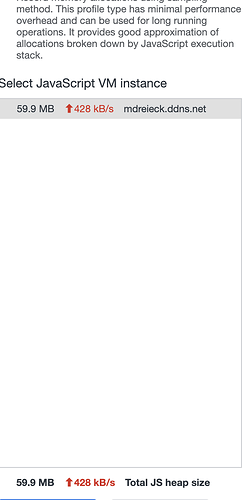

I noticed that on an AWS virtual machine the first load of this data is fine, but on subsequent loads the whole system becomes very slow (it takes a long time to log in or to open other screens). I suspect the publication is the cause: in the database logs I can see that the same query is executed repeatedly, and I think this creates a bottleneck.

```
[root@ip-172 monitoreo-sismos]# docker-compose logs -t --tail=1 mongo
mongo | 2021-02-15T22:58:35.448582052Z 2021-02-15T22:58:35.448+0000 I COMMAND [conn4] command monitoreo.users command: getMore { getMore: 8965079632326770040, collection: "users", batchSize: 1000, lsid: { id: UUID("4ce476cd-c250-4b8e-8873-7201fa52f2c5") }, $db: "monitoreo" } originatingCommand: { aggregate: "users", pipeline: [ { $match: { profile.profile: "device", profile.isDeleted: false, profile.idCompany: "mjifJahSjrfnvsvXY" } }, { $lookup: { from: "users", localField: "profile.idCompany", foreignField: "_id", as: "company" } }, { $unwind: { path: "$company", preserveNullAndEmptyArrays: true } }, { $lookup: { from: "audios", localField: "profile.defaultAudio.idAudio", foreignField: "_id", as: "audio" } }, { $unwind: { path: "$audio", preserveNullAndEmptyArrays: true } } ], cursor: {}, lsid: { id: UUID("4ce476cd-c250-4b8e-8873-7201fa52f2c5") }, $db: "monitoreo" } planSummary: IXSCAN { profile.idCompany: 1 } cursorid:8965079632326770040 keysExamined:314 docsExamined:314 cursorExhausted:1 numYields:3 nreturned:904 queryHash:A300CFDE planCacheKey:A2B33459 reslen:1861557 locks:{ ReplicationStateTransition: { acquireCount: { w: 3621 } }, Global: { acquireCount: { r: 3621 } }, Database: { acquireCount: { r: 3621 } }, Collection: { acquireCount: { r: 3620 } }, Mutex: { acquireCount: { r: 3618 } } } storage:{} protocol:op_msg 163ms

[root@ip-172 monitoreo-sismos]# docker-compose logs -t --tail=1 mongo
Attaching to mongo
mongo | 2021-02-15T22:58:43.741503153Z 2021-02-15T22:58:43.741+0000 I COMMAND [conn5] command monitoreo.users command: getMore { getMore: 4099534238120887219, collection: "users", batchSize: 1000, lsid: { id: UUID("4ce476cd-c250-4b8e-8873-7201fa52f2c5") }, $db: "monitoreo" } originatingCommand: { aggregate: "users", pipeline: [ { $match: { profile.profile: "device", profile.isDeleted: false, profile.idCompany: "mjifJahSjrfnvsvXY" } }, { $lookup: { from: "users", localField: "profile.idCompany", foreignField: "_id", as: "company" } }, { $unwind: { path: "$company", preserveNullAndEmptyArrays: true } }, { $lookup: { from: "audios", localField: "profile.defaultAudio.idAudio", foreignField: "_id", as: "audio" } }, { $unwind: { path: "$audio", preserveNullAndEmptyArrays: true } } ], cursor: {}, lsid: { id: UUID("4ce476cd-c250-4b8e-8873-7201fa52f2c5") }, $db: "monitoreo" } planSummary: IXSCAN { profile.idCompany: 1 } cursorid:4099534238120887219 keysExamined:314 docsExamined:314 cursorExhausted:1 numYields:3 nreturned:904 queryHash:A300CFDE planCacheKey:A2B33459 reslen:1861557 locks:{ ReplicationStateTransition: { acquireCount: { w: 3621 } }, Global: { acquireCount: { r: 3621 } }, Database: { acquireCount: { r: 3621 } }, Collection: { acquireCount: { r: 3620 } }, Mutex: { acquireCount: { r: 3618 } } } storage:{} protocol:op_msg 168ms

[root@ip-172 monitoreo-sismos]# docker-compose logs -t --tail=1 mongo
Attaching to mongo
mongo | 2021-02-15T22:58:52.055748001Z 2021-02-15T22:58:52.055+0000 I COMMAND [conn4] command monitoreo.users command: getMore { getMore: 1502150716348272659, collection: "users", batchSize: 1000, lsid: { id: UUID("ee7eb77e-8842-49a9-9a4b-579dbe76d721") }, $db: "monitoreo" } originatingCommand: { aggregate: "users", pipeline: [ { $match: { profile.profile: "device", profile.isDeleted: false, profile.idCompany: "mjifJahSjrfnvsvXY" } }, { $lookup: { from: "users", localField: "profile.idCompany", foreignField: "_id", as: "company" } }, { $unwind: { path: "$company", preserveNullAndEmptyArrays: true } }, { $lookup: { from: "audios", localField: "profile.defaultAudio.idAudio", foreignField: "_id", as: "audio" } }, { $unwind: { path: "$audio", preserveNullAndEmptyArrays: true } } ], cursor: {}, lsid: { id: UUID("ee7eb77e-8842-49a9-9a4b-579dbe76d721") }, $db: "monitoreo" } planSummary: IXSCAN { profile.idCompany: 1 } cursorid:1502150716348272659 keysExamined:314 docsExamined:314 cursorExhausted:1 numYields:3 nreturned:904 queryHash:A300CFDE planCacheKey:A2B33459 reslen:1861557 locks:{ ReplicationStateTransition: { acquireCount: { w: 3621 } }, Global: { acquireCount: { r: 3621 } }, Database: { acquireCount: { r: 3621 } }, Collection: { acquireCount: { r: 3620 } }, Mutex: { acquireCount: { r: 3618 } } } storage:{} protocol:op_msg 168ms
```
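One rough way to check the suspicion is to group these mongo log lines by `queryHash` and measure how far apart the runs are. The sketch below is plain Node.js with no dependencies; the regexes assume the `docker-compose logs -t` line format shown above, and `summarizeRuns`, `countByHash` and `intervalsMs` are hypothetical helper names, not part of any tool.

```javascript
// Sketch: group MongoDB COMMAND log lines by queryHash to see how often the
// same aggregation runs. Assumes the `docker-compose logs -t` format above.

// Epoch milliseconds (UTC) of the first "YYYY-MM-DDTHH:MM:SS.mmm" in a line.
function parseTimestampMs(line) {
  const m = line.match(/(\d{4})-(\d{2})-(\d{2})T(\d{2}):(\d{2}):(\d{2})\.(\d{3})/);
  if (!m) return null;
  const [y, mo, d, h, mi, s, ms] = m.slice(1).map(Number);
  return Date.UTC(y, mo - 1, d, h, mi, s, ms);
}

// One entry per log line that carries a queryHash; other lines
// (shell prompts, "Attaching to mongo", blanks) are skipped.
function summarizeRuns(logText) {
  const runs = [];
  for (const line of logText.split('\n')) {
    const hash = line.match(/queryHash:(\w+)/);
    if (!hash) continue;
    const returned = line.match(/nreturned:(\d+)/);
    const duration = line.match(/(\d+)ms\s*$/);
    runs.push({
      timeMs: parseTimestampMs(line),
      queryHash: hash[1],
      nreturned: returned ? Number(returned[1]) : null,
      durationMs: duration ? Number(duration[1]) : null,
    });
  }
  return runs;
}

// How many times each queryHash appears.
function countByHash(runs) {
  const counts = {};
  for (const r of runs) counts[r.queryHash] = (counts[r.queryHash] || 0) + 1;
  return counts;
}

// Milliseconds between consecutive runs (in log order).
function intervalsMs(runs) {
  const out = [];
  for (let i = 1; i < runs.length; i++) out.push(runs[i].timeMs - runs[i - 1].timeMs);
  return out;
}

// Abbreviated copies of the three log lines above ("..." marks removed text).
const sample = [
  'mongo | 2021-02-15T22:58:35.448582052Z ... nreturned:904 queryHash:A300CFDE ... 163ms',
  'Attaching to mongo',
  'mongo | 2021-02-15T22:58:43.741503153Z ... nreturned:904 queryHash:A300CFDE ... 168ms',
  'mongo | 2021-02-15T22:58:52.055748001Z ... nreturned:904 queryHash:A300CFDE ... 168ms',
].join('\n');

const runs = summarizeRuns(sample);
console.log(countByHash(runs)); // → { A300CFDE: 3 }
console.log(intervalsMs(runs)); // → [ 8293, 8314 ]
```

On the three excerpts above this reports the same `queryHash` (A300CFDE) three times, roughly 8.3 seconds apart, each run returning 904 documents with a `reslen` of about 1.86 MB. Feeding it a longer stretch of the mongo log would show whether that rhythm continues.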

The logs of the Node app container don't show any errors (only the "Starting app..." message).

I also display these devices in another view that has a datatable, and there I solved the problem with server-side pagination. In the monitoring view, however, I need to observe all the devices at once. What would be a better solution for that without overloading the server?
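For reference, server-side pagination of an aggregation usually comes down to appending `$sort`, `$skip` and `$limit` stages so that only one page is published at a time. A minimal sketch, assuming 0-based page numbers and sorting by `_id`; `paginationStages` is a hypothetical helper, not the code used in the datatable view:

```javascript
// Sketch: $sort/$skip/$limit stages for server-side pagination of an
// aggregation. `page` is 0-based; the default page size and sort key are
// assumptions, not values from the original app.
function paginationStages({ page = 0, pageSize = 50, sort = { _id: 1 } } = {}) {
  if (!Number.isInteger(page) || page < 0) {
    throw new RangeError('page must be a non-negative integer');
  }
  if (!Number.isInteger(pageSize) || pageSize <= 0) {
    throw new RangeError('pageSize must be a positive integer');
  }
  return [
    { $sort: sort },            // sort on a unique key so page order is deterministic
    { $skip: page * pageSize }, // skip the documents of earlier pages
    { $limit: pageSize },       // at most one page is returned
  ];
}

// Third page (page index 2) of 50 devices.
const pipeline = [
  { $match: { 'profile.profile': 'device' } },
  ...paginationStages({ page: 2, pageSize: 50 }),
];
console.log(JSON.stringify(pipeline));
// → [{"$match":{"profile.profile":"device"}},{"$sort":{"_id":1}},{"$skip":100},{"$limit":50}]
```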

Notes:

- Virtual machine specs: 2 vCPUs, 4 GB RAM, 80 GB HDD, CentOS, deployed with a Docker image (disney/meteor-base).
- The issue doesn't happen on my own computer, which I guess is due to its specs: MacBook Pro, six-core Core i7, 16 GB RAM, SSD.
- Meteor 2.0, Mongo 4.4, Node 12.18, Vue 2.6.11 (akryum).
- I am using indexes on certain fields (the ones I query on), and I am also considering optimizing my query to return only the necessary information. My current query is:

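```js
// PublishEndpoint, ReactiveAggregate and StaticProfiles come from other
// packages/modules of the app (their imports are not shown here).
import { Meteor } from 'meteor/meteor';

const monitoringDevicesPublication = new PublishEndpoint('monitoring.devices', function (idCompany, deleted = false) {
  // Match every (non-)deleted device user, optionally limited to one company
  const queryMatch = {
    'profile.profile': StaticProfiles.device.name,
    'profile.isDeleted': deleted,
  };

  if (idCompany) {
    queryMatch['profile.idCompany'] = idCompany;
  }

  // TODO: Optimize query by removing unnecessary fields with the $project operator
  ReactiveAggregate(this, Meteor.users, [
    { $match: queryMatch },
    // Join the company the device belongs to (companies are also stored in users)
    {
      $lookup: {
        from: 'users',
        localField: 'profile.idCompany',
        foreignField: '_id',
        as: 'company',
      },
    },
    { $unwind: { path: '$company', preserveNullAndEmptyArrays: true } },
    // Join the device's default audio
    {
      $lookup: {
        from: 'audios',
        localField: 'profile.defaultAudio.idAudio',
        foreignField: '_id',
        as: 'audio',
      },
    },
    { $unwind: { path: '$audio', preserveNullAndEmptyArrays: true } },
  ], { warnings: false });
});
```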
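The `$project` idea from the TODO could look roughly like the sketch below. It is untested against the real app: the pipeline is built by a plain function (`buildMonitoringPipeline` is a hypothetical name) so the stages can be checked without Meteor, `deviceProfileName` stands in for `StaticProfiles.device.name`, and the projected field names are placeholders, since the post doesn't show which fields the map needs. Inside the publication, its return value would replace the inline array passed to the existing `ReactiveAggregate` call.

```javascript
// Sketch: the publication's pipeline built by a plain function, with an
// optional trailing $project stage (the optimization from the TODO).
// `deviceProfileName` stands in for StaticProfiles.device.name.
function buildMonitoringPipeline({ deviceProfileName, idCompany, deleted = false, projection } = {}) {
  const queryMatch = {
    'profile.profile': deviceProfileName,
    'profile.isDeleted': deleted,
  };
  if (idCompany) {
    queryMatch['profile.idCompany'] = idCompany;
  }

  const pipeline = [
    { $match: queryMatch },
    { $lookup: { from: 'users', localField: 'profile.idCompany', foreignField: '_id', as: 'company' } },
    { $unwind: { path: '$company', preserveNullAndEmptyArrays: true } },
    { $lookup: { from: 'audios', localField: 'profile.defaultAudio.idAudio', foreignField: '_id', as: 'audio' } },
    { $unwind: { path: '$audio', preserveNullAndEmptyArrays: true } },
  ];

  // With an inclusion projection (all values 1), only the listed paths
  // plus _id are returned.
  if (projection && Object.keys(projection).length > 0) {
    pipeline.push({ $project: projection });
  }
  return pipeline;
}

// Hypothetical field names: replace them with the fields the map markers use.
const pipeline = buildMonitoringPipeline({
  deviceProfileName: 'device',
  idCompany: 'mjifJahSjrfnvsvXY',
  projection: {
    'profile.location': 1,
    status: 1,
    'company.profile.name': 1,
    'audio.name': 1,
  },
});
console.log(pipeline.length); // → 6
```

Since a final `$project` only trims the output, the two `$lookup` stages most likely still run in full on every execution; what shrinks is the roughly 1.86 MB `reslen` per run seen in the logs.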

I hope someone can give me some advice on this situation. Thanks in advance.