@ivo if you have private documents, you will probably need something like ostrio:files. If you just need a CDN, optionally with signed URLs, you can drop the files in S3 and serve them through CloudFront.
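If you go the signed-URL route, CloudFront's "canned policy" signature is simple enough to sketch with Node's built-in `crypto` module. You RSA-SHA1-sign a fixed JSON policy that contains the URL and an expiry time, then append `Expires`, `Signature` and `Key-Pair-Id` query parameters. This is a minimal sketch, assuming the matching public key has been uploaded to CloudFront and added to a trusted key group on the distribution. The domain, key ID and function names are hypothetical placeholders, and the demo uses a throwaway key pair. In a real app you would more likely call `getSignedUrl` from the `@aws-sdk/cloudfront-signer` package.

```typescript
import { createSign, createVerify, generateKeyPairSync } from "node:crypto";

// CloudFront's URL-safe base64 variant: '+' -> '-', '=' -> '_', '/' -> '~'.
function toCloudFrontBase64(buf: Buffer): string {
  return buf.toString("base64").replace(/\+/g, "-").replace(/=/g, "_").replace(/\//g, "~");
}

// Reverse of the above, used only for the local verification demo.
function fromCloudFrontBase64(s: string): Buffer {
  return Buffer.from(s.replace(/-/g, "+").replace(/_/g, "=").replace(/~/g, "/"), "base64");
}

// Canned policy: this exact JSON shape, with no whitespace.
function cannedPolicy(url: string, expiresAt: number): string {
  return JSON.stringify({
    Statement: [{ Resource: url, Condition: { DateLessThan: { "AWS:EpochTime": expiresAt } } }],
  });
}

// Sign the canned policy with RSA-SHA1 and append the three CloudFront query parameters.
function signCloudFrontUrl(url: string, keyPairId: string, privateKeyPem: string, expiresAt: number): string {
  const signer = createSign("RSA-SHA1");
  signer.update(cannedPolicy(url, expiresAt));
  const signature = toCloudFrontBase64(signer.sign(privateKeyPem));
  const sep = url.includes("?") ? "&" : "?";
  return `${url}${sep}Expires=${expiresAt}&Signature=${signature}&Key-Pair-Id=${keyPairId}`;
}

// --- Demo with a throwaway key pair (NOT a key registered with CloudFront) ---
const { publicKey, privateKey } = generateKeyPairSync("rsa", {
  modulusLength: 2048,
  publicKeyEncoding: { type: "spki", format: "pem" },
  privateKeyEncoding: { type: "pkcs8", format: "pem" },
});

const resource = "https://dxxxxxxxxxxxxx.cloudfront.net/private/report.pdf"; // hypothetical
const expires = Math.floor(Date.now() / 1000) + 3600; // one hour from now
const signedUrl = signCloudFrontUrl(resource, "KXXXXXXXXXXXXX", privateKey, expires); // hypothetical key ID

// Check the signature locally by rebuilding the policy from the Expires parameter.
const params = new URL(signedUrl).searchParams;
const verifier = createVerify("RSA-SHA1");
verifier.update(cannedPolicy(resource, Number(params.get("Expires"))));
const signatureOk = verifier.verify(publicKey, fromCloudFrontBase64(params.get("Signature") ?? ""));

console.log(signedUrl);
console.log("signature valid:", signatureOk);
```

Because the expiry is baked into the signed policy, changing `Expires` in the URL invalidates the signature. That is what stops someone from extending a link's lifetime.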

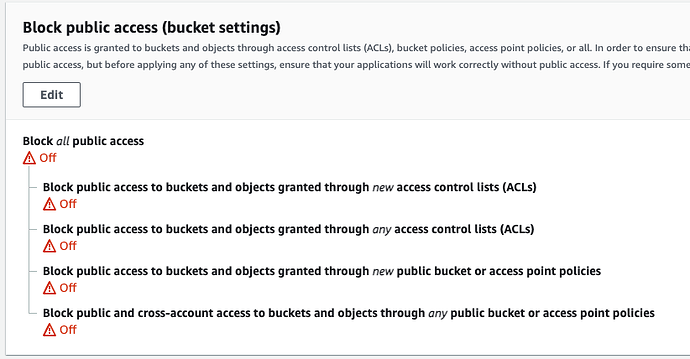

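When you connect CloudFront to S3, CloudFront offers an option to restrict bucket access so files can only be read through CloudFront (via an Origin Access Identity). When you check it, the bucket policy gets updated and direct S3 links to your files stop working (as long as the objects aren't also made public some other way, e.g. through public-read ACLs).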

CORS configuration:

```json
[
  {
    "AllowedHeaders": [
      "Authorization",
      "Content-Type",
      "x-requested-with",
      "Origin",
      "Content-Length",
      "Access-Control-Allow-Origin"
    ],
    "AllowedMethods": [
      "PUT",
      "HEAD",
      "POST",
      "GET"
    ],
    "AllowedOrigins": [
      "https://www.someurl.com",
      "https://apps.someurl.com",
      "http://192.168.1.72:3000",
      "http://192.168.1.72:3004"
    ],
    "ExposeHeaders": [],
    "MaxAgeSeconds": 3000
  }
]
```
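To see why the CORS configuration above lets the local dev server upload with `PUT`, here is a simplified, hypothetical model of how S3 matches a browser preflight against a CORS rule. The `Origin` must match an `AllowedOrigins` entry (which may contain one `*` wildcard). The `Access-Control-Request-Method` must be listed in `AllowedMethods`. Every header in `Access-Control-Request-Headers` must match an `AllowedHeaders` entry. S3 uses the first rule that matches. The type and function names are made up for illustration, and real S3 behaviour has more edge cases than this.

```typescript
// Shape of one rule in the S3 console's JSON CORS editor (only the fields used here).
interface CorsRule {
  AllowedHeaders?: string[];
  AllowedMethods: string[];
  AllowedOrigins: string[];
  ExposeHeaders?: string[];
  MaxAgeSeconds?: number;
}

// Anchored RegExp where '*' matches any run of characters and everything else is literal.
function wildcard(pattern: string, flags = ""): RegExp {
  const parts = pattern.split("*").map((p) => p.replace(/[.+?^${}()|[\]\\]/g, "\\$&"));
  return new RegExp(`^${parts.join(".*")}$`, flags);
}

// Simplified check of one preflight (OPTIONS) request against one rule.
function preflightMatches(rule: CorsRule, origin: string, method: string, requestHeaders: string[]): boolean {
  const originOk = rule.AllowedOrigins.some((o) => wildcard(o).test(origin));
  const methodOk = rule.AllowedMethods.includes(method);
  // Every requested header must match some AllowedHeaders entry; header names are case-insensitive.
  const headersOk = requestHeaders.every((h) =>
    (rule.AllowedHeaders ?? []).some((allowed) => wildcard(allowed, "i").test(h)),
  );
  return originOk && methodOk && headersOk;
}

// S3 applies the first rule that matches the request.
function findRule(rules: CorsRule[], origin: string, method: string, requestHeaders: string[]): CorsRule | undefined {
  return rules.find((r) => preflightMatches(r, origin, method, requestHeaders));
}

// The configuration from the post.
const corsRules: CorsRule[] = [
  {
    AllowedHeaders: ["Authorization", "Content-Type", "x-requested-with", "Origin", "Content-Length", "Access-Control-Allow-Origin"],
    AllowedMethods: ["PUT", "HEAD", "POST", "GET"],
    AllowedOrigins: ["https://www.someurl.com", "https://apps.someurl.com", "http://192.168.1.72:3000", "http://192.168.1.72:3004"],
    ExposeHeaders: [],
    MaxAgeSeconds: 3000,
  },
];

console.log(findRule(corsRules, "http://192.168.1.72:3000", "PUT", ["content-type"]) !== undefined); // true
console.log(findRule(corsRules, "http://localhost:3000", "PUT", ["content-type"]) !== undefined); // false: origin not listed
console.log(findRule(corsRules, "https://apps.someurl.com", "DELETE", []) !== undefined); // false: method not listed
console.log(findRule(corsRules, "https://apps.someurl.com", "PUT", ["x-amz-acl"]) !== undefined); // false: header not listed
```

The last line is a common gotcha. If your upload code sends an extra header such as `x-amz-acl`, it has to be added to `AllowedHeaders` too, or the browser blocks the request before it reaches S3.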

Bucket Policy:

The first statement allows the CloudFront Origin Access Identity to read objects. S3 refuses any request that no statement allows, so unless objects are made public some other way, only CloudFront can read them. The last statement lets my local station upload. Anyone can forge a Referer header, and AWS advises against relying on `aws:Referer` to protect anything sensitive, so I only use it for development.

```json
{
  "Version": "2008-10-17",
  "Id": "PolicyForCloudFrontPrivateContent",
  "Statement": [
    {
      "Sid": "1",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::cloudfront:user/CloudFront Origin Access Identity xxxxxxxxxxxxxx"
      },
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::your_bucket/*"
    },
    {
      "Sid": "S3PolicyStmt-DO-NOT-MODIFY-1559725794648",
      "Effect": "Allow",
      "Principal": {
        "Service": "s3.amazonaws.com"
      },
      "Action": "s3:PutObject",
      "Resource": "arn:aws:s3:::your_bucket/*",
      "Condition": {
        "StringEquals": {
          "s3:x-amz-acl": "bucket-owner-full-control",
          "aws:SourceAccount": "xxxxxxxxx"
        },
        "ArnLike": {
          "aws:SourceArn": "arn:aws:s3:::your_bucket"
        }
      }
    },
    {
      "Sid": "Allow put requests from my local station",
      "Effect": "Allow",
      "Principal": "*",
      "Action": "s3:PutObject",
      "Resource": "arn:aws:s3:::your_bucket/*",
      "Condition": {
        "StringLike": {
          "aws:Referer": "http://192.168.1.72:3000/*"
        }
      }
    }
  ]
}
```
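To make the "allowing CloudFront disallows everything else" point concrete, here is a heavily simplified, hypothetical evaluator for the bucket policy above. An explicit `Deny` always wins. Otherwise an `Allow` is needed, and a request that no statement allows is implicitly denied. This sketch ignores ACLs, S3 Block Public Access, IAM identity policies and presigned-URL credentials. It also compares condition keys case-sensitively (AWS does not) and approximates `ArnLike` with a plain glob. The Referer pattern is written as `http://192.168.1.72:3000/*`, since a real browser Referer always has `//` after the scheme.

```typescript
type Principal = "*" | { AWS?: string; Service?: string };
type Caller = "anonymous" | { AWS?: string; Service?: string };

interface Statement {
  Sid: string;
  Effect: "Allow" | "Deny";
  Principal: Principal;
  Action: string;
  Resource: string;
  Condition?: Record<string, Record<string, string | string[]>>;
}

interface S3Request {
  caller: Caller;
  action: string;
  resource: string;
  context: Record<string, string>; // condition keys such as aws:Referer
}

// '*' matches any run of characters, '?' exactly one; everything else is literal.
function glob(pattern: string): RegExp {
  const escaped = pattern.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
  return new RegExp(`^${escaped.replace(/\\\*/g, ".*").replace(/\\\?/g, ".")}$`);
}

function principalMatches(p: Principal, caller: Caller): boolean {
  if (p === "*") return true; // everyone, including anonymous callers
  if (caller === "anonymous") return false;
  return (p.AWS !== undefined && p.AWS === caller.AWS) || (p.Service !== undefined && p.Service === caller.Service);
}

// Every operator block and every key inside it must match; a missing key fails the condition.
function conditionsMatch(cond: Statement["Condition"], ctx: Record<string, string>): boolean {
  if (!cond) return true;
  return Object.entries(cond).every(([op, keys]) =>
    Object.entries(keys).every(([key, expected]) => {
      const actual = ctx[key];
      if (actual === undefined) return false;
      const values = Array.isArray(expected) ? expected : [expected];
      if (op === "StringEquals") return values.includes(actual);
      if (op === "StringLike" || op === "ArnLike") return values.some((v) => glob(v).test(actual));
      throw new Error(`operator not modelled: ${op}`);
    }),
  );
}

function evaluate(policy: Statement[], req: S3Request): "Allow" | "Deny" {
  const applicable = policy.filter(
    (s) =>
      principalMatches(s.Principal, req.caller) &&
      glob(s.Action).test(req.action) &&
      glob(s.Resource).test(req.resource) &&
      conditionsMatch(s.Condition, req.context),
  );
  if (applicable.some((s) => s.Effect === "Deny")) return "Deny"; // explicit deny always wins
  if (applicable.some((s) => s.Effect === "Allow")) return "Allow";
  return "Deny"; // implicit deny: no statement allowed the request
}

const OAI = "arn:aws:iam::cloudfront:user/CloudFront Origin Access Identity xxxxxxxxxxxxxx";
const policy: Statement[] = [
  { Sid: "1", Effect: "Allow", Principal: { AWS: OAI }, Action: "s3:GetObject", Resource: "arn:aws:s3:::your_bucket/*" },
  {
    Sid: "S3PolicyStmt-DO-NOT-MODIFY-1559725794648",
    Effect: "Allow",
    Principal: { Service: "s3.amazonaws.com" },
    Action: "s3:PutObject",
    Resource: "arn:aws:s3:::your_bucket/*",
    Condition: {
      StringEquals: { "s3:x-amz-acl": "bucket-owner-full-control", "aws:SourceAccount": "xxxxxxxxx" },
      ArnLike: { "aws:SourceArn": "arn:aws:s3:::your_bucket" },
    },
  },
  {
    Sid: "Allow put requests from my local station",
    Effect: "Allow",
    Principal: "*",
    Action: "s3:PutObject",
    Resource: "arn:aws:s3:::your_bucket/*",
    Condition: { StringLike: { "aws:Referer": "http://192.168.1.72:3000/*" } },
  },
];

const object = "arn:aws:s3:::your_bucket/uploads/photo.jpg"; // hypothetical object key
const localReferer = { "aws:Referer": "http://192.168.1.72:3000/" };
console.log(evaluate(policy, { caller: { AWS: OAI }, action: "s3:GetObject", resource: object, context: {} })); // Allow
console.log(evaluate(policy, { caller: "anonymous", action: "s3:GetObject", resource: object, context: {} })); // Deny (implicit)
console.log(evaluate(policy, { caller: "anonymous", action: "s3:PutObject", resource: object, context: localReferer })); // Allow
console.log(evaluate(policy, { caller: "anonymous", action: "s3:PutObject", resource: object, context: {} })); // Deny (no Referer)
```

One thing the model makes visible is that the CORS configuration allows `http://192.168.1.72:3004`, but this policy only accepts uploads whose Referer starts with port 3000. So anonymous uploads from the 3004 app would still be refused by S3.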