Hi folks,

We’re currently running our app on a single AWS t2.medium instance, since we don’t have thousands of concurrent users. We deploy to that one instance with mup.

However, we expect a major user burst tomorrow. We can’t estimate how many of them might access the app at the same time, but we want to take some precautions.

I am thinking about the following strategy:

- Spin up a larger instance tonight as a replacement for the t2.medium, e.g. a t2.large

- Have an even larger instance as a backup, e.g. t2.xlarge

- If the load exceeds our expectations, hot swap the instances by re-assigning the Elastic IP to the larger one

- Both servers will use the same MongoDB instance on Atlas
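
For reference, the swap step could be scripted roughly like this. It is only a sketch, assuming boto3 and an Elastic IP in a VPC. `swap_elastic_ip` is a helper name I made up, and the allocation and instance IDs in the usage comment are placeholders.

```python
def swap_elastic_ip(ec2_client, allocation_id, target_instance_id):
    """Point an Elastic IP at another instance and return the new association ID.

    ec2_client is expected to be a boto3 EC2 client (or anything that
    exposes the same associate_address method).
    """
    response = ec2_client.associate_address(
        AllocationId=allocation_id,      # the Elastic IP's allocation ID
        InstanceId=target_instance_id,   # the larger standby instance
        AllowReassociation=True,         # move the IP even though it is in use
    )
    return response["AssociationId"]


# Hypothetical usage (requires boto3 and AWS credentials):
#   import boto3
#   ec2 = boto3.client("ec2")
#   swap_elastic_ip(ec2, "eipalloc-PLACEHOLDER", "i-PLACEHOLDER")
```

As far as I understand, existing connections to the old instance would drop at the moment of the swap, which is what the question below is about.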

Are there any caveats to this approach? In particular, what will happen to users who are signed in at the moment of the swap?

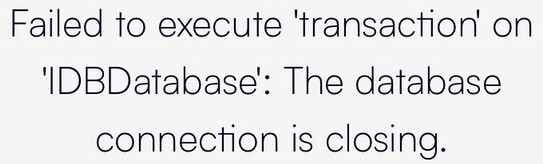

Will Meteor just re-establish the WebSocket connection (as it normally does when the connection drops for a moment, e.g. on mobile), or will the users be logged out completely?

And is there a way to handle this better, without changing the overall infrastructure too much?