It is still unclear what tools are available to unit-test an app. Velocity was great for that. Does anybody know a good unit-testing tool (app level, client + server) for Meteor?

Before Meteor 1.3, I think the best thing is to split your app into packages and use test-packages. That’s what we are doing so far in the Todos example app we’re building for the guide: https://github.com/meteor/todos/blob/master/packages/lists/lists-tests.js

In Meteor 1.3 I think it will be easier to do this without going through the hassle of building Meteor packages, since you will be able to unit test individual ES2015 modules. Before we release the guide with 1.3 we’ll migrate the Todos app to that structure, so there will be a good set of example tests to look at.

We’re also writing a whole ton of tests internally for the Galaxy UI, which won’t be open source but will be a good testbed for the new testing features we’re working on for 1.3.

With end-to-end testing, you test the application at the user-interface level, typically by having a robot fill in inputs and press buttons and then assert that the application state has changed (such as text on the page).

Typically this will test all layers of the application, including the database, so it’s very close to what a real user experiences.

Most end-to-end frameworks don’t have any knowledge of the web framework you’re using, but some include hooks to set up and tear down the app so that it’s in an expected state.

I was afraid of the test-packages answer …

Do you mean we’ll have to write unit tests inside our own modules? How will the tests be run automatically? And what if we don’t use modules? I’m even more confused; could you give us a short example?

A test-driven Todos app tutorial (just like in Phoenix) would be really great, by the way.

If you don’t use modules in 1.3 then you’ll have to test the same way you do today.

However, if you use 1.3 modules (and you should), you can use any JavaScript library you’d like to unit test your module. You can just write a test file, import your Meteor module in that file, and then stub/mock out any dependencies outside of the module as needed. Test runners like Jasmine, Mocha, Tape, etc. have watchers that can run in the background, and the tests finish in milliseconds.

I’m planning on recording some screencasts migrating a 1.2 project (react-ive meteor) to 1.3 while adding unit tests, end-to-end tests and a few integration tests. Stay tuned!

I’ve just found in this month-old post that there are lots of great ideas being implemented (well, I hope so).

Also, this very interesting article about the importance of unit tests “vs” end-to-end tests is worth reading:

http://www.alwaysagileconsulting.com/articles/end-to-end-testing-considered-harmful/

This is spot on: UI-level testing is harmful. Most e2e frameworks try to focus too much on the UI and end up building a huge pile of technical debt. These tests look more like test scripts than executable specifications. Test scripts come after the coding as an afterthought and almost always end up getting disabled, because they go out of date and nobody wants to update them. Executable specifications, on the other hand, are a development-level technique and drive development. The latter is a long-lived practice that is self-maintaining in committed teams.

I strongly recommend you watch this presentation that shows some pioneering work on Modeling by Example. This technique allows you to apply exactly the right number of tests at the e2e, acceptance and unit levels (it can be done with Mocha/Jasmine, not just Cucumber).

Another good read on this subject is Google’s testing blog post on end-to-end testing, where they say:

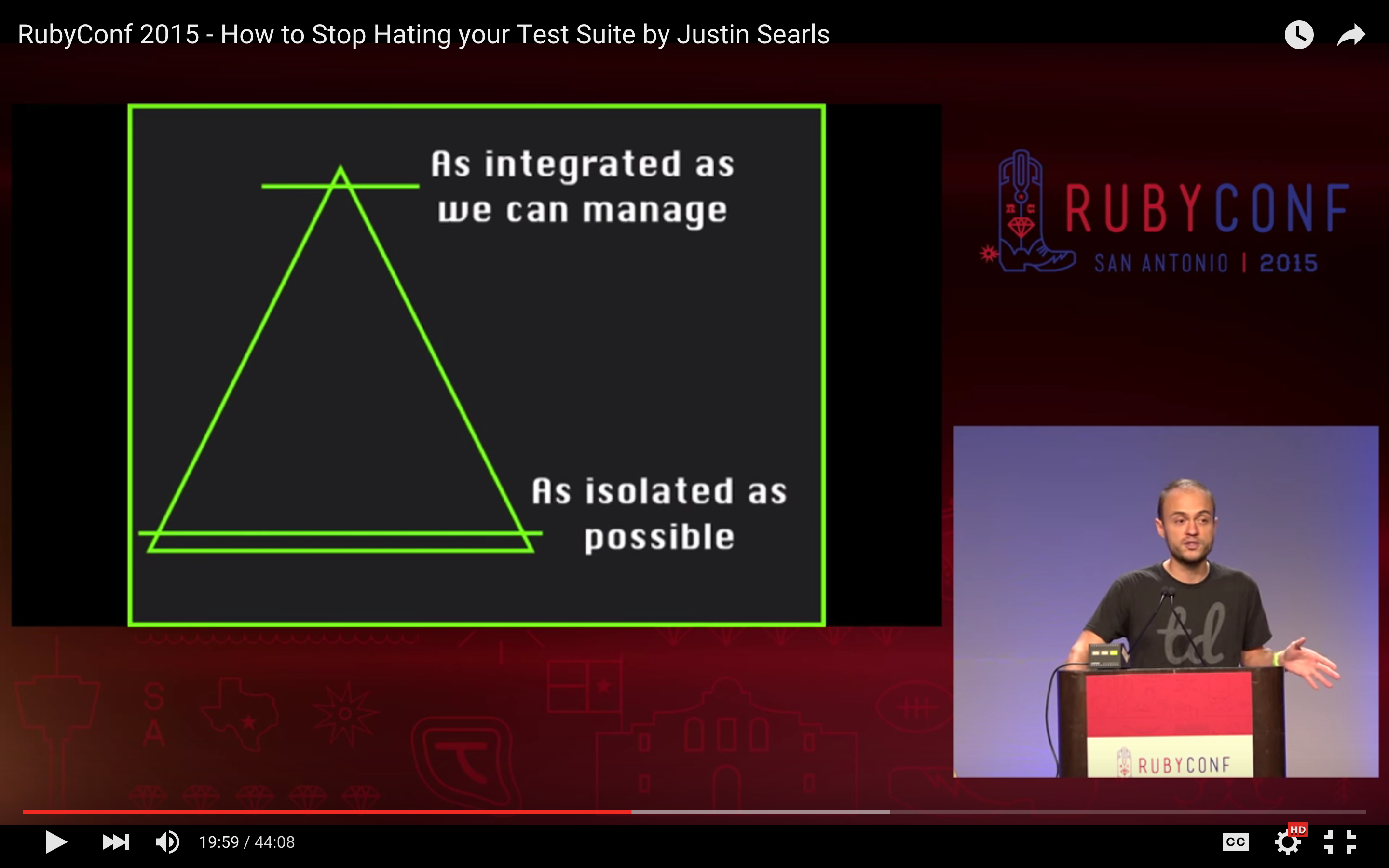

Google often suggests a 70/20/10 split: 70% unit tests, 20% integration tests, and 10% end-to-end tests. The exact mix will be different for each team, but in general, it should retain that pyramid shape.

We have built Chimp on the exact principles of the testing triangle, and it currently allows you to run e2e and acceptance (integration) tests.

For unit testing, @SkinnyGeek1010 is absolutely right with this statement:

Unfortunately, even with a fresh Skills Matter account I get “Because of its privacy settings, this video cannot be played here.”

Sounds great. I’m really looking forward to seeing this because I’m not sure how to do unit testing with Mocha outside the dying Velocity.


Meteor (with CoffeeScript) made me switch from the Python/Django universe to the JavaScript universe. Modules are everywhere in Python, and so are unit tests (assert is a Python core keyword).

But in this new JavaScript universe I’m still wondering how I should restructure my Meteor apps into modules. I can’t wait to see the following in the Todos app:

[quote=“sashko, post:30, topic:15084”]

you will be able to unit test individual ES2015 modules. Before we release the guide with 1.3 we’ll migrate the Todos app to that structure, so there will be a good set of example tests to look at.

[/quote]

[quote=“awatson1978, post:17, topic:15084”]

Typical. This kind of language is what drives women away from the tech industry.

[/quote]

I lack words… Why is this necessary?

So I guess I am looking for a unit testing framework. Sorry, but I am not too deep into these testing terms.

Relax. I also did not get this.

Who says I’m not relaxed? And I totally get it. I just think this kind of ranting about gender/tech belongs in its own post, as it has nothing to do with the stated topic.


To get back to the subject: what do you guys think Meteor will advocate in the testing section of the Meteor Guide?

Searching for .mp4 in the source and pasting that link in a new window solved it here.

(There’s a related blog post here.)

I’ve just started using Chimp with Cucumber and WebDriverIO (Selenium) for a few complicated, automated UX tests. It works great for that and was very quick to set up, even though I only have basic experience with Mocha (using the mike:mocha package).

With just those few tests in place, I’m already wanting to decouple the test logic as much as possible, so I can reuse it within that and other testing solutions.

On the value and scope of testing (i.e. the pyramid structure), I think everyone agrees it’s very context-dependent. Maybe 70/20/10 is a good starting point until your project informs adjustments. Here’s a timed link to a lucid, mere-mortal summary of the DHH, Martin Fowler and Kent Beck hangouts, which touches on some of that.

Hmmm… I must be the only one whose sarcasm detector was going off the charts.

edit: I stand corrected

This is the same video here: https://vimeo.com/149564297 - it’s very similar to the blog post, but it shows a LOT more detail of how to do it in reality.

Emily is absolutely right and, in my opinion, has a better grasp of the problem than DHH does. I really like the TextTest tool she created. You can achieve very similar results by using subcutaneous testing to capture the data that will be used by the UI, instead of testing the UI itself. This can’t work if you put a ton of logic into the view; however, if you write your code using UI components, it works really well, because you can unit test the UI components like crazy (with something like React Test, for example). Subcutaneous testing draws the boundary above the units and below the UI, so these tests count as integration/acceptance tests without the penalty of being sensitive to UI changes.

Emily was also right in saying that the education needs to happen with both novice and advanced developers, to show how acceptance testing is properly done and how it’s different from end-to-end testing.

Just my 2c!

I’m really curious about your thoughts on using an ‘hourglass shape’ that relies heavily on unit tests and end-to-end tests, with the end-to-end tests making sure the units are ‘plugged together’ correctly. I’ve tried both, and the hourglass shape has (so far) led to faster iteration and more confident releases (well, more unit tests than e2e tests, but few integration tests). The video snippet at the bottom is what prompted me to share, as it seems some other people use this too.

Here are my experiences:

tl;dr

Functional programming has made unit testing faster, easier and more reliable, so I don’t need as many integration tests. E2E tests have become less flaky in recent years, and not testing design/CSS/DOM in e2e tests helps keep them less brittle. It ends up looking like 90% unit / 5% integration / e2e for all of the happy paths & a few critical failure paths.

End-to-end testing has gotten a lot less 'flaky' (for me) in the past year. Currently I don't have nearly the number of issues I did 3 years ago trying to get my 'working feature' to pass green. With the right abstractions, tests (mostly) only broke when user behavior changed (as expected). Keeping the CSS out of them helped the most.

Having the right abstractions for clicking a button or filling an input also helped. Filling an input by its label name or clicking a button by its text (instead of jQuery/XPath selectors) led to fewer tests failing due to design changes. The catch is that if that text changes, the test breaks. However, these only take seconds to update, and they keep the DOM out of the tests so that the HTML structure can change as needed, so I consider it the lesser of two evils.

80% of the time, my integration tests gave me the most issues. Perhaps this is because of Meteor. They seemed to be the most brittle under refactoring and provided a false sense of security that my ‘units’ were working together correctly. Trying to test/mock out all the dependencies correctly was tedious as well (whereas in a unit test it’s clear what to mock/stub).

Some pieces, like models, are great for integration tests, because I want to make sure they work with my DB correctly; and things like sending out an email when creating a user can’t be tested with end-to-end tests.

Migrating to functional programming has made my test code the most maintainable and made testing easy in general. It allowed me to use unit testing very heavily and effectively as I didn’t have to worry about globals and instances of objects that I couldn’t test.

React and Redux are also very functional. React allowed me to unit test the tricky, logic-heavy bits of UI, and Redux allows one to unit test user interactions without the UI.

Some counterarguments may be that my apps had more user-facing functionality, and perhaps a more backend-service-heavy app would require more integration tests. Also, I’ve only been using this approach for 8 months, so you could argue that the e2e tests haven’t had enough time for atrophy to set in (although the UI has changed quite a bit).

Anyhow, I’d love to hear any pushback or holes poked in this, as it will help me build better tests.

[How to Stop Hating your Test Suite](https://www.youtube.com/watch?v=VD51AkG8EZw) *(queued up to the hourglass part)*

Because there is a year-long history of some Velocity team members playing favorites, scrubbing contributions from contributors in a biased manner, adding sexist and harassing language to package names and APIs, and making Velocity inappropriate for use in enterprise environments. There was a blatant power grab around the ‘officially sanctioned’ testing framework, and it played out in a typical sexist boys’ club manner. Typical.

Now that StarryNight can produce basic FDA compatible documentation, and provides an isomorphic API that is committed to avoiding non-inclusive language, I’m beginning a call-to-action to scrub any documents that go into the guide of language that can get flagged by an HR department.

I respect the fact that there are some people with egos and reputations on the line who want to save face. So, I’m not doubling down on the muckraking, and am giving them time to update APIs, documentation, and package names. But they certainly didn’t respect my contributions during Velocity development, so I’m not backing down either. Calling out inappropriate language is necessary in order to raise the bar with regard to professional language.

And I’m not just complaining from the peanut gallery. We’re providing an alternative solution, with working examples and documentation via an entire release track. Velocity may have been designed with industry ‘best practices’ in mind; but if industry best practices mean bro-culture and harassing language, you better damn believe that some of us will rally the funds and resources necessary to produce an alternative option that complies with federal regulatory approval processes and has inclusive language.

Having worked 15 years in QA and testing, and having been involved with clinical trials and FDA approval of drugs and devices, I never once in all that time had to ‘spy’ or ‘mock’ anything, nor use ‘cucumbers’ or ‘jasmine’ in a hospital or clinic. Not before Meteor. So I’m calling that language out as not being a best practice. It’s certainly not the healthcare industry’s best practice.

The state of the testing ecosystem is that there is now an FDA ready testing solution available. Some of us want to see the language cleaned up in the broader testing ecosystem; so we’re beginning the process of stating grievances and proposing an alternative solution. From here on out, there is a higher standard available.

ps: I personally don’t have any particular problem with Chimp, or with the technologies behind Velocity and Chimp. StarryNight uses almost all of the same technologies, in fact. My clients and I do care about federal regulation 21CFR820.75, having verification and validation tests, a clean isomorphic API, printing test results to white paper, and being able to run the test runner on common third-party continuous integration service providers. We’re starting from the assumption that there will necessarily be thousands of validation test scripts as part of any app, and clients will want to shop around for CI servers. That’s how our industry operates. But since the self-appointed leaders of the ‘official testing framework’ decided those concerns weren’t valid, we’re speaking up, putting our money where our mouth is, and providing an alternative testing solution for the clinical track.

Justin reminds me of Jesse Pinkman from Breaking Bad

Someone sent that presentation to me a while back, and I had it on my to-watch list, so I just watched the whole thing now, and I have to say it’s one of the best presentations about testing that I’ve ever watched, second only to Konstantin’s Modeling by Example breakthroughs. Let me explain why (which will also answer your questions).

tl;dr

You can still maintain the testing pyramid and reap all the benefits of Justin’s presentation if you start with acceptance tests to drive the development of your app at the domain layer, then create a UI on top and write a handful of UI-based tests.

The testing pyramid came from a measurement of the proportions of test types in relation to one another. A direct correlation was observed between testing strategies that did not have the proportions of a pyramid and those that were painful, difficult to maintain and slow. The testing pyramid is a guideline based on the symptoms of test strategies; at a glance, it does not tell you a huge amount about the cause.

Justin’s presentation is awesome at talking about the cause and is 100% correct in every single thing he said. In particular, he speaks about what frameworks provide, and how integration testing is tightly coupled to the infrastructure that a framework provides. In Rails it may be Active Record, and in Meteor it would be Pub/Subs. He stipulates that testing at this level creates redundancy with unit testing for very little gain, if any. In that respect, he’s right that it is pointless, and it’s better to trust that a framework is doing its job. Of course, the units are not enough, so he says you should absolutely have some e2e tests that make sure the whole thing works.

It’s what Justin didn’t say that I find most interesting! In my opinion, he has left out the domain. All of the tiered application architectures speak of some sort of domain layer (AKA a service layer) which handles business or domain logic. This layer uses the framework infrastructure as a means to an end, which is creating value for the end consumer (user or system).

The domain consists of models that abstract the real-world business domain into concepts and entities, and the functions (or services) that transform the models based on interactions (user or system) with the business logic. You are already doing this in your functional programming approach, which is awesome! What I love about document-based DBs like Mongo is that they usually model the domain nicely without requiring an ORM, but I digress!

If you imagine an application that has a completely speech-based UI, then you can imagine simply talking to the domain and it responding to you. The UI therefore is a “joystick” to control the domain (as Konstantin mentions in his talk). So by using the domain, you get the value of the application, and the UI is one way for you to get that value.

Acceptance testing is about making sure the service layer is using the domain entities and that the infrastructure provided by the framework is working. This is much more than infrastructure and configuration testing, which is what Justin is advising against. Konstantin talks about this in the same talk and refers to the infrastructure leakage that occurs when you try to model more than the domain.

On the subject of tools being much better these days, and on your approach of using UI text as the locator strategy instead of the more brittle CSS and ID rules: these are 100% awesome practices to be employing, and they do reduce flakiness. Ultimately, what you’re doing is testing your domain through the UI. This will work out for you, especially if you are not in a huge team, where shared understanding about what tests are doing is more difficult to communicate. Typically, tests that run through the UI and click a few buttons don’t tell you WHAT an app is doing; they tell you HOW it’s doing it. This creates a translation cost for the next developer who picks them up, whereas the domain language does not have this cost. So there is more to UI testing than flakiness.

I was a HUGE proponent of outside-in testing until quite recently, when I discovered there’s a much better way. When creating new features, we start a discovery process at the domain, and as we cover our domain fully, we then paint the UI on top. Using some polymorphism, the exact same acceptance tests can have a UI-based version that actually goes through the UI. This ends up with a triangle and not an hourglass.

Does that even matter? If they don’t want to accept contributions, isn’t it their right?

Could you provide some examples? I haven’t heard of these, and I want to understand what you’re talking about.

Why do you care about the “official test framework” title, and do you feel like there’s a better candidate than Velocity? It’s REALLY hard for me to actually believe that the fact that you’re a woman has any influence on any of this. Then again, I don’t want to impose ignorance on a real issue; it’s just really hard for me to believe people can be clever enough to write code and still be dumb enough to think women are not equal to men.


The Todos example seems to use a special package just for its own testing?

https://github.com/meteor/todos/blob/master/packages/lists/package.js#L23

which uses some other test packages.

https://github.com/meteor/todos/blob/master/packages/todos-test-lib/package.js

Is that a best practice or just the most practical way to decouple the shifting landscape around tests?