Justin reminds me of Jesse Pinkman from Breaking Bad.

Someone sent me that presentation a while back and it had been on my to-watch list, so I just watched the whole thing. I have to say it’s one of the best presentations about testing I’ve ever seen, second only to Konstantin’s Modeling by Example breakthroughs. Let me explain why (which will also answer your questions).

tl;dr

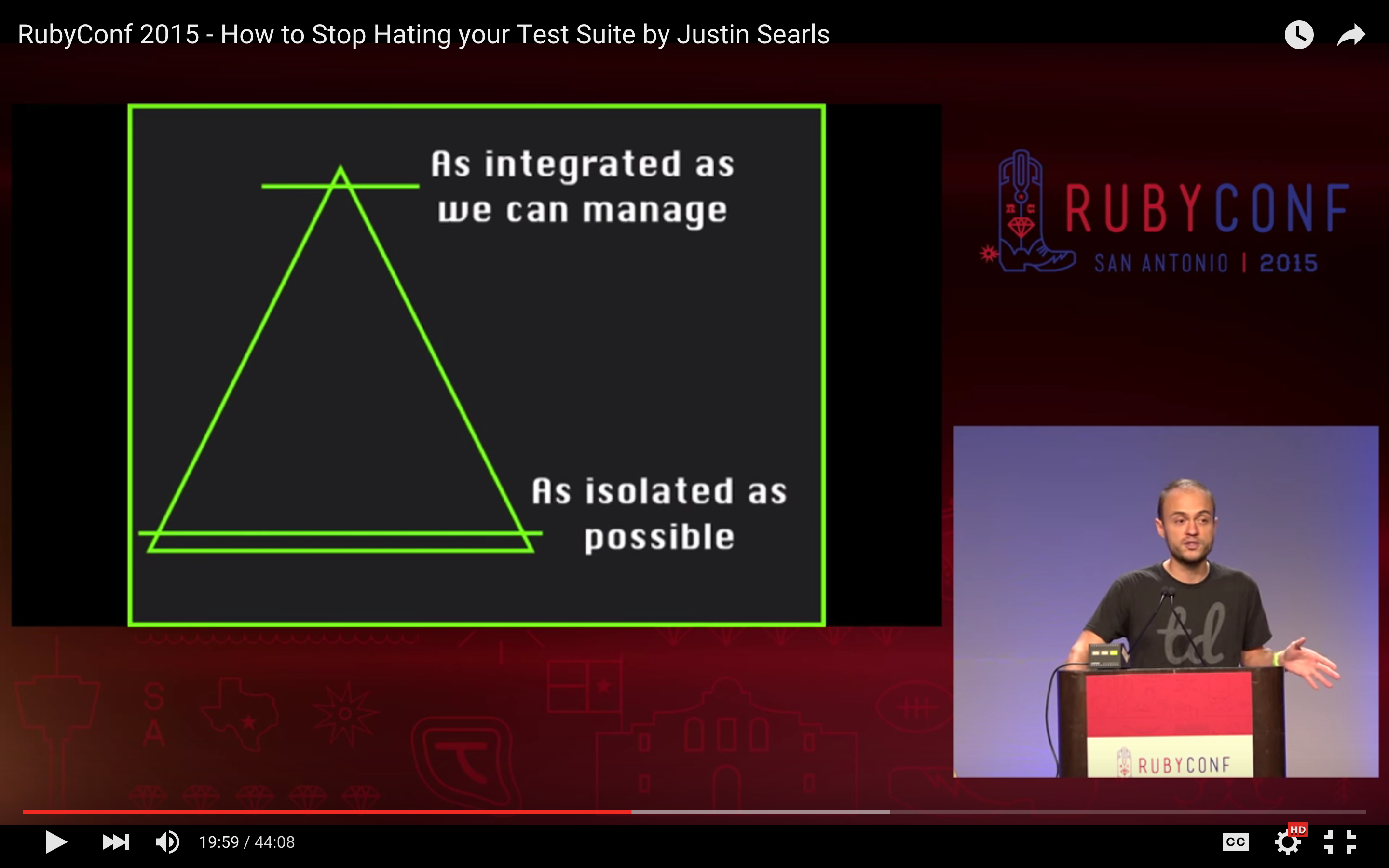

You can still maintain the testing pyramid and reap all the benefits of Justin’s presentation if you start with acceptance tests that drive the development of your app at the domain layer, then build a UI on top and write a handful of UI-based tests.

The testing pyramid came from measuring the proportions of each test type relative to one another. A direct correlation was observed between testing strategies whose proportions did not form a pyramid and strategies that were painful, difficult to maintain and slow. The testing pyramid is therefore a guideline based on the symptoms of test strategies; at a glance, it doesn’t tell you much about the cause.

Justin’s presentation is awesome at explaining the cause, and he is 100% correct in everything he said. In particular, he talks about what frameworks provide, and how integration testing is tightly coupled to the infrastructure a framework provides: in Rails that may be Active Record, and in Meteor it would be Pub/Sub. He argues that testing at this level duplicates unit testing for very little gain, if any. In that respect he’s right: it is pointless, and it’s better to trust that the framework is doing its job. Of course, unit tests alone are not enough, so he says you should absolutely have some end-to-end (e2e) tests that make sure the whole thing works.

It’s what Justin didn’t say that I find most interesting! In my opinion, he has left out the domain. All of the tiered application architectures describe some sort of domain layer (AKA a service layer) that handles business or domain logic. This layer uses the framework infrastructure as a means to an end: creating value for the end consumer (a user or another system).

The domain consists of models that abstract the real-world business domain into concepts and entities, and of the functions (or services) that transform those models according to the business logic in response to interactions from users or systems. You are already doing this with your functional programming approach, which is awesome! What I love about document-based DBs like Mongo is that they usually model the domain nicely without requiring an ORM, but I digress!
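To make the domain idea concrete, here is a minimal TypeScript sketch of a hypothetical to-do domain. The `Todo` and `TodoList` types and the `addTodo`, `completeTodo` and `openTodos` functions are made up for illustration; they are not from your code or from Meteor. The model is plain data, and each business operation is a pure function that returns a new model instead of mutating the old one, so nothing here depends on a framework or a database.

```typescript
// Hypothetical to-do domain: plain data types, no ORM or framework imports.
interface Todo {
  readonly id: number;
  readonly title: string;
  readonly done: boolean;
}

interface TodoList {
  readonly todos: readonly Todo[];
  readonly nextId: number;
}

const emptyList = (): TodoList => ({ todos: [], nextId: 1 });

// Pure transformation: returns a new list and leaves the input untouched.
function addTodo(list: TodoList, title: string): TodoList {
  const trimmed = title.trim();
  if (trimmed === "") {
    // A business rule, enforced in the domain rather than in the UI.
    throw new Error("A todo needs a title");
  }
  return {
    todos: [...list.todos, { id: list.nextId, title: trimmed, done: false }],
    nextId: list.nextId + 1,
  };
}

// Marks the todo with the given id as done; other todos are copied unchanged.
function completeTodo(list: TodoList, id: number): TodoList {
  return {
    ...list,
    todos: list.todos.map((t) => (t.id === id ? { ...t, done: true } : t)),
  };
}

// A query over the model: the todos that still need doing.
function openTodos(list: TodoList): Todo[] {
  return list.todos.filter((t) => !t.done);
}
```

Because the functions are pure, a business rule such as “a todo needs a title” can be exercised without spinning up Mongo or a browser.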

If you imagine an application with a completely speech-based UI, you can picture simply talking to the domain and having it respond to you. The UI is therefore a “joystick” for controlling the domain (as Konstantin mentions in his talk). The value of the application comes from using the domain, and the UI is just one way for you to get at that value.
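Here is a rough sketch of the “joystick” idea, assuming a hypothetical `TodoService` and two made-up front ends: a speech handler that only understands the phrase `add <something>`, and a form-submit handler. Both are thin adapters over the same domain call, so the business rule lives in one place whichever UI is used.

```typescript
// Hypothetical domain service: the only place that knows the business rules.
class TodoService {
  private readonly titles: string[] = [];

  add(title: string): void {
    const trimmed = title.trim();
    if (trimmed === "") throw new Error("A todo needs a title");
    this.titles.push(trimmed);
  }

  list(): readonly string[] {
    return [...this.titles];
  }
}

// "Joystick" 1: a speech UI that turns an utterance into a domain call.
// The "add ..." phrase format is a made-up convention for this sketch.
function handleUtterance(service: TodoService, utterance: string): string {
  const match = /^add (.+)$/i.exec(utterance.trim());
  const title = match?.[1];
  if (title === undefined) return "Sorry, I only understand 'add <something>'.";
  service.add(title);
  return `You have ${service.list().length} todo(s).`;
}

// "Joystick" 2: a form submit handler in a graphical UI, calling the same domain.
function handleFormSubmit(service: TodoService, inputValue: string): void {
  service.add(inputValue);
}
```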

Acceptance testing is about making sure the service layer is using the domain entities and that the infrastructure provided by the framework is working. This is much more than the infrastructure and configuration testing that Justin advises against. Konstantin covers this in the same talk, where he refers to the infrastructure leakage that occurs when you try to model more than the domain.
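A minimal sketch of an acceptance test at the service layer, under a few assumptions: `TodoService`, `TodoRepository` and `InMemoryTodoRepository` are hypothetical names, and the repository interface stands in for whatever infrastructure your framework provides (a Mongo-backed adapter would implement the same interface, but it is not shown). The test reads as a business scenario rather than a sequence of clicks.

```typescript
interface Todo {
  id: number;
  title: string;
  done: boolean;
}

// Port: what the service layer needs from infrastructure.
// A production adapter might wrap a framework collection; it is not shown here.
interface TodoRepository {
  save(todo: Todo): void;
  findById(id: number): Todo | undefined;
  all(): Todo[];
}

// In-memory adapter for fast acceptance tests. Copies on the way in and out
// so callers cannot mutate stored state behind the service's back.
class InMemoryTodoRepository implements TodoRepository {
  private readonly byId = new Map<number, Todo>();

  save(todo: Todo): void {
    this.byId.set(todo.id, { ...todo });
  }

  findById(id: number): Todo | undefined {
    const todo = this.byId.get(id);
    return todo ? { ...todo } : undefined;
  }

  all(): Todo[] {
    return Array.from(this.byId.values()).map((t) => ({ ...t }));
  }
}

// Service layer: applies the business rules and talks to infrastructure via the port.
class TodoService {
  private readonly repo: TodoRepository;
  private nextId = 1;

  constructor(repo: TodoRepository) {
    this.repo = repo;
  }

  add(title: string): number {
    const trimmed = title.trim();
    if (trimmed === "") throw new Error("A todo needs a title");
    const id = this.nextId++;
    this.repo.save({ id, title: trimmed, done: false });
    return id;
  }

  complete(id: number): void {
    const todo = this.repo.findById(id);
    if (!todo) throw new Error(`No todo with id ${id}`);
    this.repo.save({ ...todo, done: true });
  }

  remaining(): string[] {
    return this.repo.all().filter((t) => !t.done).map((t) => t.title);
  }
}

// Acceptance test phrased in domain language, not in clicks and selectors.
function completingATodoRemovesItFromTheRemainingList(): void {
  const service = new TodoService(new InMemoryTodoRepository());
  const milk = service.add("Buy milk");
  service.add("Walk the dog");

  service.complete(milk);

  const remaining = service.remaining();
  if (remaining.join(",") !== "Walk the dog") {
    throw new Error(`Expected only "Walk the dog", got ${JSON.stringify(remaining)}`);
  }
}

completingATodoRemovesItFromTheRemainingList();
```

In principle, running the same scenario with the real adapter instead of the in-memory one checks that the framework’s infrastructure is wired up, without restating the framework’s own tests.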

On the subject of tools being much better these days, and on your approach of using UI text as the locator strategy instead of the more brittle CSS and ID selectors: these are 100% awesome practices to be employing, and they do reduce flakiness. Ultimately, though, what you’re doing is testing your domain through the UI. This will work out for you, especially if you’re not on a huge team (in a huge team, a shared understanding of what the tests are doing is harder to communicate). Typically, tests that run through the UI and click a few buttons don’t tell you WHAT an app is doing; they tell you HOW it’s doing it. This creates a translation cost for the next developer who picks them up, whereas the domain language does not have this cost. So there is more to UI testing than flakiness.

I was a HUGE proponent of outside-in testing until quite recently, when I discovered there’s a much better way. When creating new features, start the discovery process at the domain, and once the domain is fully covered, paint the UI on top. Using some polymorphism, the exact same acceptance tests can have a UI-based version that actually goes through the UI. You end up with a triangle rather than an hourglass.
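The polymorphism trick might look something like this rough sketch. Everything in it is hypothetical: a `TodoDriver` interface, a `DomainDriver` that calls the domain directly, and a `UiDriver` that goes through a `FakeScreen`. The fake screen stands in for a real browser automation tool and only mimics locating controls by their visible text, so the sketch runs without any external library. The acceptance test itself is written once, against the interface.

```typescript
// Minimal hypothetical domain service (kept tiny so this sketch stands alone).
class TodoService {
  private readonly titles: string[] = [];
  add(title: string): void {
    if (title.trim() === "") throw new Error("A todo needs a title");
    this.titles.push(title.trim());
  }
  list(): string[] {
    return [...this.titles];
  }
}

// The acceptance test talks to this interface, in domain language.
interface TodoDriver {
  addTodo(title: string): void;
  visibleTodos(): string[];
}

// Driver 1: talks straight to the domain. Fast, no UI involved.
class DomainDriver implements TodoDriver {
  private readonly service = new TodoService();
  addTodo(title: string): void {
    this.service.add(title);
  }
  visibleTodos(): string[] {
    return this.service.list();
  }
}

// Stand-in for a rendered screen: controls are found by their visible text.
// A real UI driver would use a browser automation tool and read the DOM instead.
class FakeScreen {
  private inputValue = "";
  private readonly service: TodoService;
  constructor(service: TodoService) {
    this.service = service;
  }
  typeInto(label: string, text: string): void {
    if (label !== "New todo") throw new Error(`No field labelled "${label}"`);
    this.inputValue = text;
  }
  click(buttonText: string): void {
    if (buttonText !== "Add") throw new Error(`No button with text "${buttonText}"`);
    this.service.add(this.inputValue);
    this.inputValue = "";
  }
  listItemsText(): string[] {
    return this.service.list();
  }
}

// Driver 2: same interface, but every step goes through the (fake) UI.
class UiDriver implements TodoDriver {
  private readonly screen = new FakeScreen(new TodoService());
  addTodo(title: string): void {
    this.screen.typeInto("New todo", title);
    this.screen.click("Add");
  }
  visibleTodos(): string[] {
    return this.screen.listItemsText();
  }
}

// One acceptance test, written once, run against either driver.
function addedTodosAreListedInOrder(driver: TodoDriver): void {
  driver.addTodo("Buy milk");
  driver.addTodo("Walk the dog");
  const todos = driver.visibleTodos();
  if (todos.join(",") !== "Buy milk,Walk the dog") {
    throw new Error(`Unexpected todos: ${JSON.stringify(todos)}`);
  }
}

const drivers: TodoDriver[] = [new DomainDriver(), new UiDriver()];
for (const driver of drivers) {
  addedTodosAreListedInOrder(driver);
}
```

In practice you would run every scenario through the domain driver and only a handful through the UI driver, which is what keeps the overall shape a triangle.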

and I totally get it. I just think this kind of ranting about gender/tech belongs in its own post, as it has nothing to do with the stated topic.