I followed this thread, and it wasn't clear to me whether you actually needed pub-sub until you mentioned it.

Your aggregations may be slow for two reasons: missing or wrong indexes, and/or no limits on your joined DB queries in one-to-many or many-to-many relationships.

For Orders and Users, the link would probably be userId (Orders) to _id (Users). _id is indexed by default, so this particular aggregation would be lightning fast. Seen from the order, this is a one-to-one relationship: each order points to exactly one user. Going the other way around, things change dramatically.
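To make the Order → User direction concrete, here is a minimal sketch of that join as an aggregation pipeline built as plain objects. The collection and field names (`orders`, `users`, `userId`, `total`, `createdAt`, `displayName`, `avatar`) are assumptions for illustration, not taken from your schema.

```typescript
// Sketch: attach the owning user to each order via $lookup.
// Assumed names: orders { _id, userId, total, createdAt }, users { _id, displayName, avatar }.

type Stage = Record<string, unknown>;

function ordersWithUserPipeline(orderIds: string[]): Stage[] {
  return [
    { $match: { _id: { $in: orderIds } } },
    {
      // The foreign key is users._id, which MongoDB always indexes,
      // so each lookup is an index hit rather than a collection scan.
      $lookup: {
        from: "users",
        localField: "userId",
        foreignField: "_id",
        as: "user",
      },
    },
    // Each order has one user, so unwrap the one-element array.
    // Orders whose user no longer exists are kept (without a user field).
    { $unwind: { path: "$user", preserveNullAndEmptyArrays: true } },
    // Ship only the user fields the UI actually needs.
    { $project: { total: 1, createdAt: 1, "user.displayName": 1, "user.avatar": 1 } },
  ];
}
```

On the server in Meteor 3, a pipeline like this could be run with something like `await Orders.rawCollection().aggregate(pipeline).toArray()`, where `Orders` is your (assumed) collection.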

“Give me all the orders of a user”. This is a one-to-many relationship, and this is where things can get slow if you don’t have an index on userId (in the Orders collection) and if you don’t have a limit (and/or pagination). Scanning through millions of orders, yes… will be costly.
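A minimal sketch of the bounded version of “all the orders of a user”, assuming an `orders` collection with `userId` and `createdAt` fields. The compound index serves both the filter and the sort; the page size cap of 100 and the keyset-style `before` cursor are illustrative choices of mine (keyset pagination is one option; skip/limit also works for shallow pages).

```typescript
// Sketch: index-friendly, bounded "orders of a user" query.
// Assumed schema: orders { _id, userId, createdAt: Date, total }.

type Stage = Record<string, unknown>;

// Compound index covering the filter (userId) and the sort (createdAt desc).
// It could be created once, e.g. via Orders.rawCollection().createIndex(ORDERS_BY_USER_INDEX).
const ORDERS_BY_USER_INDEX = { userId: 1, createdAt: -1 } as const;

const MAX_PAGE_SIZE = 100; // hypothetical cap so a client cannot ask for millions of rows

function userOrdersPage(userId: string, pageSize: number, before?: Date): Stage[] {
  const limit = Math.min(Math.max(1, Math.floor(pageSize)), MAX_PAGE_SIZE);
  const match: Record<string, unknown> = { userId };
  // Keyset pagination: continue strictly after the oldest order of the previous page.
  // Assumes createdAt is distinct enough; add _id as a tie-breaker if it is not.
  if (before) match.createdAt = { $lt: before };
  return [
    { $match: match },
    { $sort: { createdAt: -1 } },
    { $limit: limit },
  ];
}
```

The client passes the `createdAt` of the last order it received as `before` to get the next page, so the server never has to skip over rows it already returned.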

The way I do it, inspired by platforms such as Facebook or Telegram, is to store a lot of data on the client.

You may ask this in Perplexity or some other connected AI: “What does Facebook store in IndexedDB in the browser, under ArrayBuffers?”. You can also open FB and check IndexedDB in the browser to see the massive amount of data they store in the browser itself. Even if you used reactivity for the Orders, your users’ display names and avatars would be better stored (and persisted) in the browser rather than in Minimongo.

The next step would be data management in the browser. For instance, if you don’t have the user(s) for an order locally, fetch them with a method and add them to the local DB in the browser. You can expire data and re-fetch it once in a while, so that avatar/display name updates eventually reach the client.
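The “fetch what’s missing, cache it, expire it” step can be sketched like this. A `Map` stands in for IndexedDB so the example runs anywhere, and the fetcher stands in for a Meteor method call (for example `Meteor.callAsync("users.getByIds", ids)`, where `users.getByIds` is a hypothetical method name). The class name, TTL and field names are all assumptions.

```typescript
// Sketch of a client-side user cache with expiry.
// A Map stands in for IndexedDB; the injected fetcher stands in for a Meteor method call.

interface UserSummary {
  _id: string;
  displayName: string;
  avatar: string;
}

interface Entry {
  user: UserSummary;
  fetchedAt: number; // ms timestamp of the last fetch for this user
}

type Fetcher = (ids: string[]) => Promise<UserSummary[]>;

class UserCache {
  private entries = new Map<string, Entry>();
  private fetchUsers: Fetcher;
  private ttlMs: number; // after this long a cached user is considered stale
  private now: () => number;

  constructor(fetchUsers: Fetcher, ttlMs: number, now: () => number = Date.now) {
    this.fetchUsers = fetchUsers;
    this.ttlMs = ttlMs;
    this.now = now;
  }

  // Return users for the given ids, fetching only missing or stale ones, in one batch.
  async getMany(ids: string[]): Promise<UserSummary[]> {
    const unique = Array.from(new Set(ids));
    const t = this.now();
    const toFetch = unique.filter((id) => {
      const e = this.entries.get(id);
      return !e || t - e.fetchedAt > this.ttlMs;
    });
    if (toFetch.length > 0) {
      const fetched = await this.fetchUsers(toFetch);
      for (const user of fetched) {
        this.entries.set(user._id, { user, fetchedAt: t });
      }
    }
    // Ids the server did not return (e.g. deleted users) are skipped.
    return unique
      .map((id) => this.entries.get(id)?.user)
      .filter((u): u is UserSummary => u !== undefined);
  }
}
```

Batching the missing ids into one call keeps a list of 50 orders from turning into 50 method calls; persisting the entries to IndexedDB instead of a `Map` is what would let them survive a page reload.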

MongoDB works great with aggregations when they are done right and optimised for the size of the MongoDB server you use. If you are on Atlas, keep an eye on the dashboards, as Atlas will suggest query optimisations where things don’t look great.

There is a point where you might need to leave MongoDB for a SQL-type database. That point might come, for instance, when you need a relationship such as one-to-many-to-many. For example: “give me all the recent posts of my friends”. Not all my friends have new posts, and I need to sort the posts, not my friends, so I would have to query for something like “give me the 10 most recent posts where userId is in […5000 ids …]”. The more friends I have, the more performance degrades.
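Here is a sketch of that friends-feed query as a pipeline, to show where the cost comes from. The `posts` schema and the `{ userId: 1, createdAt: -1 }` index are assumptions; the friend list is passed in, and how it is loaded is out of scope.

```typescript
// Sketch: "the 10 most recent posts of my friends".
// Assumed schema: posts { _id, userId, createdAt: Date, text },
// with an assumed index { userId: 1, createdAt: -1 }.

type Stage = Record<string, unknown>;

function friendsFeedPipeline(friendIds: string[], limit = 10): Stage[] {
  return [
    // With 5,000 friends this $in holds 5,000 values: the server has to consider
    // each friend's slice of the index, so the work grows with the friend count.
    { $match: { userId: { $in: friendIds } } },
    // We sort posts, not friends: newest posts across all friends first.
    { $sort: { createdAt: -1 } },
    { $limit: limit },
  ];
}
```

The `$limit` keeps the result small, but it does not shrink the `$in` list, which is why this query degrades as the friend list grows.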

To make it even worse, I would also want to bring, for each post, the 3 most recent comments (a one-to-many relationship deep inside the one-to-many-to-many relationship in my aggregation).
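A sketch of the nested “3 most recent comments per post” step, assuming a `comments` collection with `postId` and `createdAt` fields. It uses `$lookup` with `localField`/`foreignField` plus a sub-`pipeline` (the concise correlated form, available since MongoDB 5.0); the key design choice is placing the lookup after `$limit`, so it runs for 10 posts rather than for every matching post.

```typescript
// Sketch: friends' feed plus the 3 most recent comments per post.
// Assumed schema: posts { _id, userId, createdAt }, comments { _id, postId, createdAt, text }.
// Requires MongoDB 5.0+ for $lookup with localField/foreignField and a pipeline together.

type Stage = Record<string, unknown>;

function feedWithCommentsPipeline(
  friendIds: string[],
  postLimit = 10,
  commentsPerPost = 3,
): Stage[] {
  return [
    { $match: { userId: { $in: friendIds } } },
    { $sort: { createdAt: -1 } },
    // Limit first, so the nested lookup below only runs for the posts we return.
    { $limit: postLimit },
    {
      $lookup: {
        from: "comments",
        localField: "_id",
        foreignField: "postId",
        // Per post: newest comments first, capped at commentsPerPost.
        pipeline: [{ $sort: { createdAt: -1 } }, { $limit: commentsPerPost }],
        as: "recentComments",
      },
    },
  ];
}
```

An index on `{ postId: 1, createdAt: -1 }` in `comments` (again an assumption about your schema) would let each of those per-post lookups read just a few index entries.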

Just to close my story here: in a one-to-one relationship (Order to User), it would be really, really hard to run into any performance issue with MongoDB.

If you have used Grapher, aggregations or Mongoose before, thinking in relations/data structures comes naturally, and sometimes a pencil and a piece of paper help a lot. This is where Meteor Grapher defines its relationships: grapher/lib/links/linkTypes at master · cult-of-coders/grapher · GitHub

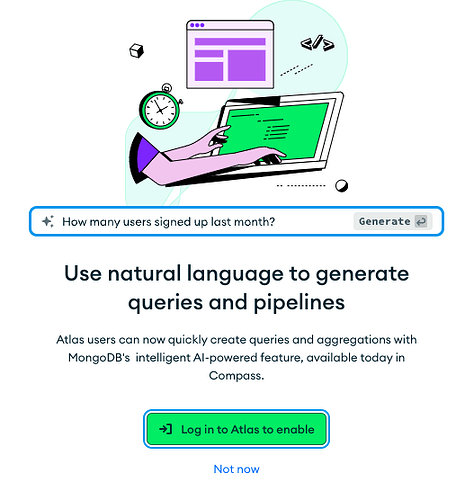

It is clear and well-documented, but partly because of the syntax I had to use in the code, among other reasons, I abandoned Grapher (after upgrading it for Meteor 3) in favor of direct aggregations. MongoDB Compass has a great visual aggregation pipeline builder, and you can also use natural language to start building your queries.