Motivation

After the Meteor 3 launch, we planned our next steps, and our roadmap is public. Before introducing new features or packages to the framework, we will focus on quality checks. Alongside the new Meteor patches, we are addressing issues and starting to measure Meteor 3’s performance following this major update.

To measure performance effectively, we need a suite that tests the same behaviors in both Meteor 2 and 3. This will help us identify performance regressions and ensure future versions maintain or improve performance. This tool is new for us, and combined with our other performance measurement plans, it should help us catch any decline in performance before it goes unnoticed.

Since Meteor 3.0.1, we have been developing a tool for basic performance measurement, meteor/performance. This repository also serves as inspiration for adapting scripts and performing performance analysis on your own applications.

Methodology

We use Artillery to apply configurable stress tests on our test machine and Playwright to simulate interactions with Meteor apps on every initiated connection.

Our testing follows an incremental approach, starting with a simple setup before progressing to real app scenarios. Addressing issues in simpler cases allows us to tackle isolated performance problems, which often leads to improvements in more complex scenarios because they rely on the same primitives. We have observed that performance regressions related to reactivity handling appear even in a basic setup.

Our simple setup involves two apps, tasks-2.x and tasks-3.x, that:

- Create 20 connection-scoped tasks via a button and a Meteor method.

- Remove each of the 20 tasks one by one via a button and a Meteor method.

- Display all tasks:

  - Using one Meteor subscription (reactive scenario)

  - Using one Meteor method that fetches them with each action (non-reactive scenario)

During stress testing, we sized the load to the capacity of the test machine: 240 connections were established within one minute, averaging 4 new connections per second. Each connection ran the specified processes (adding, removing, and fetching tasks, in both reactive and non-reactive modes) alongside all other connections, which pushed the machine to overload.
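The load shape described above can be sketched as a small calculation. This is only an illustration: the `LoadPhase` type and the `phaseFor` helper are hypothetical names, and the `duration` and `arrivalRate` fields simply mirror the options Artillery uses for a load phase. The actual configuration lives in the meteor/performance repository and may differ.

```typescript
// Hypothetical shape of one load phase. The field names mirror Artillery's
// `duration` and `arrivalRate` phase options; this is not the real config.
interface LoadPhase {
  duration: number;    // length of the phase, in seconds
  arrivalRate: number; // new connections (virtual users) started per second
}

// Derive a constant-rate phase from a total connection count and a time window.
function phaseFor(totalConnections: number, windowSeconds: number): LoadPhase {
  if (windowSeconds <= 0) {
    throw new RangeError("windowSeconds must be positive");
  }
  return {
    duration: windowSeconds,
    arrivalRate: totalConnections / windowSeconds,
  };
}

// 240 connections within one minute, as in the stress test described above.
const stressPhase = phaseFor(240, 60);
console.log(stressPhase); // { duration: 60, arrivalRate: 4 }
```

Keeping the total and the window as inputs makes it easy to scale the same test down for a smaller machine without changing anything else.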

Results

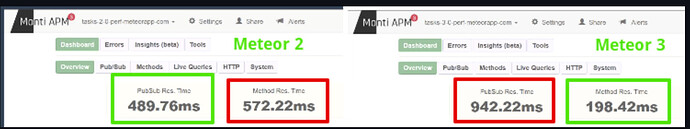

Our results indicate that:

- In the non-reactive scenario, Meteor 3 is on average ~28% faster and uses ~10% less CPU and ~16% less RAM. It handled all 240 connections smoothly.

- In the reactive scenario, Meteor 3 takes about the same time on average and uses ~18% less CPU but ~10% more RAM. However, it supports fewer connections per minute (180, or 3 per second), indicating a performance regression.
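To put the reactive capacity gap in the same terms as the other figures, the two connection counts (240 per minute for Meteor 2, 180 for Meteor 3) can be expressed as a relative change. The helper below is a hypothetical illustration, not part of the benchmark tooling.

```typescript
// Relative change of `measured` against `baseline`, in percent.
// Negative values mean the measured figure is lower than the baseline.
function relativeChangePercent(baseline: number, measured: number): number {
  if (baseline === 0) {
    throw new RangeError("baseline must be non-zero");
  }
  return ((measured - baseline) * 100) / baseline;
}

// Connections per minute sustained in the reactive scenario.
const meteor2Connections = 240;
const meteor3Connections = 180;

const capacityChange = relativeChangePercent(meteor2Connections, meteor3Connections);
console.log(`${capacityChange}%`); // -25%
```

In other words, under these test conditions Meteor 3 sustained 25% fewer connections per minute than Meteor 2 in reactive mode.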

For more details on performance setup, machine configuration, and load options, visit the report at benchmarks/meteor2.16-vs-3.0.1

The good news is that for most processes in Meteor 3 apps, you can expect faster performance and lower resource consumption, particularly in non-reactive scenarios. This is likely due to the Node upgrade.

The bad news is a specific regression in processes that involve live data mechanisms: Meteor 3 supported fewer connections in reactive mode. This case appears to be poorly handled, and we are committed to fixing it so that Meteor 3 reaches at least 240 connections per minute (4 per second) on the testing machine, matching Meteor 2.

With the Meteor 3.0.3 release, we continued our analysis to understand the regression. We found that disabling compression can benefit both Meteor 2 and 3, particularly Meteor 3, by reducing container stress and allowing more connections per minute, aligning with Meteor 2 performance. However, while this helps, it doesn’t address the root cause of the regression, so further research and fixes are needed.

More details on how to benefit from disabling compression can be found at benchmarks/meteor2.16-vs-3.0.3-disable-compression.

Hypothesis on the Challenge

Further analysis of APM metrics indicates that the issue in Meteor 3 could be due to event loop saturation, high async resource usage, and more frequent garbage collector activity. This can be more problematic for apps that rely heavily on reactivity and on advanced packages to manage it, such as publish-composite.
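If you want a rough check for event loop saturation in your own app, one generic technique is to schedule a timer and measure how late it fires. The sketch below is not the tooling behind this analysis, which relied on APM metrics; `startLagSampler` and `summarizeLag` are hypothetical names, and the approach assumes that timer drift is a reasonable proxy for a busy event loop.

```typescript
// Start sampling event loop lag: each timer records how many milliseconds
// later than scheduled it actually ran. Returns a function that stops sampling.
function startLagSampler(
  intervalMs: number,
  onSample: (lagMs: number) => void
): () => void {
  let stopped = false;
  let expected = Date.now() + intervalMs;

  const tick = (): void => {
    if (stopped) return;
    const now = Date.now();
    onSample(Math.max(0, now - expected)); // lateness of this timer
    expected = now + intervalMs;
    setTimeout(tick, intervalMs);
  };

  setTimeout(tick, intervalMs);
  return () => {
    stopped = true;
  };
}

// Reduce collected samples to a mean and a worst case, both in milliseconds.
function summarizeLag(samples: number[]): { mean: number; max: number } {
  if (samples.length === 0) return { mean: 0, max: 0 };
  const total = samples.reduce((sum, lag) => sum + lag, 0);
  return { mean: total / samples.length, max: Math.max(...samples) };
}
```

A typical use would be to call `startLagSampler(100, ...)` on the server while a load test runs, push each sample into an array, then call the returned stop function and pass the array to `summarizeLag`. Lag that grows steadily as connections ramp up would be consistent with a saturated event loop, though it does not by itself identify the cause.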

We will keep investigating this problem to gather more information and explore potential solutions.

Call to Action

We encourage Meteor developers to share feedback with us on their application performance in Meteor 3.

- Have you noticed a regression in your reactive data?

- Do you use high-intensity reactive libraries like publish-composite?

- Do you use redis-oplog?

We are seeking more scenarios from your applications to analyze. Some of you have already reached out to us privately about your issues. We are working to identify improvements and the core fix, and we will continue to gather information and reports to better define the problem.