Well firstly I’d open up a new thread about it. Might get more responses to

the specific issue at hand.

Re-read the post. I see what you’re saying, login services takes forever to respond.

If it were me, I’d try meteor create version2 and import all my stuff. See if that helps with fresh packages.

I know after a while you end up with more crap thrown in a project than you realize. Start with a fresh cup of coffee and fresh project. See if that helps.

#Debugging…

I’m still wondering if this issue in this thread https://github.com/meteor/meteor/issues/4559 is my problem. This is strange because my app doesn’t even require the user to login. I don’t have any of the accounts packages added.

EDIT: Looks like I am using accounts-base@1.2.2 Going to look into removing this and see if my app still works as I’m not explicitly using any of the other accounts packages.

Interesting. I’ve also seen methods waiting on login a lot, but it was

usually part of a bigger issue somewhere else where the entire database was

being held up by a missing index.

BTW, why don’t you just update the entire app to 1.5? What’s holding you

back?

TL;DR: OPLOG and/or Meteor poll and diff is the issue. Will integrate redis-oplog in three weeks.

After a lot of thread-reading and Kadira metrics analysis, I think I figured out what the issue is… it’s the classic circa-2015 “OPLOG clogging everything up after about thirty users issue”. And if by any chance it’s not the OPLOG, then it’s the Meteor poll and diff that’s getting me. Sadly, it’s taken me forever to realize I’m having a problem with something that was really well known for the last two years.

I finally found some really helpful threads and realized my app was doing “high velocity” writes. All my users send an update at almost the exact same time, what’s more, they’re all updating the same document. I found a post by MDG about how Meteor 1.3 has some new tools for disabling the OPLOG and how a large number of writes on the same object can create a hotspot without maxing out the CPU which was a phenomenon I was seeing. The following pages were really helpful:

OPLOG: A TAIL OF WONDER AND WOE (Very similar to my use-case)

Meteor OPLOG flooding (Great forum thread)

Tuning Meteor Mongo Livedata for Scalability

My experience hitting limits on Meteor performance (Also similar to my use-case)

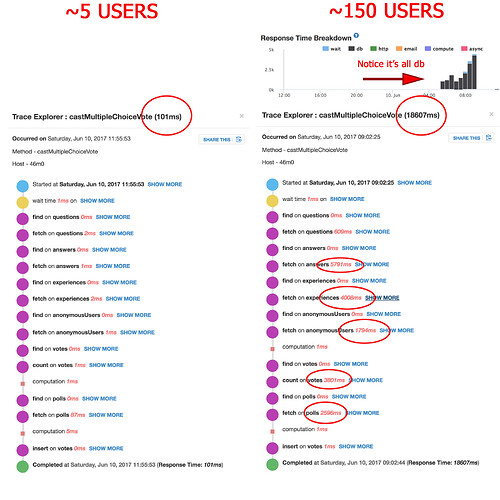

I did a “deep dive” into my Meteor APM (Kadira) data for this latest server slowness and I started noticing a lot of funny things. I found stack traces where meteor_autoupdate_clientVersions was doing a bunch of observeChanges that were really slow. A lot of observeChanges in my app were fine with only a few users but once more than twenty or thirty logged in, the same observeChanges slow to a crawl.

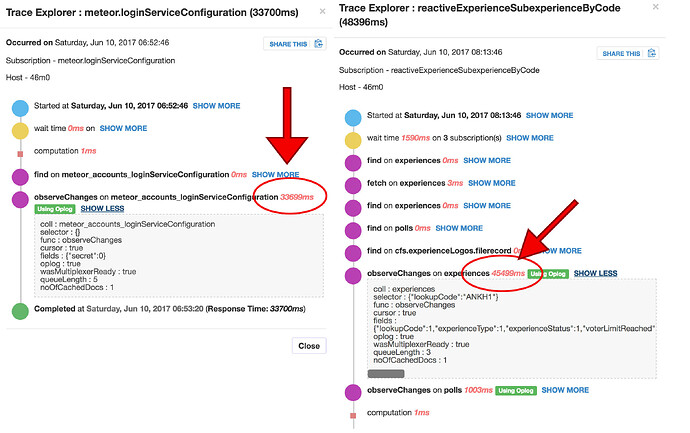

The issue with meteor_autoupdate_clientVersions and meteor.loginServiceConfiguration having long wait times is indeed as I suspected merely a side-effect. If I understand correctly, both of those calls are synchronous on each client… they don’t use this.unblock(). So if my app is loaded up with 150 users and the OPLOG is clogged to all hell and back, those new users who log in get stuck waiting on meteor_autoupdate_clientVersions and meteor.loginServiceConfiguration which is in alignment with what my users were seeing in the app. And there’s always new users logging into my app because my app is very bursty - i.e. it goes from 5 users to 150 or more in about a minute.

Another huge gotcha I discovered is my admin app was actually creating/getting a ton of OPLOG traffic from my non-reactive mobile-client app. My app is actually two apps. An admin app and an end-user mobile-client app, both connecting to the same database. In the mobile-client app, I flame-thrower’d all the reactivity out of it (per my input above) and didn’t understand why the OPLOG was still killing me… well, I didn’t remove any reactivity out of the admin app because there’s only ever maybe five users at any time using the admin app. However, if my assumptions are correct, those five users on the admin app are all processing the reactivity of my 150 mobile-client users. So all those interactions all happening at the exact same time on the exact same object are being multiplied by however many admin users I have. And also still a little bit on my mobile-client app because I had to leave a little bit of reactivity in it. So this was something I didn’t consider… that Meteor was still having to process the OPLOG for my admin app users.

Another weird little gotcha is MongoDB shows no slow queries. Which can’t be true because Meteor APM (Kadira) is showing me all kinds of find() and fetch() calls (i.e. a Meteor findOne()) that are taking 3000+ ms. I checked my slow queries on my PRIMARY mongo node and it showed only about 19 slow queries, each no more than 200 ms, all from several months ago… weird. I checked my SECONDARY mongo node which is what enables OPLOG and the slow queries was empty. BUT, the compose.io help team told me this table can occasionally reset itself when a resync is performed. So there’s a very good chance I’m likely not seeing my OPLOG slow queries being reported. Not sure how often a resync happens between the nodes… seems fairly often as I checked the SECONDARY for slow queries a few days after this latest case.

So now, I’m going to build some diagnostic testing tools to simulate all those users so I can create this storm of activity at any time on my own. Then I’m going to disable the OPLOG and see if I still have this issues without it. If so, then I’ll have to figure out what to do about that. If not, I’ll very likely try to start integrating redis-oplog which makes me very happy because I removed a lot of cool interactivity trying to solve these issues. It would be great to get it back. My only other option is to try some of the Meteor 1.3 disableOplog options on publications (I’m still on Meteor 1.2.1). I’m sure those would do the trick, but then again, I’m losing some responsiveness. In about three weeks I’m going to jump on this full-time and will report back my findings and results.

So this still could very well be a “Mongo Scaling Issue”… more to do with the OPLOG, but still Mongo involved also.

@elie I haven’t upgraded yet because this is for a production app. In the past, whenever I upgrade I always have a package or two that breaks. That was when I was upgrading right away, so now I’ll probably be okay. But again, with a production app, you have to have the time to test and work out any issues, which at the moment I’m short on. I’ll upgrade to 1.5 hopefully in about three weeks.

You should search the forum here, I did an EXTENSIVE test of Oplog for my MMO game.

Oplog is a REQUIRED Meteor methodology. At least it should be. There should be warnings in a billion places while putting it on production.

Very short sited by Meteor, but they’ll probably have a Galaxy based solution in the future.

Yeah I kinda did search the forum… a lot. That was the point in linking all those threads.

What did you use for testing OPLOG? How did you simulate users?

Just because Kadira is showing you a slow query doesn’t mean it’s happening on the mongodb end. From the graphs above you’ve only shown long wait times. I haven’t seen a slow response from the database which you can view in kadira.

And I don’t know what you’re expecting to see on the secondary node. You said yourself that no slow queries have been reported for months. So it could have been reset months ago. Not days ago. But this makes it sound like there’s some misunderstanding around what’s happening with oplog.

The problems with oplog are on the meteor end. That your app has to process a long list of operations coming in from mongodb. If you’re doing observes and have to check each item coming in then that can eat all your cpu if you’re observing a lot of data. Publications will also be observing data.

I’m on mobile and haven’t reread the whole thread over. But are you horizontal scaling? 150 users per instance is the upper limit. I’d try running three instances and seeing what happens.

And upgrade from 1.2. Open a new git branch and try it and check if all works smoothly locally. Push it to the admin side first if you’d like. Then try production. Not sure what the worst that can happen is. Sounds like people are having problems as is anyway.

You mean like this:

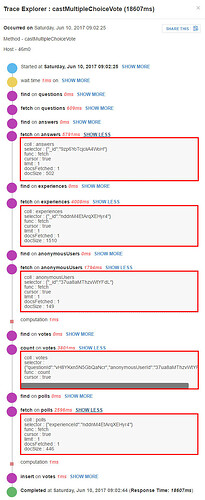

This is one of my Meteor methods that bogs down with a lot of users, but is fine with only a few. So shouldn’t this be showing up as slow queries in MongoDB?

The latest slow incident that happened was on June 10th. What I was saying was that the PRIMARY showed no new slow queries. The SECONDARY was empty for slow queries. So maybe it had been reset in the day or so after June 10th incident. So hence, no slow queries to see from the June 10th incident. I’m probably confused (I’m just an average programmer trying to make a framework work with more than thirty users ![]() ), but if the slow queries above were caused by the OPLOG, wouldn’t they be in the

), but if the slow queries above were caused by the OPLOG, wouldn’t they be in the SECONDARY table of slow queries? Doesn’t the SECONDARY feed Meteor’s OPLOG tailing?

Okay, I wasn’t sure where exactly the OPLOG problems would manifest. If it’s my app and the CPU that are bogging down, would that cause the above methods to be so slow… with the long MongoDB query times? The way I understood it was that adding OPLOG adds a lot of extra queries to Mongo which would slow down my methods (and every other part of the app involving Mongo). Especially because I’m not querying by _id in some cases which adds extra queries (I unfortunately can’t in some cases) and we’re talking about hundreds of updates from hundreds of users… are you sure adding OPLOG doesn’t add any overhead on Mongo?

As for horizontal scaling, I have two small Galaxy nodes processing the 150 mobile-client users and one small Galaxy node for the 5 or so admin users. I was trying to keep this down to test, as the mobile-client is really simple. The only thing that makes it complex is that it’s a lot of bursty users and they all send updates to the same document as the exact same time. But there’s very little Pub/Sub reactivity.

Here’s two Pub/Sub stack traces showing really slow observeChanges times on OPLOG queries… this is direct evidence of OPLOG being the problem no?

And yes, will upgrade very soon.

What happens if you click show more in Kadira for the slow db operations you have?

Can you copy and paste the indexes you’re using as well please. Also, how big many documents does your biggest collection have?

Also, with regards to your index question above, you need compound indexes as explained here:

https://docs.mongodb.com/manual/core/index-compound/

Here’s the same Meteor method call as above with “show more” clicked. FYI, I had emailed the compose.io support team in the past and asked for them to review if I had any COLLSCANS and they said they reviewed the logs and found none.

My largest collections that I query actively have document sizes like: 55093, 15221, 23337, 5316, 2077, and then smaller.

I’m 100% sure I have all my indexes covered, I have a lot of them (unless I’m doing something wrong with compound indexes). If it were my indexes that were the problem, wouldn’t my queries with only a few users be slow as well? Here’s a few of my indexes that I added in relation to the above stack trace. I’ve added no new indexes if I only query by _id:

Answers._ensureIndex({experienceId: 1});

Answers._ensureIndex({questionId: 1});

Answers._ensureIndex({userId: 1});

Answers._ensureIndex({userId: 1, experienceId: 1});

Answers._ensureIndex({userId: 1, experienceId: 1, anonymousUserId: 1});

Experiences._ensureIndex({lookupCode: 1}, {unique: 1});

Experiences._ensureIndex({userId: 1});

Votes._ensureIndex({userId: 1});

Votes._ensureIndex({experienceId: 1});

Votes._ensureIndex({questionId: 1});

Votes._ensureIndex({answerId: 1});

Votes._ensureIndex({anonymousUserId: 1});

Votes._ensureIndex({userId: 1, experienceId: 1});

Votes._ensureIndex({anonymousUserId: 1, experienceId: 1});

Votes._ensureIndex({userId: 1, experienceId: 1, anonymousUserId: 1});

Polls._ensureIndex({experienceId: 1});

Is too many indexes a problem? I’ve added one for each way I query each collection.

Too many indexes can be a problem if not everything fits into memory and

then the database has to start reading from disk.

You do have some indexes that aren’t needed because you’re using compound

indexes.

Can compose tell you what the slow queries are? Even if it’s not a coll

scan it can be a difficult query for the database if it has to look over

many items. Although not too sure that’s happening here.

Also, the items kadira told you are causing a long time, there’s no way

they are the cause of the issue. Searching for an item by id will always be

quick. The reason each of those queries is taking a long time is that

something else is happening in the database which is slowing it all down.

There is a query that is locking up the entire database if the kadira

information is correct.

If you can look at the compose logs while this is all happening you may be

able to find some additional information.

I’m at a computer now, so can explain a little more.

Almost certain that the last of those 3 compound indexes covers the first two.

Mongo can use:

Answers._ensureIndex({userId: 1, experienceId: 1, anonymousUserId: 1});

for both:

Answers._ensureIndex({userId: 1, experienceId: 1});

and

Answers._ensureIndex({userId: 1});

Also, have a look at my article here to see what you can do in real time when the problem is happening:

I had queries running for 10+ seconds:

The issue wasn’t with that specific query. The entire database was locked up because of other things happening in the db.

Thanks for the advice on the indexes… I’ll look into that. Does it help or hurt anything to remove unneeded indexes from the indexes table in Mongo if they’re redundant?

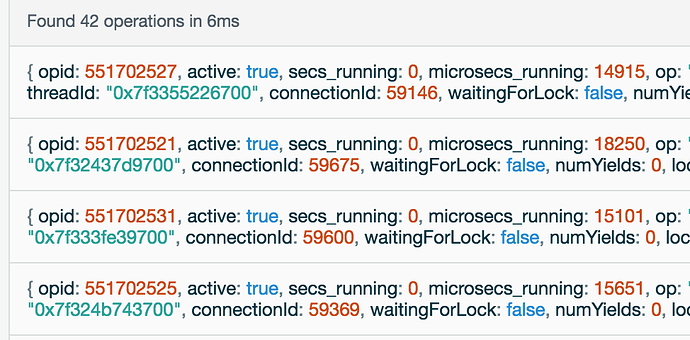

Also, I’ve read your article several times. As I mention way above in the thread, I’ve never understood how you jump from the “Current Ops” window in Compose that shows your slow queries to which actual queries are slow. The “Current Ops” window doesn’t really identify them. Mine all say op: "getmore", ns: "local.oplog.rs", query: {}.

Yes it does help to remove unneeded indexes. Each index you have takes up

more RAM. The database basically has to keep some kind of cache for each

index you have.

I don’t know if this could be your problem. You can check your ram usage.

But in general you don’t want unneeded indexes.

So, I think I’ve figured out what the issue was. Turns out the problem was my Meteor server (1 Galaxy Compact Container 0.5 ECUs) getting pegged at 100% by any burst of forty+ users. I switched my containers to Quad and cranked them up to 12 instances and had beautiful performance with hundreds of users (and can actually get away with much less containers). I think there’s still more problems and bottlenecks, but I know now it’s not Mongo because Mongo works great once I up my containers. No problem with Mongo response or Oplog. Hundreds of users logged in and were using the app in under 1 second response for all of them. I think it’s all the stuff Meteor delivers on first download of your app. I think that eats up a lot of bandwidth on the server.

The new problem is that running so many Quad Containers is too expensive. Using 1 Compact Container it only takes about forty simultaneous logins to cause my server to slow to 30+ second responses. This seems like pretty poor performance. All the above problems with Kadira and the database were likely all a side-effect of the Meteor CPU being pegged.

I started a new thread about the load tests I did to reproduce the problem and what my results were. It’s a long read but it’s probably helpful to anyone reading this thread:

Thanks for all your help. I appreciate it!

The stuff meteor delivers on first download can be put in a cdn like

cloudfront

Okay I read your other post. The issue wasn’t cdn stuff. And happy you finally realised that kadira was misleading and that you weren’t having db issues as I tried to point out above.

Just be careful. With Redis oplog we had some weird cases of the event of update being fired but the data was not available in the client. As soon as we turned it off the default reactivity worked again.

And if you use namedContexts with SimpleSchema, there is currently a Pull request with a bug fix for it.