I’m afraid I cannot yet update to 3.1.1 beta, since I really need to be able to use montiapm in my project, and this bug keeps me from doing so.

Search for how to download and analyze a heap snapshot of your Node server. Every time I’ve done this and looked at the objects using memory, I’ve normally discovered two things:

- That this object shouldn’t be occupying that amount of memory

- Looking at code where the object is used, it becomes obvious why the memory leak happens

The only caveat is when the memory leak happens not in your own code but in a package, library, or framework you are using. Then it’s not that obvious why it leaks, but it’s normally still obvious that this object should not be using this amount of space.
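As a minimal sketch of how such a snapshot can be produced without extra packages, Node's built-in `v8.writeHeapSnapshot()` writes a `.heapsnapshot` file that Chrome DevTools can load in its Memory tab. The helper names `snapshotPath` and `dumpHeap`, the temp-directory default, and the file naming are my own assumptions for illustration. Keep in mind that writing a snapshot blocks the event loop and needs a lot of memory itself.

```javascript
const v8 = require('node:v8');
const path = require('node:path');
const os = require('node:os');

// Hypothetical helper: builds a timestamped file name so that repeated
// dumps do not overwrite each other.
function snapshotPath(dir, label, now = new Date()) {
  const stamp = now.toISOString().replace(/[:.]/g, '-');
  return path.join(dir, `${label}-${stamp}.heapsnapshot`);
}

// Writes a heap snapshot and returns the name of the file that was written.
// v8.writeHeapSnapshot() is synchronous, so the server is unresponsive
// while the dump is in progress.
function dumpHeap(dir = os.tmpdir(), label = 'heap') {
  return v8.writeHeapSnapshot(snapshotPath(dir, label));
}

// One possible trigger (an assumption, not a recommendation):
// process.on('SIGUSR2', () => console.log('snapshot:', dumpHeap()));
```

Taking two dumps some hours apart and using the comparison view in DevTools usually shows which object types grew between them.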

That’s a very good strategy.

Memory leak problems usually get noticed only on the production server, because that is where the app runs long enough.

It looks like most memory leak issues come from using closures. There is a video about it, and TBH the first time I watched it I didn’t fully understand it.
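To illustrate the closure pattern in general terms (this is my own toy example, not something taken from the video), here is a sketch in which a long-lived listener keeps a whole document alive although it only needs one field. The names `listeners`, `subscribeLeaky`, and `subscribeLean` are hypothetical.

```javascript
// Long-lived registry, e.g. listeners that live as long as the server does.
const listeners = new Set();

// Leaky variant: the callback closes over the whole `doc`, so every field of
// the document stays reachable for as long as the listener is registered.
function subscribeLeaky(doc) {
  const listener = () => doc._id;
  listeners.add(listener);
  return () => listeners.delete(listener);
}

// Leaner variant: copy only what the callback needs. The closure now
// references just `id`, so the rest of the document can be collected.
function subscribeLean(doc) {
  const id = doc._id;
  const listener = () => id;
  listeners.add(listener);
  return () => listeners.delete(listener);
}
```

In both variants the real fix for a leak is calling the returned unsubscribe function; the lean variant only limits how much memory a forgotten listener holds on to.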

Thanks for that hint, that’s exactly what I meant. I added heapdump to my project and am currently trying to get heap dumps off my machine for further investigation. However, heapdump seems to be quite resource-intensive…

Thanks, will try to get as much knowledge from that video as possible.

In my case, in my staging env the issue doesn’t occur, but on that server I don’t have many sessions (only 0-2 maybe), so I guess the issue is related to client connections.

(also cc @paulishca)

I saw that you mentioned a REST API in MontiAPM Agent interfering with HTTP requests. If you plug in your REST middleware with WebApp.handlers.use(), read on.

Our app is light on MongoDB usage, but we proxy thousands of requests per minute to a backend service using http-proxy. While experiencing steady memory growth, we also noticed a slowdown in the proxied requests after a certain memory threshold.

The problem is that in our case it was the resident size (RSS) growing due to external memory - the heap is relatively stable under simulated load, for instance.
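For anyone who wants to check the same split on their own server, `process.memoryUsage()` reports RSS, heap, and external memory separately. Below is a minimal sketch that logs them periodically; the helper names `memorySnapshot` and `startMemoryLog`, the one-minute default, and the JSON log format are assumptions of mine.

```javascript
// Returns the current memory figures of this process, rounded to 0.1 MB.
function memorySnapshot() {
  const { rss, heapTotal, heapUsed, external, arrayBuffers } = process.memoryUsage();
  const toMB = (bytes) => Math.round((bytes / 1024 / 1024) * 10) / 10;
  return {
    rssMB: toMB(rss),                   // total resident memory of the process
    heapTotalMB: toMB(heapTotal),       // memory reserved for the V8 heap
    heapUsedMB: toMB(heapUsed),         // memory actually used by JS objects
    externalMB: toMB(external),         // C++ objects bound to JS objects
    arrayBuffersMB: toMB(arrayBuffers), // ArrayBuffers, including Buffers
  };
}

// Logs one JSON line per interval. The timer is unref'ed so that it never
// keeps the process alive on its own.
function startMemoryLog(intervalMs = 60000, log = console.log) {
  const timer = setInterval(() => {
    log(JSON.stringify({ at: new Date().toISOString(), ...memorySnapshot() }));
  }, intervalMs);
  timer.unref();
  return timer;
}
```

If `rssMB` keeps climbing while `heapUsedMB` stays flat, a heap snapshot will probably not show the culprit, and the `externalMB` and `arrayBuffersMB` figures are the more interesting ones to watch.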

I realised WebApp.handlers, as opposed to WebApp.rawHandlers, runs all callbacks potentially added by the app and third-party packages, with those being inserted before the default handler (for an example, see Inject._hijackWrite in meteorhacks:inject-initial/lib/inject-core.js - that would be called for every request hitting the middleware plugged in WebApp.handlers). Recently, after mounting the proxy with WebApp.rawHandlers.use() there has been a dramatic drop in what clearly was a memory leak.
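Roughly, the change looks like the sketch below. It is not our production code: the helper name `mountProxy`, the `/backend` mount path, and the error handling are made up for illustration, and `WebApp` and the http-proxy instance are passed in as parameters so the snippet stays self-contained.

```javascript
// Mounts a proxy on WebApp.rawHandlers, so requests to `mountPath` are handled
// before the middleware that app code and packages register on WebApp.handlers.
function mountProxy(webApp, proxy, target, mountPath = '/backend') {
  webApp.rawHandlers.use(mountPath, (req, res) => {
    // proxy.web(req, res, options, callback) is the http-proxy call that
    // forwards the request; the callback receives proxying errors.
    proxy.web(req, res, { target }, (err) => {
      console.error('proxy error:', err.message);
      if (!res.headersSent) res.statusCode = 502;
      res.end('Bad gateway');
    });
  });
}

// Assumed wiring inside a Meteor server module:
//   import { WebApp } from 'meteor/webapp';
//   import httpProxy from 'http-proxy';
//   mountProxy(WebApp, httpProxy.createProxyServer({}), 'http://localhost:8080');
```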

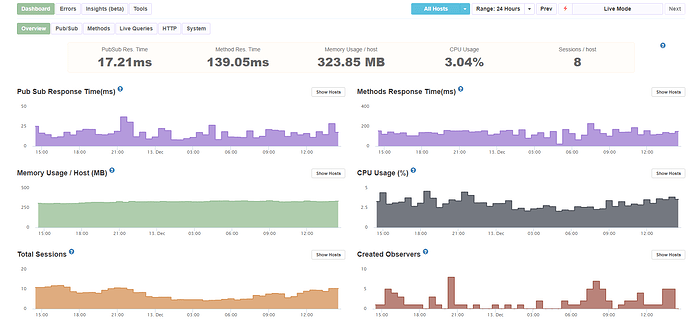

However, there was still something going on, and only after upgrading to 3.1.1-beta.1 did I notice the memory being more stable, though I have only been running this particular instance on the beta version for 25 hours.

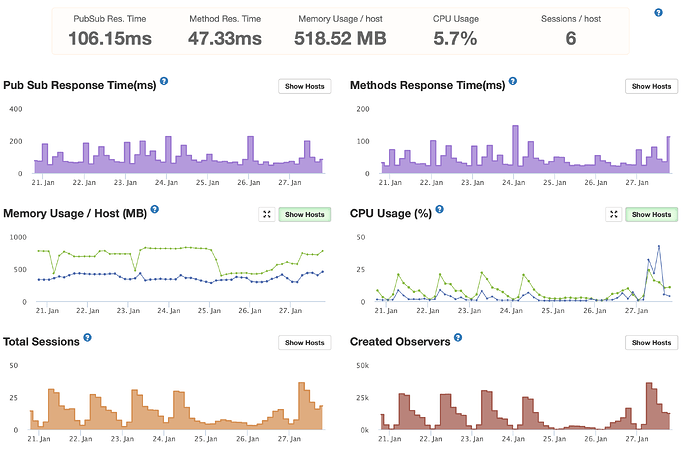

So far, there is still a small upwards trend, if you ask me:

Thanks for your reply! Right now I am still using express via an npm package, since this was necessary in 2.16. Now that Meteor uses built-in express, I will refactor this to use WebApp.handlers; at least that’s what I tried. In the GitHub issue related to the post you mentioned, you’ll find a repo with a branch that shows that there are issues related to montiapm:agent if you do WebApp.handlers.use().

So I will play around with rawHandlers to see if this helps, so I can upgrade to 3.1.

I’m analyzing a heapdump comparison of my Meteor app and noticed a significant number of Date objects remaining in memory. These are the _createdAt metadata properties of documents in my db, traced through MongoDB’s OplogObserveDriver, _observeDriver, and related internal mechanisms.

It appears these Date objects are part of observed collections or documents in Mongo, and they’re not being released from RAM. While these may involve observeChanges or similar reactive mechanisms, the total retained size is growing significantly. (Alloc size is a 27MB increase via a delta of 270k new Date objects.)

I’ve been drilling my machine with Meteor-Down to put some load on it, and while this went OK with nothing remarkable, the machine still gains ~40MB per day.
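A figure like this can be derived from periodic memory samples with a simple least-squares fit, which is less sensitive to single spikes than comparing the first and last value. The sketch below is only an illustration; the function name `growthPerDay` and the sample shape `{ t, bytes }` are assumptions.

```javascript
const DAY_MS = 24 * 60 * 60 * 1000;

// Estimates memory growth in bytes per day from samples of the form
// { t: <epoch milliseconds>, bytes: <memory in bytes> } using the slope
// of a least-squares regression line.
function growthPerDay(samples) {
  const n = samples.length;
  if (n < 2) throw new Error('need at least two samples');
  const meanT = samples.reduce((sum, s) => sum + s.t, 0) / n;
  const meanB = samples.reduce((sum, s) => sum + s.bytes, 0) / n;
  let num = 0;
  let den = 0;
  for (const s of samples) {
    const dt = s.t - meanT;
    num += dt * (s.bytes - meanB);
    den += dt * dt;
  }
  if (den === 0) throw new Error('samples must span more than one point in time');
  return (num / den) * DAY_MS;
}
```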

I would confirm that yes, Meteor 3.1 has this problem (for me) on a mostly idle machine.

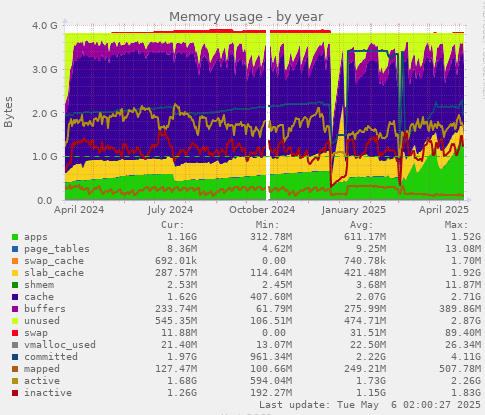

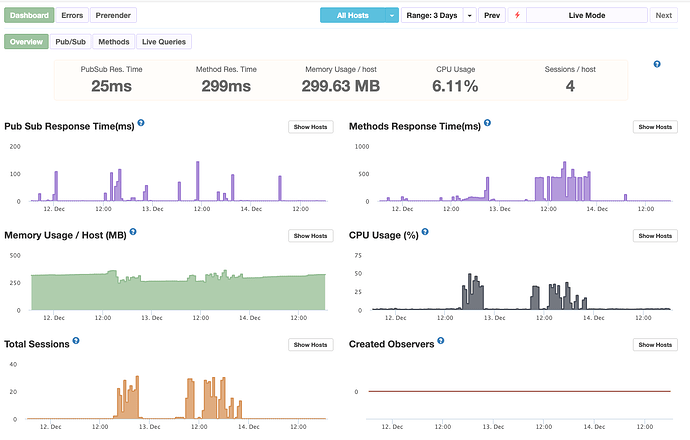

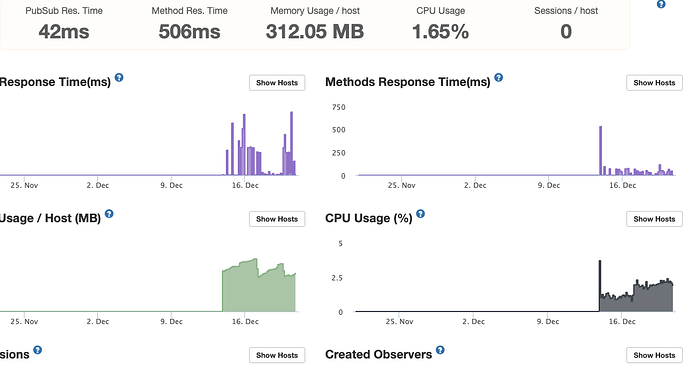

A week trend:

That deserves an issue report for the core team to investigate. Do you think this can be reproduced with a minimal subscription code?

Yes, maybe, although I’m not sure whether this is due to my implementation or an effect that originates in the core of Meteor. I also find it hard to reproduce, since it only becomes obvious on a busy server. Is there a best-practice solution for emulating load on a dev/staging server?

You can check this post Meteor 3 Performance: Wins, Challenges and the Path Forward - #16 by nachocodoner

I don’t have any experience with artillery yet, but it sounds fun; I will give it a try. In the meantime I will check whether 3.1.1-beta.1 helps with that issue; I can upgrade now, thanks to @zodern’s latest update of montiapm agent beta 10.

Update: I just tried to run my server tests on GitHub; however, they fail because they reach the heap limit (2GB). I never had a memory issue with mocha tests before.

As I understand, the memory leaks in your project began appearing between versions 2.16 and 3.0.4, correct? Are you saying 3.1.1-beta.1 made things worse, or was the issue already significant? Did this occur as part of your app’s runtime?

You now mention issues with server tests. You are referring to problems when running meteor test or meteor test-packages, right? Did this also happen with version 3.0.4? Are the memory issues reaching heap limits consistently? Does it occur repeatedly during local runs? Did your CI pipeline previously cache data? I’ve encountered test-related issues that were resolved by adjusting CI caching. Meteor caches data during test runs, which makes subsequent runs faster and less resource-intensive, especially for large projects. Could something similar apply to your setup, working in 2.x but failing now on 3.x?

I haven’t experienced memory leaks with Meteor 3. This might be related to a package or a specific setup of your app. Could you share a list of the packages you’re using? You can also reach out privately on the forums if you prefer. On your end, try removing packages incrementally to isolate the issue in both runtime and server tests. For example, temporarily remove montiapm:agent and observe any improvements, then continue with other packages that can easily be removed or quickly adapted.

Keep us updated as you troubleshoot. Your feedback on the migration to 3.0 is valuable and will help others.

Yes, the leaks started right after upgrading from 2.16 to 3.0.4.

I couldn’t test 3.1.1-beta.1 yet, since my CI/CD requires all tests to pass before deployment, and tests via meteor test and mocha fail due to insufficient memory (no issue on 3.0.4, nor on 3.1.0). I will check whether local tests also fail. I have no caching in my CI pipeline.

I now have 3.1 up and running and expect a busy day. My first impression is that memory usage on the web containers is significantly better; I’ll report later how memory got freed after today’s usage.

This is my packages file

meteor-base@1.5.2 # Packages every Meteor app needs to have

mobile-experience@1.1.2 # Packages for a great mobile UX

mongo@2.0.3 # The database Meteor supports right now

reactive-var@1.0.13 # Reactive variable for tracker

standard-minifier-css@1.9.3 # CSS minifier run for production mode

standard-minifier-js@3.0.0 # JS minifier run for production mode

es5-shim@4.8.1 # ECMAScript 5 compatibility for older browsers

ecmascript@0.16.10 # Enable ECMAScript2015+ syntax in app code

typescript@5.6.3 # Enable TypeScript syntax in .ts and .tsx modules

shell-server@0.6.1 # Server-side component of the `meteor shell` command

hot-module-replacement@0.5.4 # Update client in development without reloading the page

static-html@1.4.0 # Define static page content in .html files

react-meteor-data@3.0.1 # React higher-order component for reactively tracking Meteor data

accounts-password@3.0.3

alanning:roles

ostrio:files

session@1.2.2

check@1.4.4

http@3.0.0

mdg:validated-method

email@3.1.1

aldeed:collection2

percolate:migrations

cinn:multitenancy

tracker@1.3.4

meteortesting:mocha@3.2.0

dburles:factory

accounts-2fa@3.0.1

bratelefant:meteor-api-keys

dburles:mongo-collection-instances

leaonline:oauth2-server

accounts-passwordless@3.0.0

matb33:collection-hooks

montiapm:profiler@1.7.0-beta.2

montiapm:agent@3.0.0-beta.10

Roles, multitenancy, oauth2, and files are local packages.

All my production apps on 3.1.1-beta are still seeing a constant increase in memory, and under the new Meteor version the “idle” CPU is double what I had under 3.1.

The lower memory levels are the times when I deployed a new bundle. I will leave it running over the weekend to see where it goes.

Quick update, since my service is now experiencing some load again from users after the holidays: I updated to 3.1, and the memory leaks in production seem to be resolved.

However, if I update to 3.1.1, my mocha tests still fail because memory is running out (even if I set it to 8GB). No issue if I downgrade to 3.1.
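One thing that may be worth ruling out is whether the raised limit actually reaches the process that runs the tests. A minimal sketch for this is to log V8's effective heap limit at the start of a server test file; the helper name `heapLimitMB` is hypothetical, and where exactly to put the log line is an assumption.

```javascript
const v8 = require('node:v8');

// Returns the heap size limit that V8 is actually using in this process,
// in MB. If this still shows the default after raising the limit, the
// setting is not being passed through to this process.
function heapLimitMB() {
  return Math.round(v8.getHeapStatistics().heap_size_limit / 1024 / 1024);
}

console.log(`V8 heap limit in this process: ${heapLimitMB()} MB`);
```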

Same here v 3.1.1, gained 70MB in 2 days, after a new deploy, with very light usage. Once it goes up, it doesn’t come down.

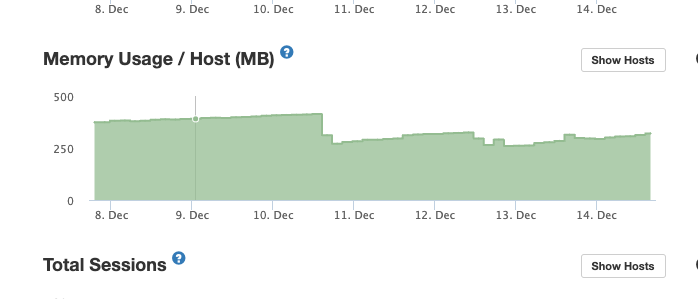

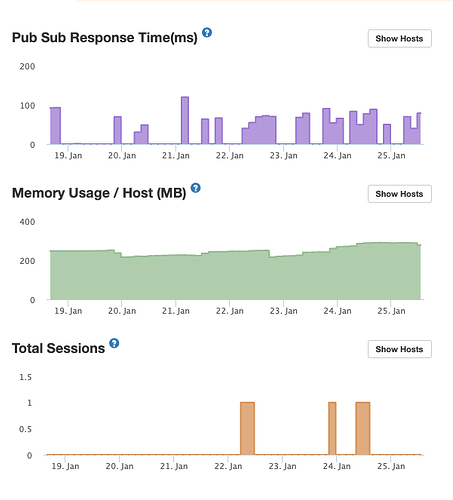

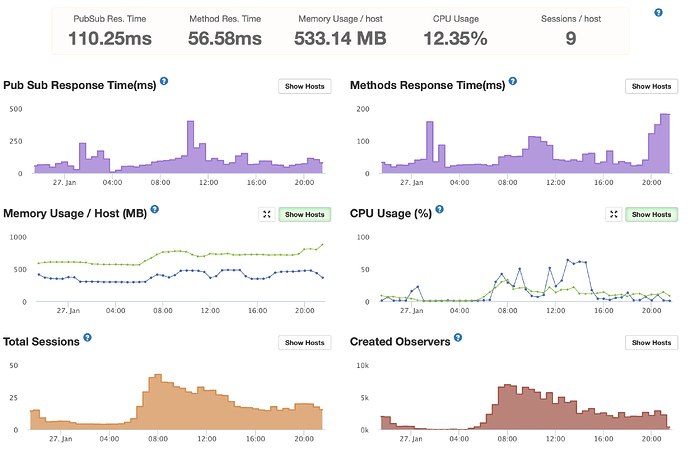

I also wanted to add two screenshots: one covers the last 7 days and one is from today (which was quite busy; the blue line in the memory diagram is a queue worker, the green one is web). I had deployed on 21. Jan, 23. Jan and 25. Jan.

Just upgraded to 3.1.1 in production, and really curious how tomorrow will go. RSS memory is really high, but heap seems ok right now.

Same happening here. Self-hosting. RAM usage of the mongo service goes up until mongo crashes and gets restarted automatically. It happens on all our servers that we update to Meteor v3.

Source code: Openki / Openki · GitLab

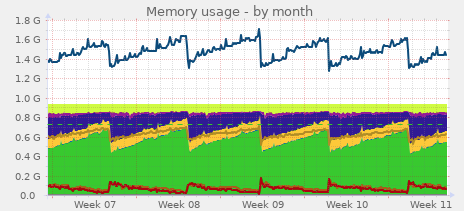

Other machine: Guess where we updated to v3: